Why are we doing this?

Right from our inception, learning has been a way of life at Ideas2IT. We have always built the learning habit into our work, and now we have decided to take it up a notch. The only question on our minds: how?

Identifying and betting on cutting-edge technology is a big part of who we are. At present, NLP and chatbots are two technological trends that have piqued our interest. We’ve decided to invest in them and, true to our way of learning, to look for a real-world problem where we can apply them. What better place to look than within?

The amount of data we handle has been on the rise, and we needed an efficient way to collect and manage it. So we decided to build a chatbot for the job. And we decided to make a competition out of it.

Presenting the Ideas2IT Chatbot Hackathon!

So, what are the rules then?

With platforms like Amazon Lex and AWS Lambda available, building a chatbot has become that much more interesting. Three teams will each attempt to create a workable and adaptable chatbot. The bot has to be interactive, and the data it collects must be captured at the backend in a spreadsheet. And guess what: just like any other game, this one is against the clock. The team that delivers the best chatbot on or before 30th June 2017 wins!

We keep talking about ‘having fun at work’. How’s this for fun?

What makes a winner bot?

- Impeccable Product Design

- Tech Approach

- Usability

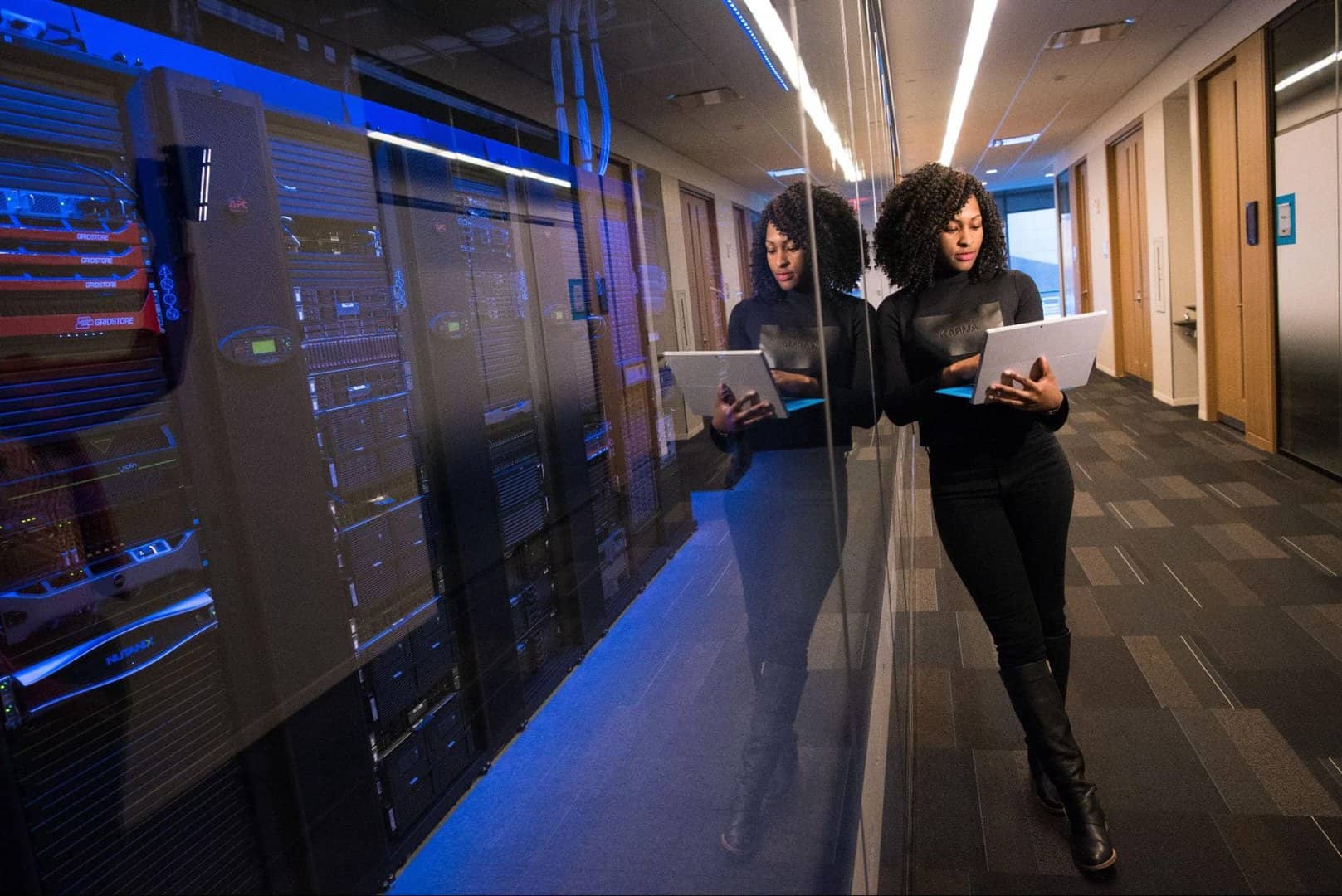

Also, when we announce the winners, we do it in style! Watch this space for updates!

The hackathon teams

Without further ado, here are the three teams going head to head in the race to build a workable chatbot for us:

- Rajasekar & Team
- Veera & Team
- Ramanavel & Team

Team members: Madan, Lokesh, Kumar A, Aswin Raj, Yogesh Kumar, Rajesh, Dinakaran, Praveen Raj, Soundar, Kathiresan, Rajasankar, Sundaresh, Mohan, Vinoth, Gowthaman, Pravin Kumar T, Ananthbabu and Prem.

We can’t wait to see how these teams put their ideas to work.

Let the games begin!