The Emergence of Voicebots

Let us consider the real world of today. If a user wanted to buy a sensible set of clothes for his daily work, he would either

- walk into a store, wander about and try out different options,

- walk into a store, find a salesperson, explain his needs and get recommendations or

- open up the store’s website and browse the collections.

While the first two options are more natural to how humans operate, they are more time consuming and tedious than clicking and ordering from the comfort of his couch. But this user wants to have his cake and eat it too, as do most of us. We are forever looking for personalized content, personalized recommendations and personalized everything, with as little work required from us as possible.

Even if the websites and apps of today have personalization built in, it is mostly content-related personalization, and it still feels like you need to do everything yourself: click and search and browse and choose and pay and track. There has been a growing need to make it easier than this. What if the user had a personal assistant to help him choose better and manage all the mundane actions? That’s where voice assistants and chatbots come in. These are systems that connect to service providers while having targeted knowledge about each user’s behavior and preferences.

With chatbots you get a streamlined interface of actual communication. It is structured to understand the user’s needs and choices and suggest options. It is a much more natural interaction than a point-and-click website. And of course, engineers had to make it more awesome. Users can now “talk” and get their scheduling taken care of, have their finances managed, get their daily dose of news, get their groceries ordered, be reminded of their tasks and even get suggestions based on their interests. It is just like having an ever helpful and competent personal assistant, but one with a more specific focus.

Amazon, Google, Microsoft, Facebook and Apple, along with a bunch of other highly competent technology teams, have been releasing voice products over the past couple of years with a lot of potential and uses. They have

- added voice assistants such as Siri and Google Assistant to their mobile phones, to integrate with third-party custom apps and perform native tasks as well (check our previous blog for a primer on voice assistants in mobile phones)

- introduced new products in the market like Amazon Echo, Google Home and Apple HomePod that act mainly as “smart home managers”, taking care of the smart devices at home (like your thermostat and your TV), reminding you of appointments, helping you with usual purchases, playing your music and answering your generic questions.

Wait, Why Should You Care?

If you have been following the news over the past year, you are sure to have caught something to the tune of “people are using messaging platforms more than social networks”. And the scale here is actually billions of monthly users, not just one specific group. Everyone seems to be “chatting” online, and of course businesses are heading to where the users are. You want in too, don’t you?

There is also a slowly but steadily growing feeling among users that installing multiple apps on their phones to do multiple things is not “natural”. For some repetitive uses, native apps definitely make sense, but if the actions your users perform are spread out over time and different each time, they would rather look for an easier way to get things done than install that one app for that one time.

So, the logical move for the industry was to integrate its services into existing messaging platforms like Facebook Messenger and iMessage. Businesses wanted “bots” that “communicate” with you when needed and solve your needs. When you want to buy a pair of shoes, pick up a conversation with the Nike bot on Facebook.

While it looked like these messaging platforms were all set for the long haul, the tremendous strides in natural language processing and machine learning made adding voice to chatbots, so that users can have a real conversation with the service, the obvious next step.

Still doubtful?

If you are still not convinced your service needs to adopt voice, just look at the numbers:

- According to an article in The Verge, Amazon beat expectations in the fourth quarter of 2017, continued to grow its Amazon Web Services business, and said that it sold “tens of millions” of Echo hardware devices last year, with its cheapest products making up the bulk of the sales.

- Google boasts of its sales numbers as well. They say, “we sold more than one Google Home device every second since Google Home Mini started shipping in October (2017).” At that rate, it amounts to around 10 million devices to date.

- Apple’s HomePod went on sale last week and, as usual for Apple, is expected to sell in large numbers every quarter.
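The “around 10 million” figure in the Google item above can be sanity-checked with a little arithmetic. The snippet below is only a rough sketch: the two dates are assumptions (a shipping start in mid-October 2017 and a writing date in mid-February 2018), and `devices_at_one_per_second` is a name made up for this illustration.

```python
from datetime import date

SECONDS_PER_DAY = 24 * 60 * 60


def devices_at_one_per_second(start: date, end: date) -> int:
    """Devices sold between two dates at a steady rate of one per second."""
    return (end - start).days * SECONDS_PER_DAY


# Assumed dates, not taken from the quote: shipping starts in mid-October 2017
# and the count is taken in mid-February 2018.
estimate = devices_at_one_per_second(date(2017, 10, 19), date(2018, 2, 12))
print(f"{estimate:,} devices")
```

With these assumed dates the estimate lands just above 10 million, which is consistent with the rounded figure quoted.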

Understanding when to have a Voice Interface to your bot

Context drives the decision to lean towards a voice interface or a visual interface for your chatbot. Consider the following cases:

- You are walking to your car with your hands full of groceries and a baby. It is easier for you to “talk” to Siri and get your car’s doors opened than to open the companion app for your car on your phone.

- You are driving your car and you see an emergency roadblock asking you to take a diversion. It is easier for you to talk to Siri again (in CarPlay) and ask for new directions to your destination, and then “look” at the car’s dashboard to get a feel for the suggested route. Siri can get back to “talking” to you as you drive, for every turn you need to make.

So, defining the context and understanding the viability of voice versus visual interfaces makes or breaks your solution. Check out our previous article on voice user interface design to find out the best practices.

For example, we see a lot of retailers adopting the voice interface quite quickly. It is easier for a user to order his regular groceries from Amazon, Whole Foods or his local provider using just his voice. In fact, statistics show that the percentage of users ordering products through Amazon Echo or Google Home increased manifold towards the end of 2017.

Another interesting domain is cars and navigation. Apple introduced CarPlay and Google has Android Auto. Both help with attending calls, responding to texts, using maps for navigation, controlling internal car systems for temperature and mechanics, playing music and even reading out your notifications.

And in an exciting turn of events, Amazon and Microsoft have announced a partnership to open up Alexa and Cortana to each other, so that users of one platform or device can use the services of the other.

What goes into building a Voicebot?

There is something important to understand before we dive any further into this world. These bots

- are designed to have a specific purpose

- are programmed to understand casual natural language only to an extent (they are limited to the scope of the data they are trained on)

Remember these:

- While it makes sense to have AI built in, it is totally fine to build a bot that strictly follows rules and doesn’t need even a narrowly focused AI.

- You don’t need expertise in AI to have your bot support it. There are numerous plug-and-play services, ranging from the complex IBM Watson to third-party providers that help extend your ecommerce service.

- Successful bots have a great user experience built in. So, plan a neat workflow.

- Decide which platform/ecosystem the bot is going to be supported on. Your options now are Apple, Google, Microsoft and Amazon.

- Finalize whether it is going to be a standalone bot or a bot extending an app’s services.

When building the voicebot, you would typically have to follow these steps:

- Design your solution so that the bot is available always.

- Make it scalable to handle an increase in load.

- Enable the bot to understand user requests, with simple or complex understanding as the case requires.

- Enable it to handle the user request and perform an action.

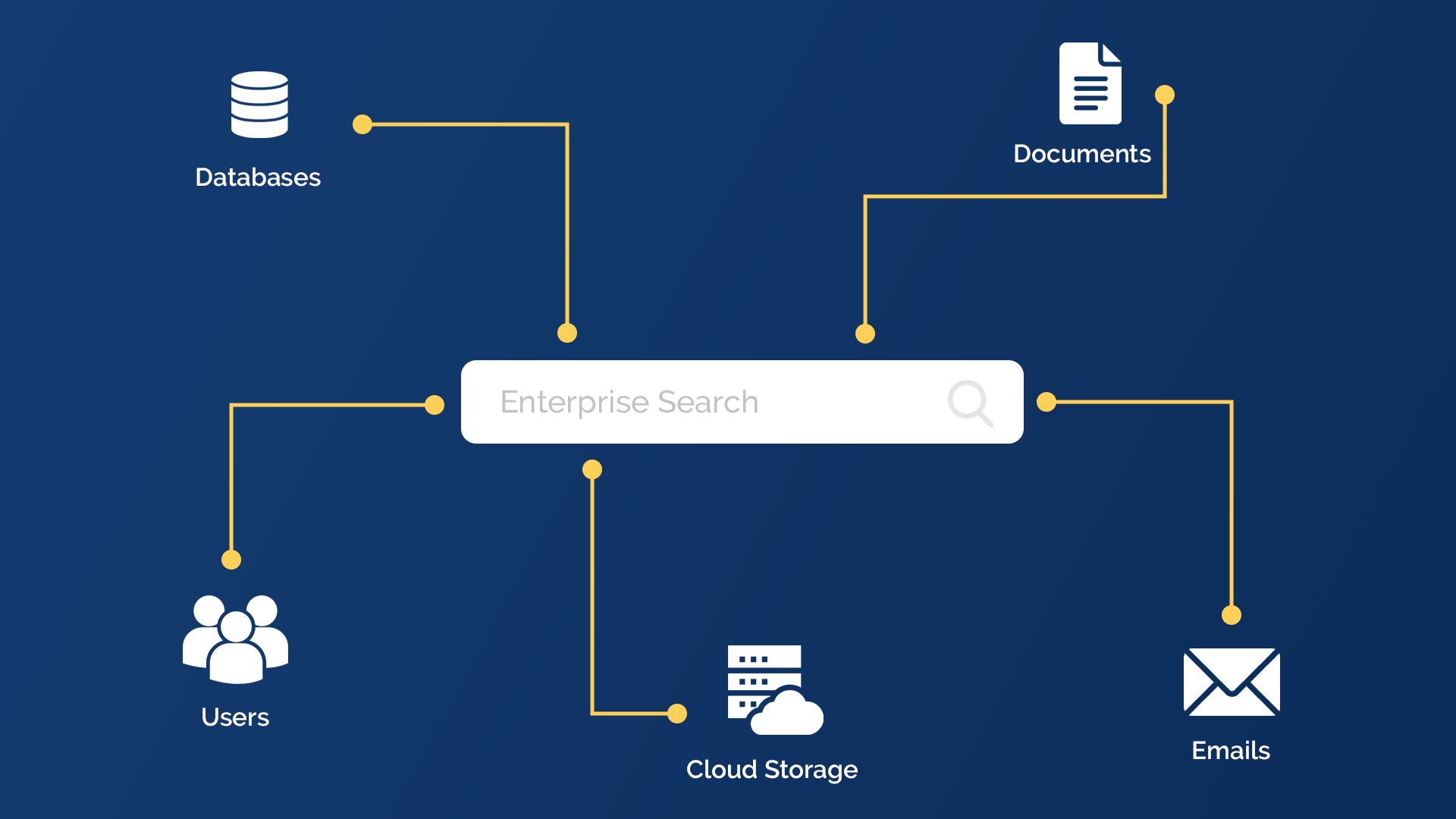

- Add integrations to connect to internal APIs or external services as necessary.

- Respond with results back in natural language.

- Enable human takeover or handoff when the need arises.

- Add visual cues to the conversation where possible to show processing (possible with Siri or Google Assistant on mobile phones, and even with smart speakers such as Amazon Echo, Apple HomePod and Google Home; difficult with telephonic voice agents).
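The core steps above (understanding a request, acting on it, replying in natural language and handing off to a human) can be sketched as a small rule-based bot. This is a minimal illustration, not a production design: the intents, patterns, handler functions and the two-failure handoff threshold are all hypothetical choices made for the example.

```python
import re
from typing import Callable, Dict, Optional, Tuple

# Hypothetical rules: intent name -> pattern that signals it.
RULES = {
    "order_status": re.compile(r"\b(where|track|status)\b.*\border\b"),
    "reorder": re.compile(r"\b(reorder|order again|usual)\b"),
}

MAX_FAILED_TURNS = 2  # assumed threshold before a human takes over
HANDOFF_MESSAGE = "Let me connect you to a member of our team."
RETRY_MESSAGE = "Sorry, I did not catch that. You can track an order or reorder your usual items."


def understand(utterance: str) -> Optional[str]:
    """Return the first intent whose pattern matches, or None."""
    text = utterance.lower()
    for intent, pattern in RULES.items():
        if pattern.search(text):
            return intent
    return None


# Stand-ins for calls to internal APIs or external services.
def order_status(user: str) -> str:
    return f"Your last order is out for delivery, {user}."


def reorder(user: str) -> str:
    return f"Done, {user}. I have placed your usual order."


HANDLERS: Dict[str, Callable[[str], str]] = {
    "order_status": order_status,
    "reorder": reorder,
}


def respond(user: str, utterance: str, failed_turns: int = 0) -> Tuple[str, int]:
    """Return the reply and the updated count of consecutive failed turns."""
    intent = understand(utterance)
    if intent is None:
        failed_turns += 1
        if failed_turns >= MAX_FAILED_TURNS:
            return HANDOFF_MESSAGE, failed_turns
        return RETRY_MESSAGE, failed_turns
    return HANDLERS[intent](user), 0


print(respond("Sam", "Where is my order?"))
```

Keeping the failed-turn count outside the function makes each call stateless, which is one simple way to keep such a bot easy to scale.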

If you want to go the extra mile, you need to consider these:

- Make your bot continually learn from its interaction with users - both generic and user-specific.

- Have deep integration with services and provide a near real-life experience.

- Don’t overpromise and fail; make the goal of the bot very clear.

- Integrate efficient error handling and recovery.

- Build escalation workflows that involve live human support.

- Track active and engaged sessions of a user to combat churn and know retention rates.

- Provide ways to restart the entire interaction.

- Make response times shorter than expected from websites and apps.

- Support multiple natural languages.

- Enable active or supervised learning, where conversations are analyzed and new flows and corrections are added regularly.

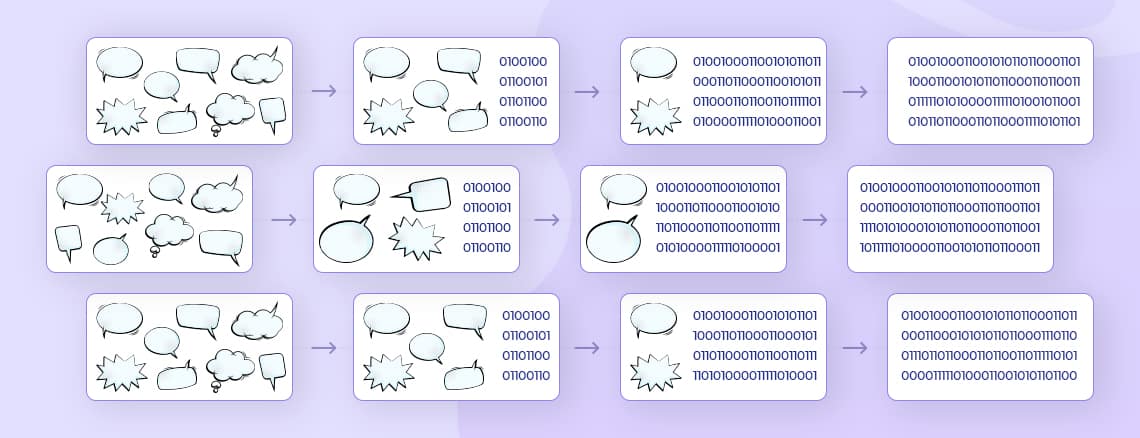

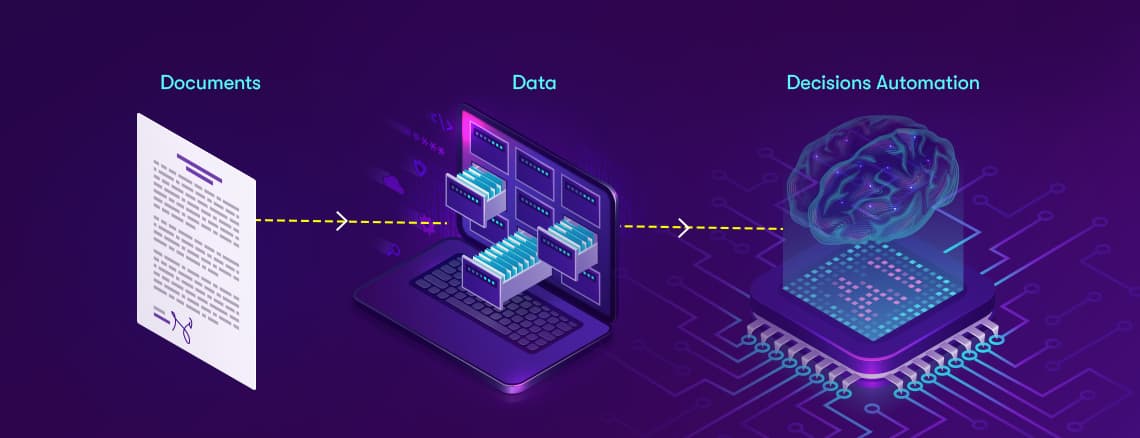

What typically goes into the functioning of these voice assistants' services?

Let us throw in something to excite the tech enthusiast in you. In their basic anatomy, each of the existing voice services needs these three components:

- Speech to Text - Recognize and transform incoming audio data into textual transcriptions

- Language Processing - Identify intents and parameters from the transcriptions and determine next steps. This involves training the service in specific areas within the defined scope and programming response formats to be populated with data.

- Text to Speech - Turn the response into user-understandable text and generate it as an audio stream to play back to the user
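To make the three components concrete, here is a toy pipeline that chains them together. It is a sketch under heavy assumptions: the speech-to-text and text-to-speech functions are stand-ins that simply decode and encode UTF-8 bytes where a real system would call a speech service, and the single reminder intent, its pattern and the response templates are invented for the example.

```python
import re
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Interpretation:
    intent: str
    parameters: Dict[str, str] = field(default_factory=dict)


def speech_to_text(audio: bytes) -> str:
    # Stand-in: pretend the audio is already UTF-8 text.
    # A real system would call a speech recognition service here.
    return audio.decode("utf-8")


# Hypothetical pattern for one supported intent and its two parameters.
REMINDER = re.compile(
    r"remind me to (?P<task>.+?) at (?P<time>\d{1,2}(?::\d{2})?\s?(?:am|pm))"
)


def process_language(transcript: str) -> Interpretation:
    """Identify the intent and its parameters from the transcription."""
    match = REMINDER.search(transcript.lower())
    if match:
        return Interpretation("set_reminder", match.groupdict())
    return Interpretation("unknown")


# Response formats that get populated with the extracted data.
RESPONSE_TEMPLATES = {
    "set_reminder": "Okay, I will remind you to {task} at {time}.",
    "unknown": "Sorry, I can only set reminders right now.",
}


def text_to_speech(text: str) -> bytes:
    # Stand-in: a real system would synthesize an audio stream here.
    return text.encode("utf-8")


def handle_audio(audio: bytes) -> bytes:
    transcript = speech_to_text(audio)
    result = process_language(transcript)
    reply = RESPONSE_TEMPLATES[result.intent].format(**result.parameters)
    return text_to_speech(reply)


print(handle_audio(b"Remind me to call mom at 5 pm").decode("utf-8"))
```

Because each stage only depends on the output of the previous one, any of the three stand-ins could be swapped for a hosted service without touching the others.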

Challenges with Voicebots

- Keeping latency to a minimum. With visual computing interfaces, users expect fast response times but accept some latency while a request is performed. A voice system gets no such allowance: the user is simply waiting and expects an immediate reply, which can be quite challenging to achieve.

- Handling change in conversation flow. When the user strays, voice agents must pull the user back to the intended purpose of the conversation without sounding robotic (which would defeat the main purpose).

- Understanding emotions and tone. This is quite challenging to achieve, and very few services (e.g. Watson Tone Analyzer) offer this support today.

- Handling voice quality. The efficiency of voice agents depends on the underlying voice recognition service. Background noise, multiple speakers and accents are some of the areas most services are working on improving.
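For the latency challenge above, one possible way to soften the wait is to speak a short acknowledgement when the backend takes longer than a set time budget. This technique is not prescribed by anything above; it is offered only as an illustrative sketch, and the function name, the filler phrase, the budget and the simulated slow lookup are all assumptions.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout
from typing import Callable, Iterator

FILLER = "One moment while I check that."


def utterances(task: Callable[[], str], budget_seconds: float) -> Iterator[str]:
    """Yield what the bot should say, in order, while the task runs."""
    with ThreadPoolExecutor(max_workers=1) as executor:
        future = executor.submit(task)
        try:
            answer = future.result(timeout=budget_seconds)
        except FutureTimeout:
            yield FILLER               # the caller can speak this right away
            answer = future.result()   # then wait for the real answer
        yield answer


def slow_lookup() -> str:
    time.sleep(0.3)  # simulated slow backend call
    return "Your order arrives tomorrow."


for line in utterances(slow_lookup, budget_seconds=0.1):
    print(line)
```

Yielding the utterances one at a time lets the caller start playing the acknowledgement while the real answer is still being computed.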

So, what next?

Did we interest you enough to consider adding voice to your service? Do you want us to explore the possibilities for you? Do get in touch with us. Also, check out our other blog posts for more details on voice assistants and chatbots.
