1. Why Generic LLMs Break in Enterprise

Understand why out-of-the-box models fail on compliance requirements, policy-heavy content, and fragmented internal data, and what it costs to ignore this gap.

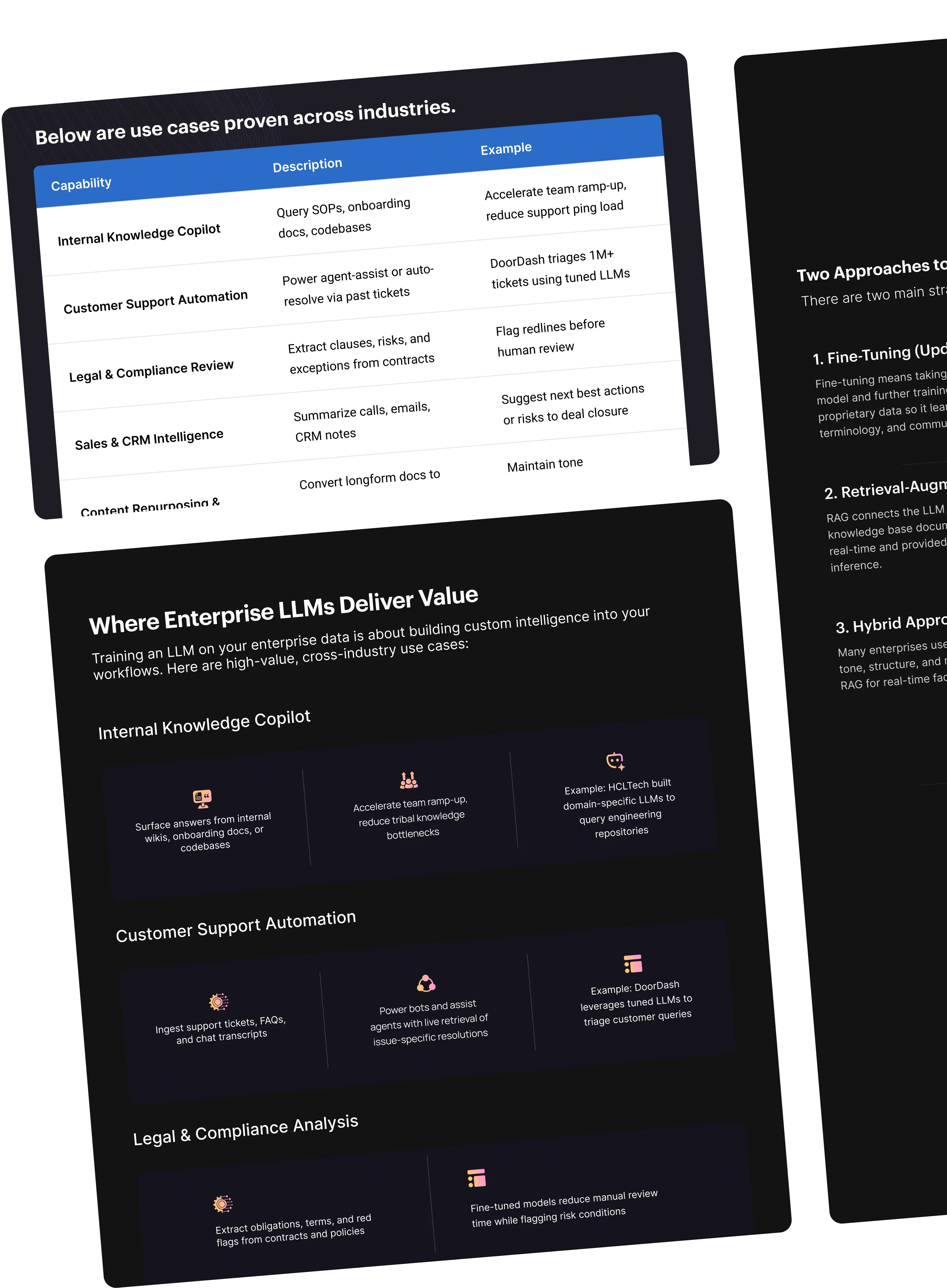

2. Fine-Tuning vs RAG: The Real Differences

Clear decision criteria for when to fine-tune, when to use RAG, and why many organizations combine both. Includes a quick comparison of accuracy, latency, cost, and compliance trade-offs.

3. Preparing Your Enterprise Data

Practical guidance on cleaning, de-duplicating, masking PII, and structuring documents. Learn how to format data for fine-tuning vs RAG so your model actually performs.

4. Architecture and Evaluation Framework

What a production-ready setup looks like: model hosting, GPU sizing, vector DB options, and orchestration tools. Plus, how to test for hallucinations, retrieval quality, and latency before deployment.

5. Governance and Lifecycle Management

The controls that keep LLMs useful and compliant at scale: feedback loops, audit trails, retraining cadence, and cost optimization strategies.
