Representing Audio Data: An In-Depth Look at STFT and MFCC

TL'DR

Introduction:

This blog is about how audio data can be represented as STFT, MFCC and we show how fourier transformation helps in getting MFCC and STFT from audio.Later on we show how MFCC is advantageous over STFT.

This blog is actually Part-2 from the Audio classification on Edge AI blog series. To know about the Part-1 of the series where we saw how audio data is and how its related to Fourier transform.I am going to paste the link to Part-1 of the series below:

Understanding Short-time Fourier transform (STFT)

Short Time Fourier Transform was developed to overcome few limitations of traditional Discrete Fourier Transform.

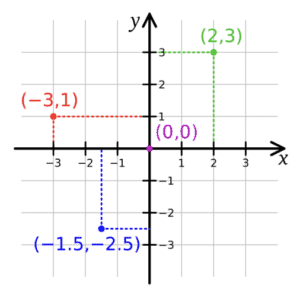

STFT provides a compromise between the time and frequency representations of the signal under analysis. Though STFT is a reasonably good tool for signal analysis, its ability to represent the signal in the presence of a non-stationary and time varying noise is poor.The below image gives an Cartesian coordinate system(xy coordinate system) where a single point in this co-ordinate system is denoted by (x,y) where x,y belongs to real numbers.

Similarly (Time amplitude) domain coordinate system is Time vs Amplitude.

What I mean is , from the table below we can see what X corresponds to and Y corresponds to in the specific coordinate system.

Name of Coordinate systemX (Horizontal axis)Y (Vertical axis)

1. CartesianAny quantitative variable (Real number)Any quantitative variable (Real number)

2. Time Amplitude domainTime(seconds)Amplitude(decibel)

3. Frequency Amplitude domainFrequency(hertz)Amplitude(decibel)

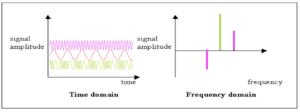

The diagram below can give you an insight of how Time Amplitude & Frequency Amplitude looks like

Now coming back to breaking down the STFT terminology.

STFT - FT stands for Fourier transform. So what it means is that it is transformation algorithm like Fourier but underneath a different logic lies .But how it is different?. Let see below.

STFT - Short Time . This means it takes a window of specific time and runs fourier transformation on that short time window.

Since Fourier transform is in Frequency Amplitude domain , we dont know anything about the progress of frequency along the time. Whether the frequency is starting higher at the beginning of audio or whether frequency is starting lower at the beginning of audio and gradually increasing.

We have zero knowledge about time in Fourier transform.Now comes the next question if we dont know exact time at which frequency is taken from the audio ,then how Fourier transform giving an output like below

So the answer is Fourier transform is taken for the whole audio .Note that the frequency amplitude diagram generated by the FT does not consider time explicitly. Instead, it represents the frequency content of the signal over the entire duration of the signal, or over the duration of the analyzed frame and average them and gives us.

"On the other hand, we can calculate multiple FTs(Fourier transform)" corresponding to multiple patches of audio, obtained by splitting the original clips at fixed intervals of time. These sets of FT will actually be more informative about the changes happening in the original clip because they represent the local information(In the particular time slot) correctly.

"So this is what STFT is doing." STFT divides a longer audio signal into shorter segments of equal length and then computes the Fourier transform separately on each shorter segment. The below diagram shows you how STFT happens step by step

You can see the above diagram shows you FFT (Fast Fourier Transform) instead of FT(Fourier Transform). Its same as Fourier transform but FFT is fast and efficient method to calculate the Fourier transform.

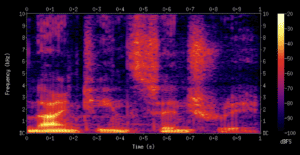

"The STFT computes the Fourier" Transform on small, overlapping time windows of the signal, allowing us to observe the frequency content at different points in time.This enables us to capture frequency over time information which was lacking in Fourier Transform. In other words, the STFT provides a time-frequency representation of the signal.The diagram below shows an example of how STFT looks like.And visualisation of STFT is called Spectrogram

The above diagram shows Time vs Frequency domain but do you wonder why they are coloured. Thats a catch here. They denote the magnitude. So the bright colours like red/yellow denotes high magnitude and dark colour like blue/purple denotes low magnitude. So technically STFT gives Time Frequency Amplitude information.

Understanding Mel-frequency cepstral coefficients (MFCCs)

MFCC stands for Mel Frequency Cepstral Coefficients. Mfcc is nothing but a fancy term to describe how audio can be represented as how humans perceive sound. Still not clear?. Let us dig deeper to know more about mfcc. We need some prerequisites before that.

- Power Spectrum

- Mel scale

- Mel band

1. What is power spectrum?

Lets break the terminology down. What is Spectrum first?.Spectrum is a Frequency vs Magnitude representation of a signal calculated by fourier transform.

What is Power spectrum then?

A power spectrum is similar to a spectrum, but it takes into account the fact that different individual frequency components may have different levels of power or strength. Power is just a measure of how much energy is contained in a particular frequency component of a sound signal.

Then what is spectrogram? Are Spectrum and Spectrogram related?

The answer is spectrogram is a Frequency vs Magnitude vs Time representation of a signal.Let's say you're listening to a recording of a dog barking. The power spectrum of the dog's bark would show you which pitches in the bark are the loudest or most powerful and which bark is softer.So, while a spectrum shows you the energy level of each pitch in a sound, a power spectrum shows you the power or strength of each pitch(ie frequency).

2. Mel scale:

The Mel scale(a non-linear scale) is a way to transform the raw frequencies audio that we hear into a scale that more closely matches the way our ears perceive sound. It does this by compressing the higher frequencies, which we are less sensitive to, and stretching out the lower frequencies, which we are more sensitive to.

3. Mel band:

Mel bands are groups of frequencies that are organized according to the Mel scale. Each band covers a range of frequencies that are close to each other on the Mel scale, and they are used to represent the different parts of the sound that we hear.

So, for example, you might have one Mel band that represents the low bass sounds like bass guitar, Jass guitar etc.., and another that represents the mid-range frequencies of the vocals like Human voice,violin etc.., and a third that represents the higher-pitched sounds of the cymbals in drumkit.

Now how Power spectrum, Mel band, Mel scale and MFCC is related?

Power Spectrum: To calculate MFCC, we first take the power spectrum of the sound.

Mel Bands: Then, we group the frequencies together into "mel bands". These mel bands are like different bins where we put the different frequencies, based on how important they are to us.

Log of Energy: Next, we take the log of the energy in each mel band. This helps us emphasize the differences between the energy levels.

Discrete Cosine Transform (DCT): Then we transform the data using a mathematical process called a Discrete Cosine Transform (DCT). This process reduces the number of dimensions in our data and makes it easier to work with.

The final result of this process is a set of MFCC values.

The advantage of using MFCCs over other spectral analysis techniques like FFT or STFT is that they capture more of the perceptual information of the sound and are less sensitive to background noise and channel distortions.

Now from the above 3 requisites lets see the steps to compute MFCCs:

- Pre-emphasis: The first step is to apply a pre-emphasis( a technique to protect against anticipated noise) filter to the signal, which amplifies the high-frequency components of the signal and reduces the low-frequency components. This helps to improve the signal-to-noise ratio and the overall quality of the feature extraction process.

- Framing: The signal is divided into small frames, typically 20-40 ms in duration, with a 50% overlap between adjacent frames. The frames are usually windowed with a Hamming window to reduce spectral leakage.

- Fourier Transform: A Fourier Transform is applied to each frame of the signal to convert it from the time domain to the frequency domain. This results in a power spectrum for each frame.

- Mel Filterbank: The power spectrum is passed through a Mel filterbank, which is a set of triangular bandpass filters that are spaced on the Mel frequency scale. The output of each filter is the energy within that frequency band. The diagram below shows you an example of Mel filter bank

- Logarithmic Scaling: The logarithm of the filterbank energies is taken to compress the dynamic range of the values and to mimic the non-linear response of the human ear to sound intensity.

- Discrete Cosine Transform (DCT): A DCT is applied to the logarithmic filterbank energies to decorrelate the features and to capture the spectral envelope of the signal. The DCT coefficients are called Mel Frequency Cepstral Coefficients (MFCCs).

Note(Cepstral is nothing but spectrum of a spectrum.Sounds bizarre right?. Cepstrum is defined as taking fourier transform of audio and takes the log of it and again applies inverse fourier transform on it)The output of the MFCC computation process is a set of MFCCs for each frame of the signal. The number of coefficients typically ranges from 10 to 40, depending on the application. The MFCCs are used as features in machine learning models for speech and audio processing tasks such as speaker identification, emotion recognition, and speech recognition.

Advantages of MFCCs over FFT and STFT:

- MFCCs are less sensitive to background noise and channel distortions, which makes them more robust for speech and audio processing tasks.

- They capture more of the perceptual information of the sound and are thus more useful for tasks that require human-like perception of sound.

- They are more compact than the raw spectral features obtained from FFT and STFT, which makes them more suitable for real-time applications.

Conclusion:

This blog has explored fundamental methods in audio signal processing—specifically, Short-Time Fourier Transform (STFT) and Mel Frequency Cepstral Coefficients (MFCC). We've seen how STFT breaks down audio into manageable segments to capture time-frequency information, contrasting with the holistic view provided by Fourier Transform. Moreover, MFCCs have emerged as a powerful tool, leveraging the human auditory system's characteristics to enhance the representation of audio data for classification tasks.

In our journey from Fourier Transform to STFT and MFCCs, we've delved into the nuances of each method, understanding their computational mechanics and practical applications in machine learning, particularly for audio classification on edge devices. Part-1 of this series introduced us to the basics of Fourier Transform, setting the stage for deeper exploration into STFT and MFCCs in Part-2.

To revisit Part-1 of this series and delve deeper into the foundations of audio signal processing, click here.

.avif)

.png)

.png)

.png)

.png)

.png)