Model Deployment Overview - AWS SageMaker vs Azure ML

TL'DR

Model deployment is an integral part of the ML Ops process. ML models need to be ‘productized’ for businesses to enable continual decision-making. In this blog, we are going to cover the steps for deploying the models in production for the Sepsis Early Onset Prediction Use Case. The model was trained and deployed in both the AWS and Azure Cloud Environments. The data source for the model was the pre-processed data used for the Sepsis Early Onset use case. We have structured this blog in such a way that for each step in the process, the corresponding activity in each of the cloud environments will be explained. The steps in the model deployment process are as follows:

AWS SageMaker

AWS SageMaker is a fully managed service that provides developers and data scientists with the ability to build, train, and deploy machine learning models at scale.

It offers a comprehensive set of tools for each step of the machine learning process, including data labeling, model training, hyperparameter optimization, and model hosting.

SageMaker simplifies the process of building and deploying machine learning models by providing pre-built algorithms, managed infrastructure, and integration with popular AWS services.

Azure ML

Azure Machine Learning (Azure ML) is a cloud-based service provided by Microsoft Azure that enables users to build, deploy, and manage machine learning models.

It offers a variety of tools and services for data scientists and developers to develop predictive analytics and machine learning solutions

AWS SageMaker VS Azure ML

1. Data Storage:

AWS Sage Maker

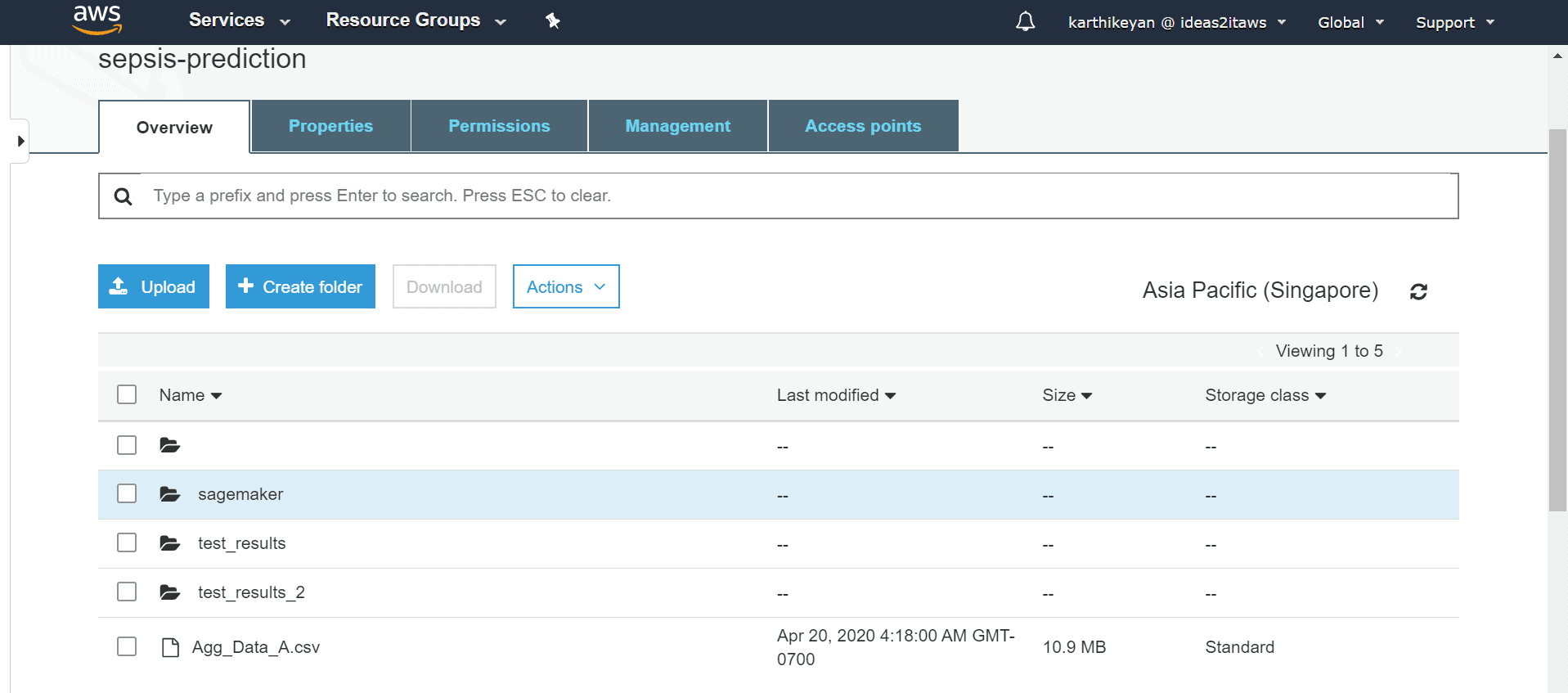

S3 bucket was used as the data storage medium and the input data was uploaded to the bucket.

Azure:

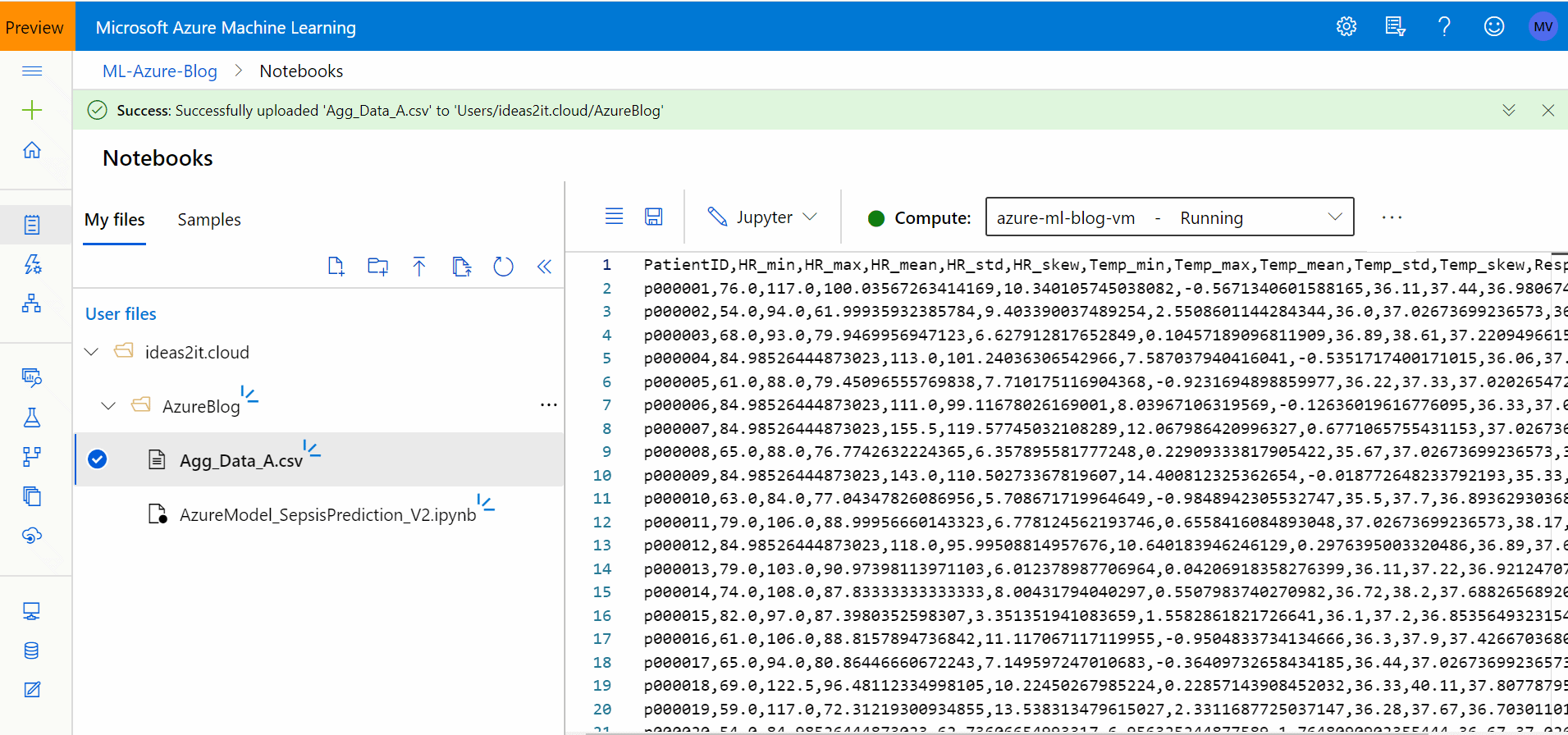

Once an Azure Machine Learning Resource was created, the notebooks section under the Azure ML Services Resource was launched. In this section, the Processed Data was uploaded under the specified folder.

In this stage, the ease of data access was the same for both the cloud environments. In AWS, data was accessed from the S3 Storage, whereas in Azure, the data was accessed from Blob Storage.

2. Instance Initiation:

AWS Sage Maker

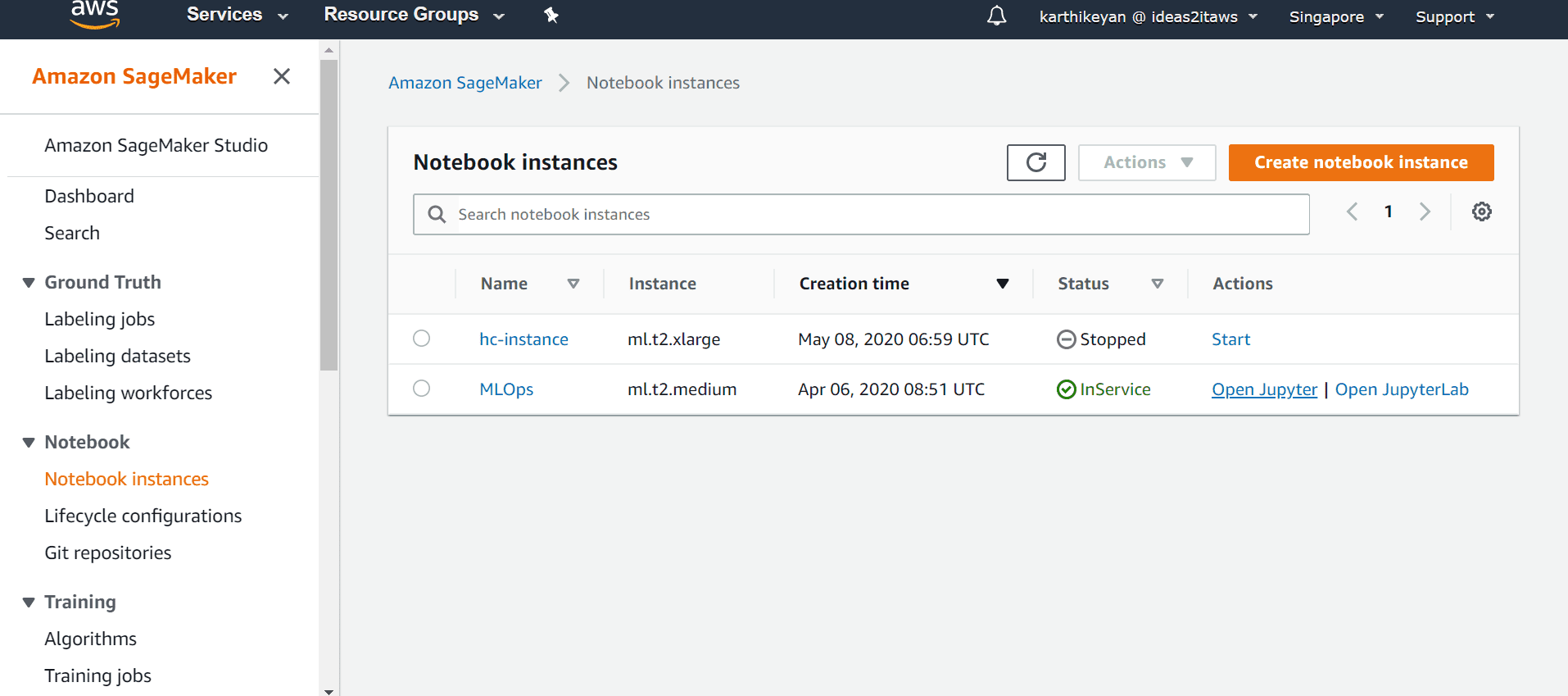

In the AWS Environment, a medium type Jupyter Notebook instance was created and inside of the Jupyter Notebooks - loading the ML specific packages, reading the data from S3 storage and running the ML Models were accomplished.

Azure:

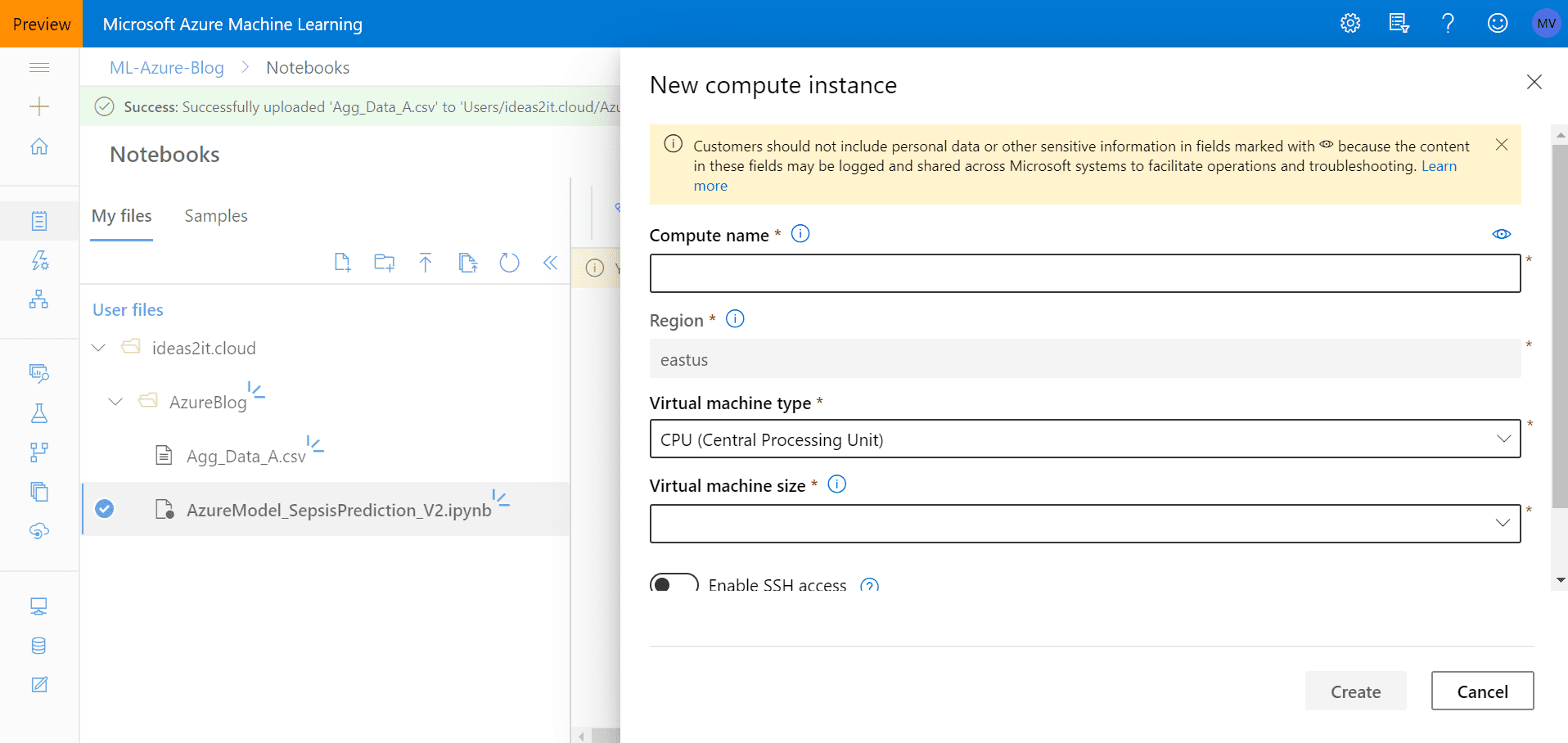

In the Azure Environment, a Dv2 compute instance was created and the created Notebook and Notebook file was saved under the folder in which the processed data was uploaded.

In the Instance Creation stage, it was intuitive to launch the instances in both environments and the usability and ease of creating an instance is the same for both the cloud environments.

3. Model Building:

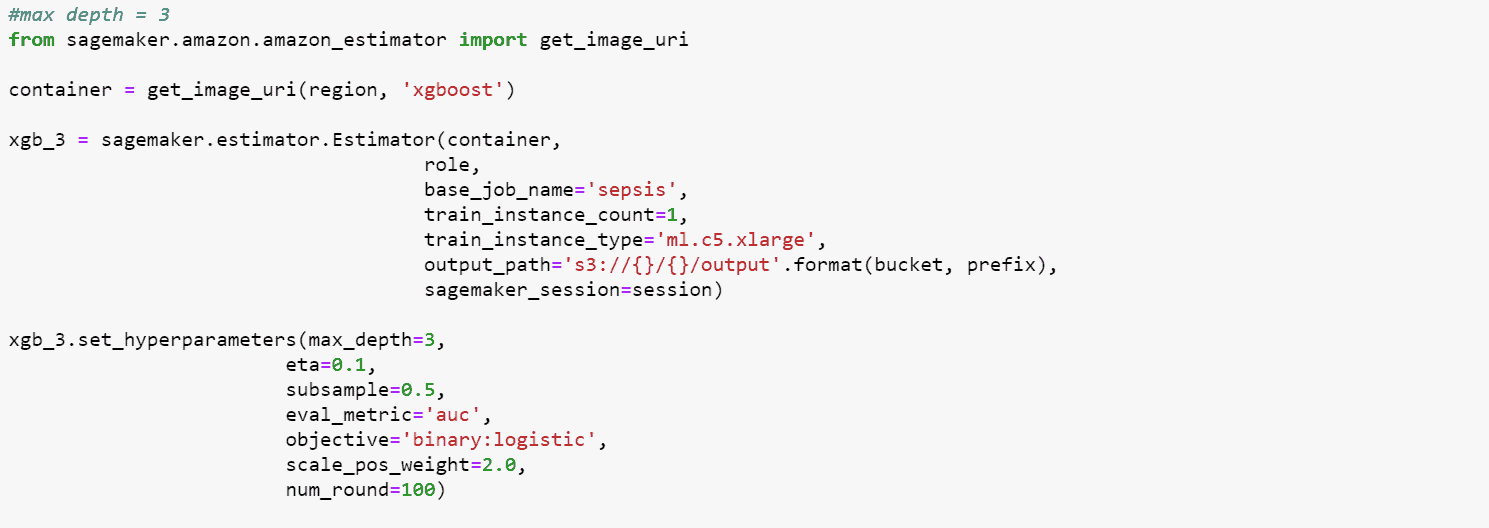

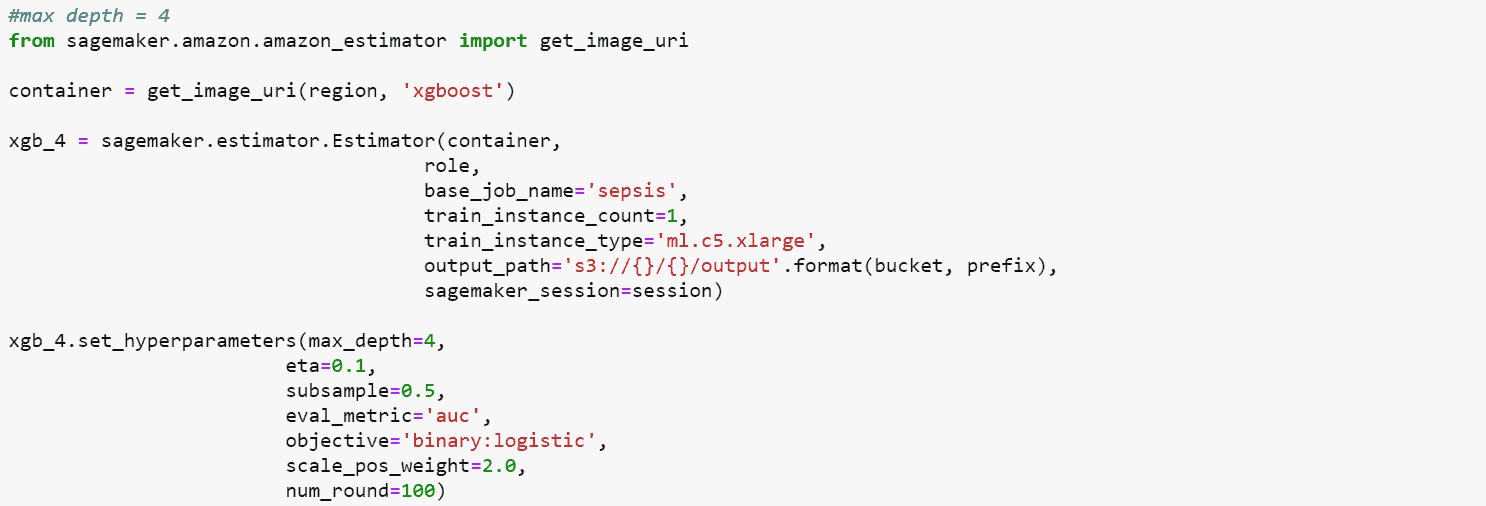

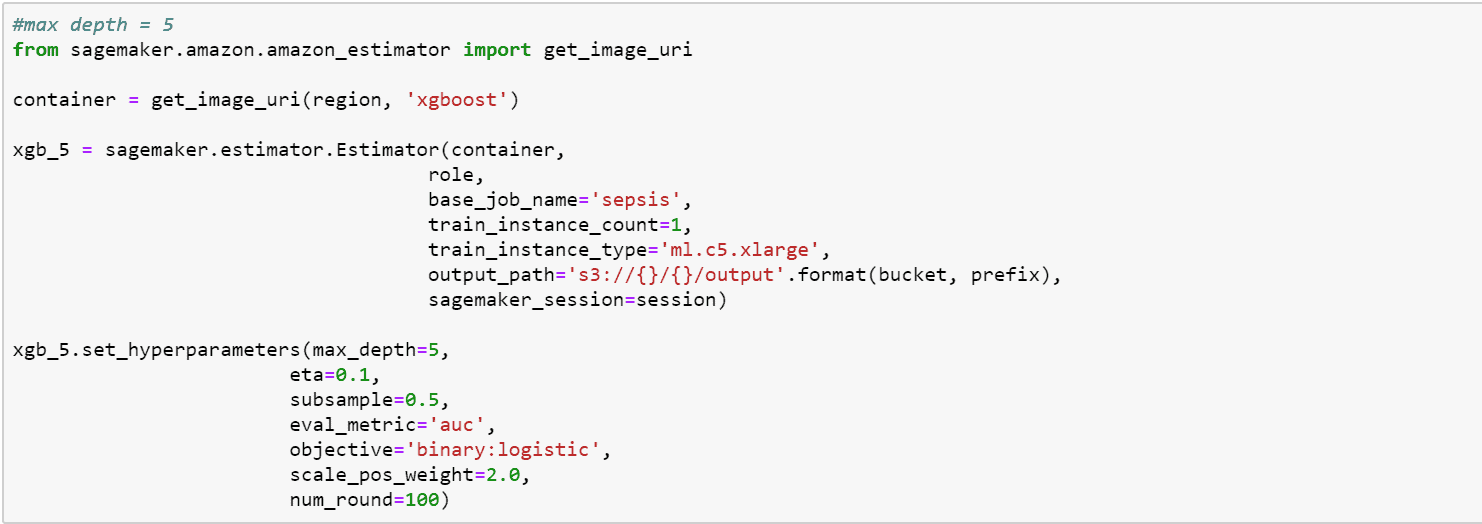

AWS Sage Maker:

SageMaker supports external ML Libraries as well as cloud-native ML Libraries. For this use case, we used the cloud-native ML library. XGBoost Algorithm of Sagemaker was used and its parameters were tuned and 3 variants of the model with depth = 3, 4 and 5 were built.

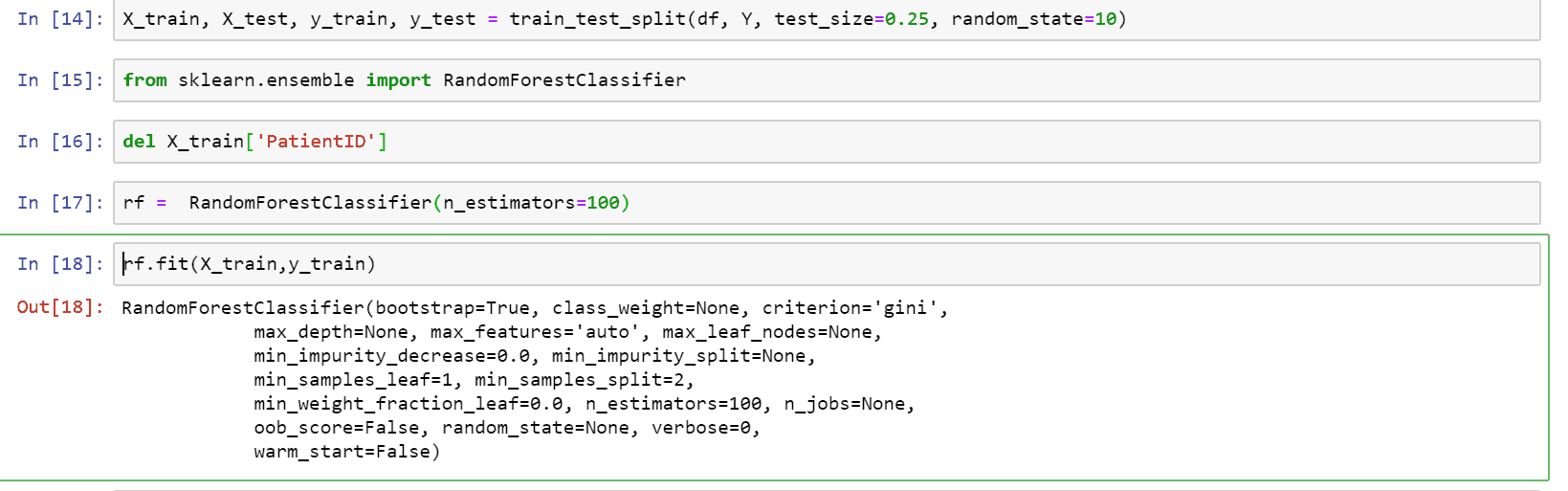

Azure:

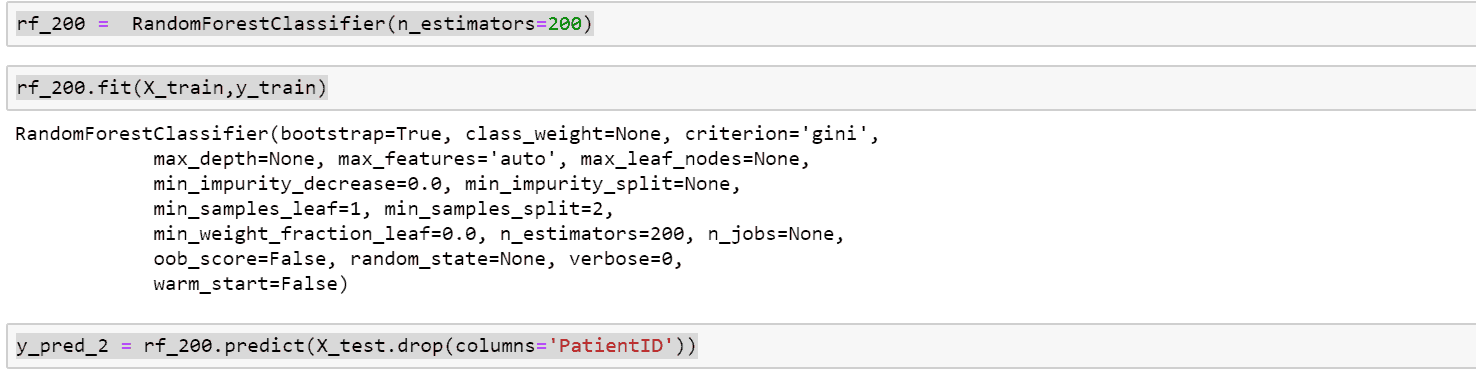

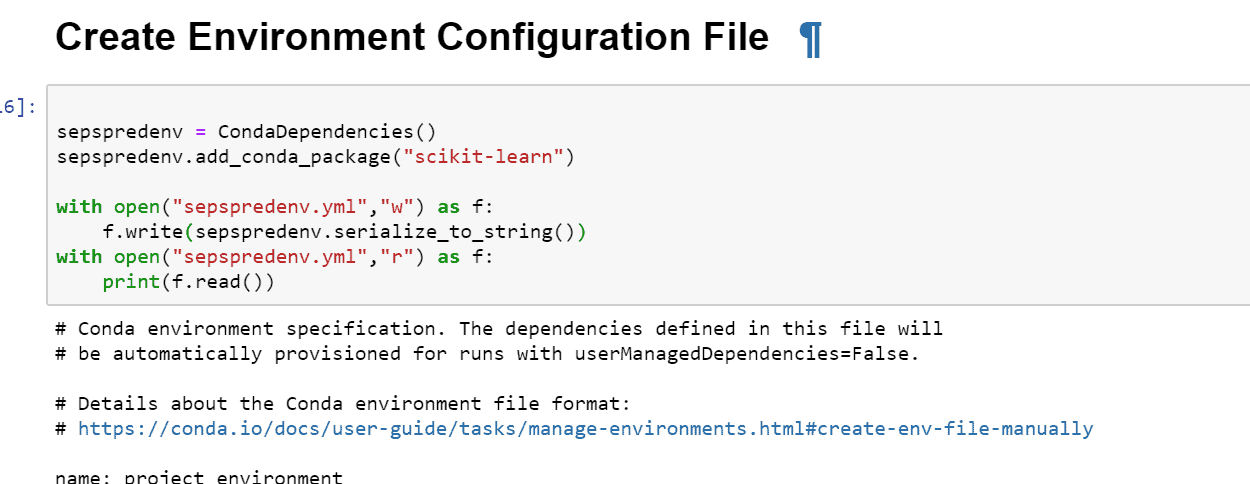

Azure ML supports external ML Libraries like sci-kit learn and for deployment in Azure, we had used the Random Forest Algorithm of Sci-kit learn ML Library with varying model parameters of 100,200 and 300 trees.Random Forest Model with 100 Trees

Random Forest Model with 200 Trees

Random Forest Model with 300 Trees

In the Model Building phase, Azure did a better job in terms of creating ML Models in terms of the complexity of building the models. Sklearn ML Library was made use of, to build the Random Forest Models. In AWS, to make use of Sklearn ML Library, we need to create a Docker Image and then used it. Hence we leveraged AWS’ native XGBoost Algorithm to build the ML Models.

4. Experiments Creation:

AWS Sage Maker:

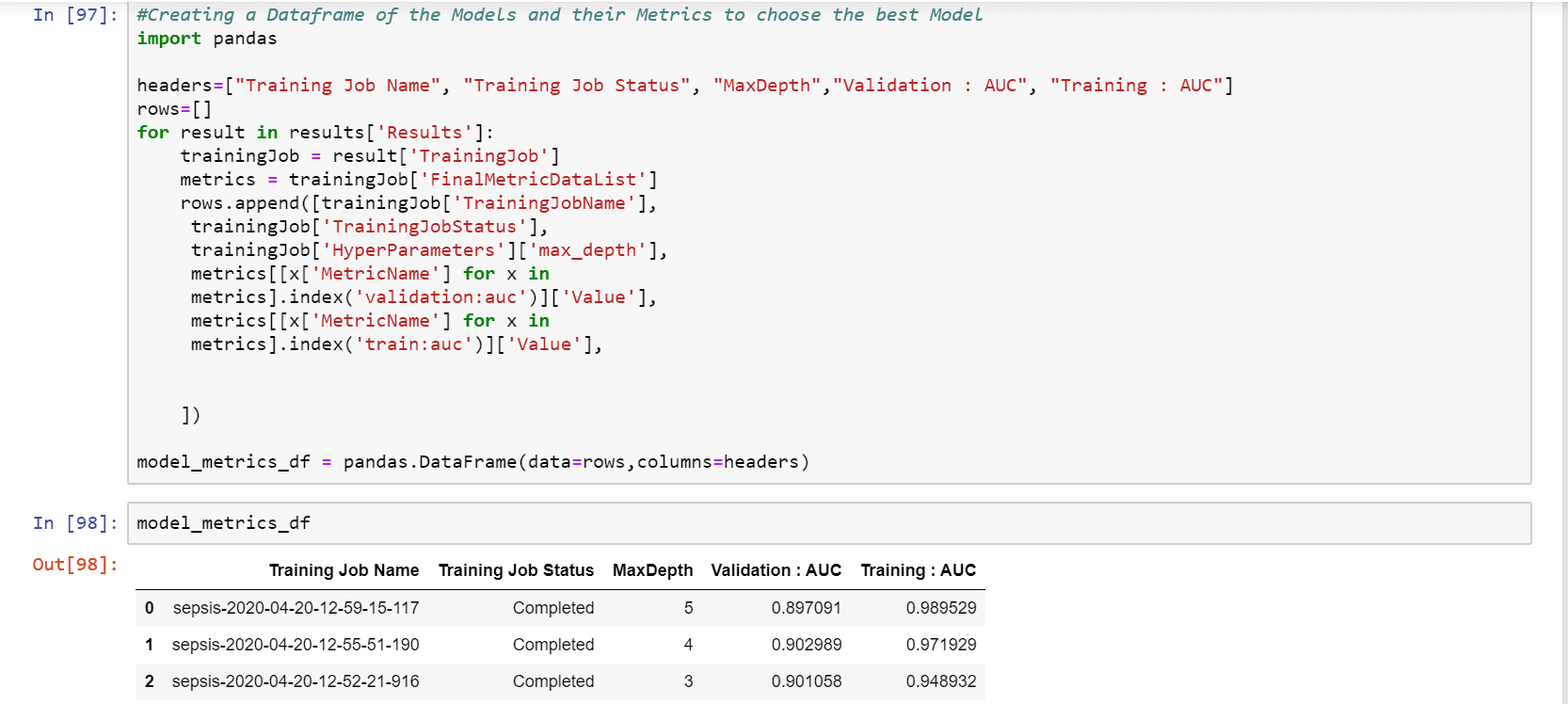

XGBoost Model with varying model parameters were built and for each ML Model that we built, the corresponding validation accuracy and auc value were logged.

Azure:

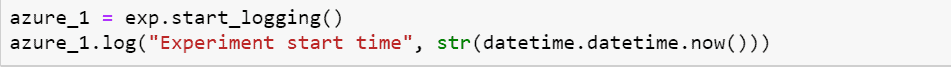

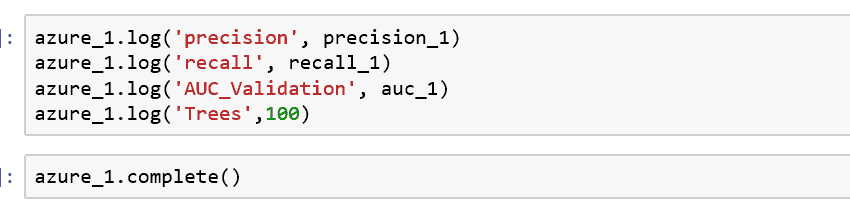

3 experiments were created with each experiment for different Random Forest Models with 100, 200 and 300 trees. For each experiment, the precision, accuracy and auc score were logged. The model evaluation metrics can be customized accordingly.

Both the environments are equally good with Experiments Creation and have APIs that support the creation process.

5. Experiment Evaluation:

AWS Sage Maker:

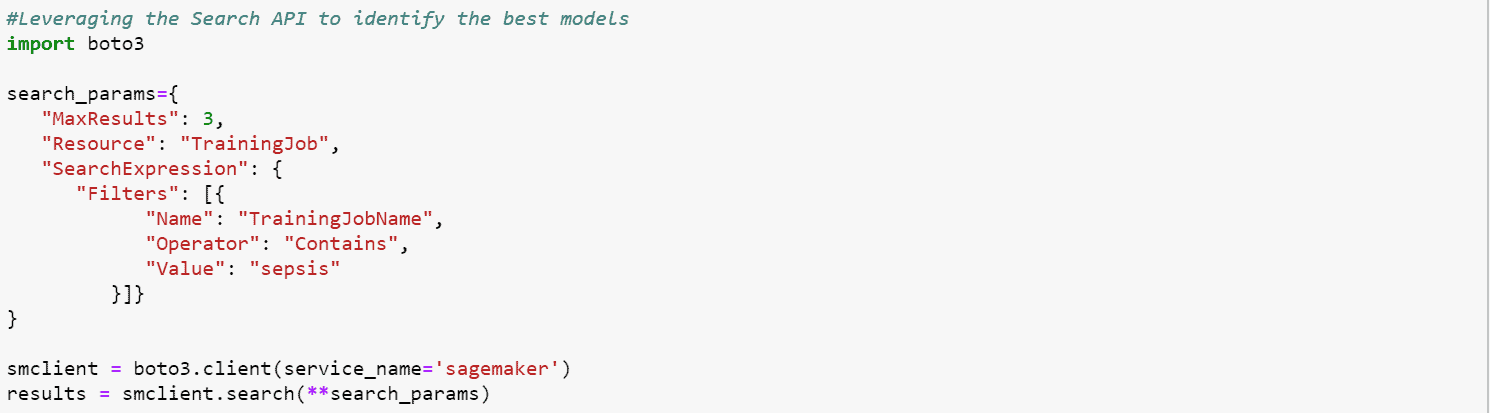

The Search API of SageMaker was used to track the experiments and the model with the highest AUC score - XGBoost with maximum depth size = 5 was chosen for deployment.

Azure:

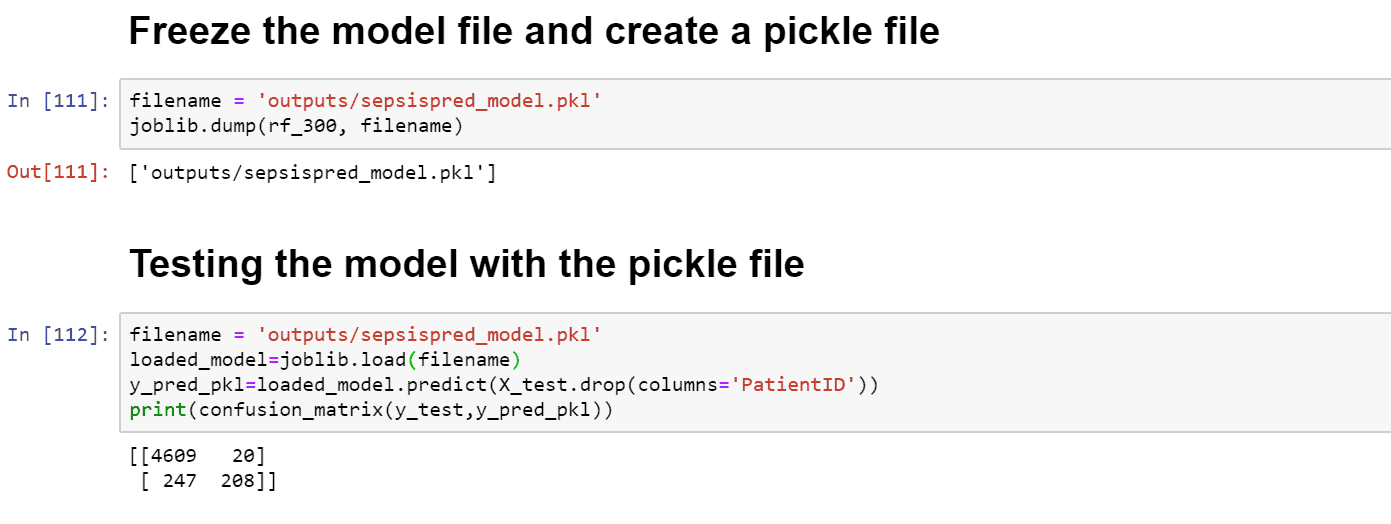

A Data Frame of the experiments’ model metrics was created in the Notebook Instance and the best model was identified. The Random Forest Model with 300 trees gave the highest accuracy and this was used for deployment.

Both the environments are equally good with the Experiments Evaluation process. Sagemaker makes use of the Search API and Azure makes use of the ML Flow API to pick and choose the best experiments. The Model Metrics can be customized in both of these environments.

6. Model Deployment:

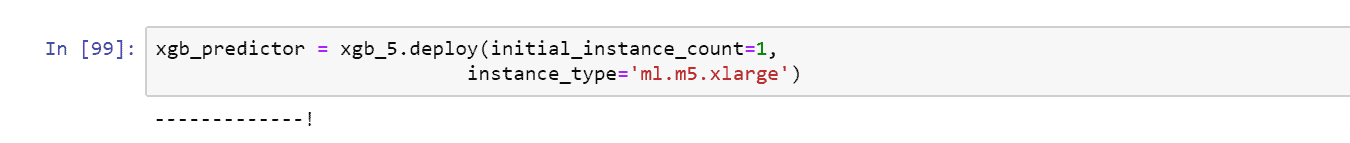

AWS Sage Maker:

An Endpoint was created in the Jupyter Notebook after identifying the best model to be deployed. The Endpoint can be invoked to make a prediction call on an unknown dataset at any time until the endpoint is deleted.

Azure:

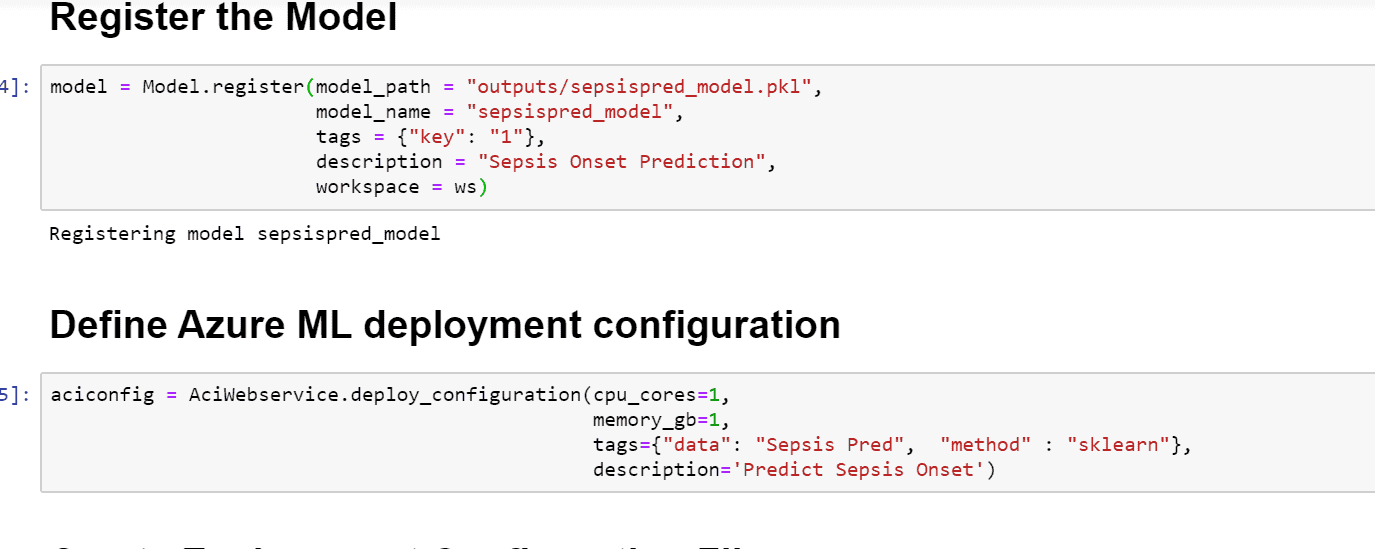

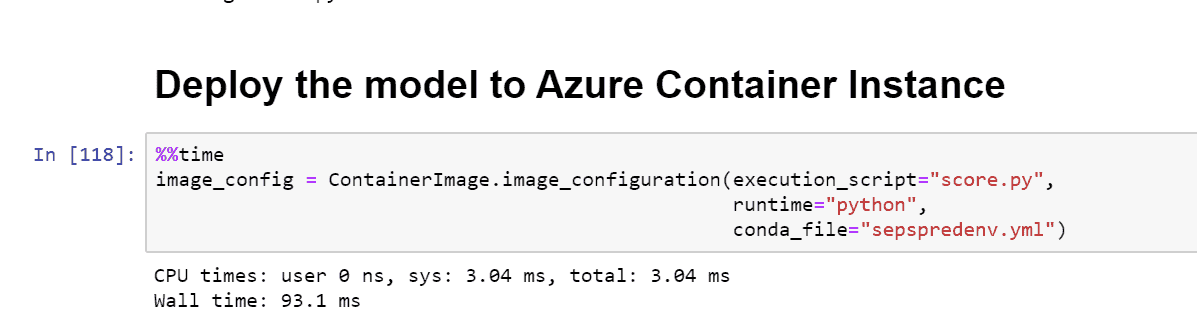

The Random Forest Model was saved as a pickle file in the specified path. The saved model was then registered and packaged as an image inside the azure environment itself. The Model Image was then exposed as a web API to run the on sample test records to test its usage.

To summarize the Model Deployment process in both the Cloud Environments, it was easier to deploy the model in the Azure Environment as native Machine Learning libraries were used, without the need of creating external docker images.

.avif)

.png)

.png)

.png)

.png)

.png)