AI Adoption Frameworks That Scale: Proven Strategies from Healthcare and Beyond

TL;DR

- Most AI initiatives fail not because of technical limitations, but because organizations underestimate the operational, cultural, and regulatory complexity surrounding AI deployment.

- Healthcare, as the most unforgiving environment, reveals what truly resilient AI frameworks must address: strategic alignment, data integrity, governance by design, operationalization discipline, and cultural adaptation.

- Winning in AI requires building systems that are resilient by design, not as an afterthought.

Executive Summary

This blog offers a blueprint for building AI adoption frameworks that can survive real-world complexity, starting with healthcare and extending to all industries. Key takeaways include:

- Why AI fails: The root causes go beyond technical performance into operational, regulatory, and cultural friction.

- What resilient frameworks require: Strategic alignment, trusted data pipelines, governance architectures, MLOps discipline, and engineered cultural adoption.

- Why healthcare is the ultimate stress test: High stakes, low error tolerance, shifting regulations, and inflexible workflows expose weak frameworks immediately.

- How to operationalize risk: Quantification, prioritization, real-time monitoring, and embedded escalation pathways are mandatory.

- Extending frameworks for GenAI: Structured prompt management, validation layers, IP protections, and continuous human oversight.

- Winning strategies: Organizations that build frameworks engineered for resilience will own the future of AI at scale.

Introduction: Why Most AI Efforts Stall Before They Scale

The myth of AI failure is that it’s a technical problem. That algorithms weren’t accurate enough. That models couldn't generalize.

In reality, most AI initiatives don't fail because of technical shortcomings. They fail because enterprises underestimate everything else:

- The gravitational pull of legacy workflows.

- The operational chaos AI quietly exposes.

- The cultural inertia that slows change to a crawl.

- The regulatory minefields hidden inside deployment pipelines.

Healthcare doesn’t just expose AI weaknesses. It amplifies them under the harshest real-world conditions.

Here, AI isn't just another tool. It makes decisions that touch human lives, and when it fails, the consequences are immediate, visible, and irreversible.

That’s why, despite nearly a decade of AI breakthroughs, fewer than 15% of healthcare organizations have successfully operationalized AI at scale (Bain & Company, 2024).

Recent enterprise studies mirror this trend more broadly: a 2025 report by S&P Global Market Intelligence found that 42% of businesses have scrapped most of their AI initiatives, and that 46% of AI proof-of-concepts are abandoned before reaching production.

And healthcare is a warning.

If AI frameworks can’t survive healthcare’s complexity, volatility, and accountability pressures, they won’t survive anywhere.

Scaling AI isn’t about building better models. It’s about building better systems around those models: systems that are resilient, governable, auditable, and human-centered by design.

This blog lays out how to build those systems:

Not through buzzwords, but through the hard, necessary work of architecting AI adoption frameworks that work for healthcare, and for every sector where the cost of getting it wrong is too high.

The Real Problem Is Not Just the Model

When IBM Watson Health promised to revolutionize cancer care with AI, the pitch was impeccable: faster diagnoses, tailored treatment plans, global scalability.

The reality, however, was brutal.

- The models were trained on narrow datasets, missing the diversity of real-world clinical cases.

- The outputs were hard to interpret by oncologists accustomed to nuanced, case-by-case judgments.

- The integration into clinical workflows added friction instead of removing it.

By 2022, IBM had sold off Watson Health’s core data and analytics assets after sinking billions into the effort. The problem wasn’t that the AI couldn’t work. It couldn’t work inside the existing healthcare system.

This is the true pattern of AI failure:

- Models that perform well in controlled environments collapse in operational chaos.

- "Successful" pilots that don't survive contact with real users, real regulations, and real incentives.

- Organizations optimizing for AI demos rather than AI systems.

In every case, what’s missing isn't technical capability. It's a lack of comprehensive frameworks that account for:

- How AI fits (or conflicts) with legacy workflows.

- How human users trust (or resist) AI outputs.

- How systems capture (or miss) regulatory, ethical, and operational risks.

A model might achieve 98% accuracy in the lab. But if it can't handle the 2% chaos of real life, then it’s dead on arrival.

Frameworks are the only way to bridge this gap.

Key Takeaway: Even accurate models fail if the system around them isn't built to absorb operational risk, user behavior, and regulatory complexity.

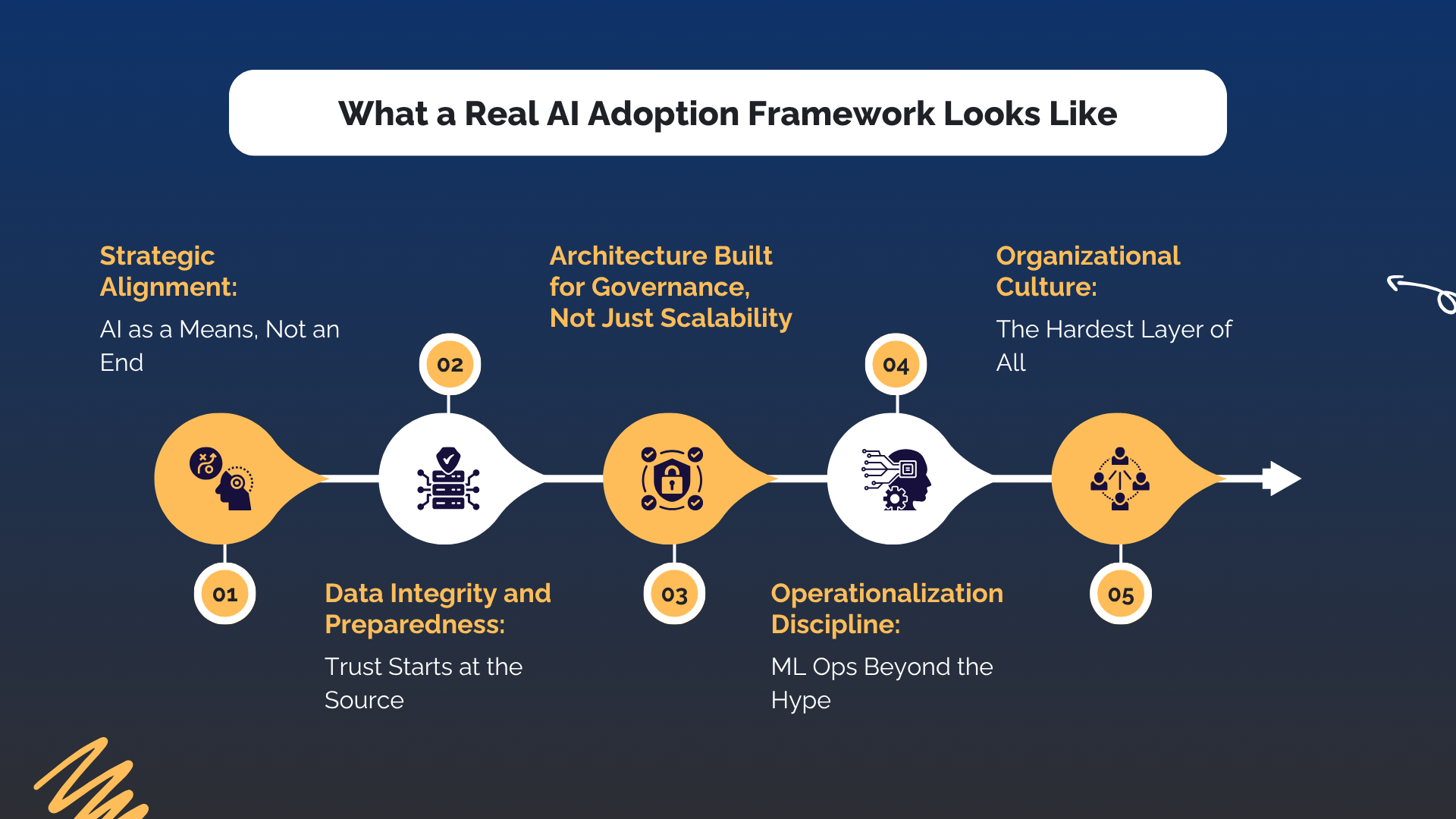

What a Real AI Adoption Framework Looks Like

Real frameworks don't start with technology choices. They start with brutal, honest questions:

- What organizational frictions will kill this AI solution?

- Where will human trust break down?

- Which regulatory tripwires are embedded in this workflow?

From there, a real framework addresses five intertwined layers.

1. Strategic Alignment:

The first failure point in most AI projects is strategic misalignment. Organizations chase AI because it's trendy, not because it directly advances their core mission.

In healthcare, this mistake is fatal. If an AI system can't demonstrably improve patient outcomes, reduce clinical workload, or increase system resilience, it doesn't matter how accurate it is. It will die in pilot purgatory.

Real frameworks tie every AI initiative to high-stakes, measurable enterprise goals and make those goals visible to everyone from the C-suite to the last-mile user.

2. Data Integrity and Preparedness:

AI is only as good as its data. But in healthcare, manufacturing, and BFSI (banking, financial services, and insurance), data is messy, fragmented, and biased in invisible ways.

Frameworks must:

- Validate dataset representativeness across sites, demographics, and systems.

- Audit for hidden biases before model training, not after deployment.

- Build traceable, regulatory-compliant data provenance pipelines.

Without this, AI systems risk systemic, undetectable harm.
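As a minimal sketch of the first check in that list, the snippet below compares how each site is represented in a training set against the mix expected in deployment. The site names, the reference shares, the `tolerance` value, and the function names are all illustrative assumptions, not part of any specific toolkit.

```python
from collections import Counter


def share_by_group(records, key):
    """Return each group's share of the records, e.g. {"hospital_a": 0.6}."""
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def representativeness_gaps(train_records, reference_shares, key, tolerance=0.10):
    """Flag groups whose training share differs from the reference share
    by more than `tolerance` (absolute difference in proportion)."""
    train_shares = share_by_group(train_records, key)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = train_shares.get(group, 0.0)  # a missing group counts as 0%
        if abs(observed - expected) > tolerance:
            gaps[group] = {"observed": observed, "expected": expected}
    return gaps


# Hypothetical training set: 6 records from hospital_a, 3 from b, 1 from c.
train = (
    [{"site": "hospital_a"}] * 6
    + [{"site": "hospital_b"}] * 3
    + [{"site": "hospital_c"}] * 1
)
# Hypothetical deployment mix the model is expected to serve.
reference = {"hospital_a": 0.4, "hospital_b": 0.3, "hospital_c": 0.3}

gaps = representativeness_gaps(train, reference, key="site")
for group, detail in gaps.items():
    print(group, detail)
```

The same comparison can be repeated for any demographic or system-of-origin field. A real pipeline would likely add statistical tests and minimum sample sizes per group.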

3. Architecture Built for Governance:

Anyone can deploy a model. Few can govern one at scale.

Real frameworks design architectures that:

- Embed explainability and interpretability into core data flows.

- Enable seamless human oversight at critical decision points.

- Secure personally identifiable information (PII) and regulated data by design, not as a patchwork fix.

If governance is retrofitted, failure is inevitable.

4. Operationalization Discipline:

Deploying AI isn't a one-time event. It's a continuous lifecycle:

- Monitoring model drift.

- Managing performance degradation.

- Auditing outcomes against real-world baselines.

- Retraining against evolving datasets and regulations.

Without a living MLOps engine, models die the slow death of irrelevance.
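One common way to put a number on drift is the Population Stability Index (PSI), which compares how a feature or model score was distributed at training time with how it is distributed in production. The sketch below is one possible implementation using only the standard library. The bin counts are hypothetical, and the 0.10 and 0.25 cut-offs are a widely used rule of thumb rather than anything prescribed here.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between two binned distributions.

    Both arguments are lists of counts over the same bins. `eps` keeps
    empty bins from producing log(0) or division by zero.
    """
    e_total = sum(expected_counts)
    a_total = sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_share = max(e / e_total, eps)
        a_share = max(a / a_total, eps)
        score += (a_share - e_share) * math.log(a_share / e_share)
    return score


def drift_status(score, warn=0.10, alert=0.25):
    """Map a PSI score to a status label (thresholds are assumptions)."""
    if score >= alert:
        return "alert"
    if score >= warn:
        return "warn"
    return "stable"


# Hypothetical risk-score histogram at training time vs. two production windows.
baseline = [25, 25, 25, 25]
mild_shift = [10, 20, 30, 40]
heavy_shift = [5, 5, 10, 80]

for name, window in [("mild", mild_shift), ("heavy", heavy_shift)]:
    print(name, drift_status(psi(baseline, window)))
```

In practice the status would feed an alerting or retraining workflow rather than a print statement, and the check would run per feature and per site.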

5. Organizational Culture:

Technical success means nothing if frontline users reject the system.

Frameworks must:

- Build AI literacy programs for non-technical stakeholders.

- Create escalation pathways for ethical, operational, and trust issues.

- Treat human acceptance as a core KPI, not a soft metric.

Culture will not magically adapt to AI. Effective frameworks proactively engineer this adaptation.

Healthcare: The Ultimate Stress Test for AI Frameworks

Healthcare shatters fragile frameworks.

Here's why:

- Data Drift is Inevitable: Clinical practices vary drastically between hospitals, departments, even individual physicians. A model trained on Hospital A's records may misfire spectacularly at Hospital B.

Stanford Medicine-led research further highlights this fragility: AI diagnostic systems that demonstrated over 90% accuracy in controlled lab environments dropped to 72% accuracy when applied across real-world clinical settings. (Source)

- Decision Contexts Are Life-or-Death: A minor AI misjudgment in retail leads to an out-of-stock item. In healthcare, it can lead to patient harm. Tolerances for error are brutally low.

- Workflows Are Inflexible: Clinicians operate under extreme time, regulatory, and procedural pressures. If an AI tool adds cognitive overhead instead of reducing it, it will be ignored or resisted no matter how accurate.

- Regulations Are Moving Targets: Compliance today doesn't guarantee compliance tomorrow. GDPR, HIPAA, FDA SaMD guidelines, and new AI regulations create a constantly shifting baseline.

- Ethical and Societal Expectations Are Higher: Patients’ rights, data privacy, fairness, transparency aren't just compliance checkboxes in healthcare. They're existential requirements.

Most industries can tolerate AI growing pains. Healthcare cannot. For more on how AI is transforming clinical environments, read our AI in healthcare overview.

If an AI adoption framework can survive healthcare’s complexity, scrutiny, and volatility, it can survive anywhere.

Healthcare’s unforgiving environment reveals a deeper truth: in high-stakes domains, operationalizing AI risk is existential.

Operationalizing Risk:

Most organizations treat AI risk as an abstract fear, something to be "monitored" after deployment.

That mindset guarantees failure.

Real AI adoption frameworks operationalize risk upfront: before the first model is trained, before the first dataset is labeled, before the first prototype is deployed.

Because in industries like healthcare, BFSI, and manufacturing, risk isn't theoretical. It's a clinical liability, a regulatory violation, and a systemic collapse of trust.

According to a World Economic Forum analysis, many AI failures in regulated industries could have been prevented through proactive risk governance frameworks. (Source)

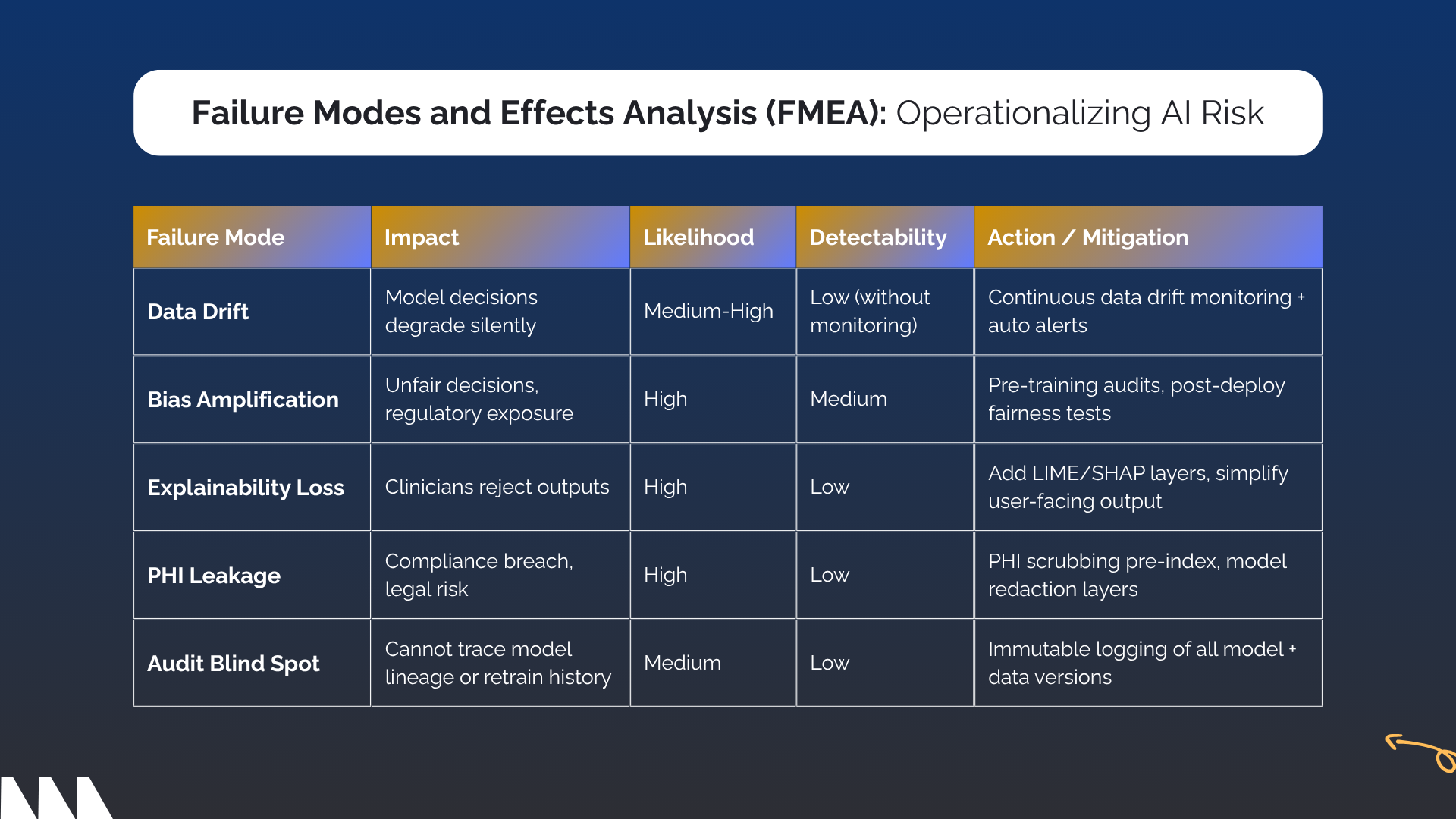

How Risk Quantification Actually Works

At the core of operational AI risk management is Failure Modes and Effects Analysis (FMEA), a methodology adapted from aerospace and critical systems engineering.

In an AI context, FMEA means systematically asking:

- What can go wrong at every stage?

- How severe is the impact?

- How likely is this failure to occur?

- How easy would it be to detect if it does?

Each failure mode is scored, prioritized, and linked to mitigation actions before models reach production.
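In classic FMEA, the three scoring questions above are usually combined into a Risk Priority Number (RPN): severity × occurrence × detection, each rated on a 1 to 10 scale. The sketch below applies that convention to a few AI failure modes. The failure modes, scores, and mitigations are invented for illustration, and the `FailureMode` class is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    name: str
    severity: int    # 1 (negligible impact) .. 10 (catastrophic)
    occurrence: int  # 1 (rare) .. 10 (frequent)
    detection: int   # 1 (almost always caught) .. 10 (almost undetectable)
    mitigation: str

    @property
    def rpn(self) -> int:
        """Risk Priority Number: higher means more urgent."""
        return self.severity * self.occurrence * self.detection


def prioritize(modes):
    """Return failure modes ordered from highest to lowest RPN."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)


# Illustrative entries only; real scores come from a cross-functional review.
modes = [
    FailureMode("Model drift after an EHR upgrade", 7, 5, 4,
                "Scheduled drift checks with retraining triggers"),
    FailureMode("Training data under-represents a patient group", 9, 6, 7,
                "Representativeness audit before training"),
    FailureMode("Clinicians ignore alerts due to alert fatigue", 8, 7, 5,
                "Usability testing and alert-rate limits"),
]

ranked = prioritize(modes)
for mode in ranked:
    print(f"{mode.rpn:>4}  {mode.name} -> {mode.mitigation}")
```

Note that the detection scale is inverted on purpose: a failure that is hard to notice scores high, so silent failures rise to the top of the list.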

Failure Modes and Effects Analysis (FMEA): Operationalizing AI Risk

From Quantification to Execution: Embedding Risk into the Framework

Scoring risks is only the start. Real frameworks restructure workflows to manage those risks continuously:

- Risk Control Gates: No model moves from dev to prod without passing quantifiable risk thresholds.

- Continuous Monitoring Systems: Drift detection, bias revalidation, and explainability degradation tracking running in real time.

- Human Escalation Loops: If systems flag anomalies, human oversight takes precedence immediately.

- Red-Teaming Exercises: Actively attacking your own models before external attackers or auditors do.

- Pre-Mortems, Not Post-Mortems: For every deployment, assume failure and map how, why, and when it could occur.

Risk operationalization isn't bureaucracy. It's survivability.

In healthcare, it isn't just about staying compliant. It's about protecting lives.

In BFSI, it's about protecting institutions. In manufacturing, it's about protecting mission-critical supply chains.
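A risk control gate of the kind described above can be as simple as a function that refuses promotion unless every tracked metric clears its threshold. This is a rough sketch: the metric names, the threshold values, and the `evaluate_gate` function are assumptions chosen for illustration, and each organization would define its own.

```python
# Hypothetical thresholds: ("min", x) means the metric must be at least x,
# ("max", x) means it must not exceed x.
GATE_THRESHOLDS = {
    "auroc": ("min", 0.85),
    "subgroup_auroc_gap": ("max", 0.05),
    "psi": ("max", 0.10),
}


def evaluate_gate(metrics, thresholds=GATE_THRESHOLDS):
    """Return (passed, failures) for a candidate model's metrics.

    A missing metric counts as a failure, so a model cannot pass the
    gate simply because a check was never run.
    """
    failures = []
    for name, (kind, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif kind == "min" and value < limit:
            failures.append(f"{name}: {value} below minimum {limit}")
        elif kind == "max" and value > limit:
            failures.append(f"{name}: {value} above maximum {limit}")
    return (not failures), failures


# Hypothetical candidate: strong overall, but uneven across subgroups.
candidate = {"auroc": 0.91, "subgroup_auroc_gap": 0.08, "psi": 0.04}

passed, failures = evaluate_gate(candidate)
if not passed:
    # In a real pipeline this would block promotion and notify a human reviewer.
    print("Gate failed:", failures)
```

Wiring a check like this into the deployment pipeline turns the gate from a policy statement into something a release cannot skip.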

Building Regulatory Audit Readiness into the AI Framework

AI governance isn't only an internal discipline. It's about external survival.

Healthcare, BFSI, and critical industries operate under an expanding thicket of regulatory regimes: HIPAA, GDPR, FDA SaMD for AI/ML-enabled medical devices, the EU AI Act, state-level privacy laws, and more.

Every model deployed without embedded auditability is a compliance failure waiting to happen.

The New Reality: Audit Readiness by Design

Auditability can’t be retrofitted. Real AI frameworks must:

- Document Every Decision: From data labeling rules to model hyperparameters, from bias testing protocols to retraining thresholds.

- Maintain Immutable Lineage: Who touched the data? Who approved the model? When was it retrained? Immutable, tamper-evident logs are non-negotiable.

- Operationalize Explainability: Provide real explanations, mapped to real user needs (e.g., clinician-facing interpretability, patient-facing transparency).

- Simulate Audit Scenarios: Pre-launch mock audits stress-test documentation, model outputs, bias handling, and data governance practices.

- Trigger Automatic Escalations: If explainability drops, bias indicators rise, or retraining events occur outside approved windows, the system must flag and escalate.
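To make the "tamper-evident logs" requirement concrete, here is a small sketch of a hash-chained audit log: each entry stores the hash of the previous one, so editing any past entry breaks the chain. The `AuditLog` class and the event fields are hypothetical. A production system would also need durable, access-controlled storage, which this in-memory example does not provide.

```python
import hashlib
import json


def _entry_hash(prev_hash, event):
    """Hash the previous entry's hash together with this event's content."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def append(self, event):
        """Add an event (a JSON-serializable dict) to the end of the chain."""
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        self.entries.append(
            {"event": event, "prev": prev, "hash": _entry_hash(prev, event)}
        )

    def verify(self):
        """Recompute every hash; return False if any entry was altered."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev:
                return False
            if entry["hash"] != _entry_hash(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append({"actor": "data_steward", "action": "approved_dataset", "version": "v3"})
log.append({"actor": "ml_lead", "action": "approved_model", "version": "1.4.0"})
log.append({"actor": "pipeline", "action": "retrained", "version": "1.4.1"})

intact_before = log.verify()

# Simulate someone rewriting history after the fact.
log.entries[1]["event"]["actor"] = "someone_else"
intact_after = log.verify()

print(intact_before, intact_after)
```

This makes tampering detectable, not impossible. Pairing the chain with write-once storage or an external timestamping service is what moves it toward true immutability.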

Key Takeaway: Without a clear, structured approach to AI failure modes, enterprises scale fragility instead of intelligence.

Healthcare’s Shifting Standard

The FDA’s Good Machine Learning Practice (GMLP) guiding principles and its evolving SaMD guidelines signal one thing: Auditability will no longer be a "nice to have." It will define which AI solutions survive regulatory scrutiny and which get pulled from the market.

In financial services, the SEC’s interest in AI-driven discrimination risk adds even more urgency.

In this future, the organizations that win won’t be the ones with the flashiest AI demos.

They’ll be the ones that can prove instantly, incontrovertibly, and repeatably that their AI is fair, explainable, secure, and compliant.

Frameworks built without auditability will risk operational shutdown.

As AI frameworks mature, a new frontier emerges: Generative AI, with its own uniquely complex risks.

Generative AI: New Risks, New Framework Extensions

Generative AI (GenAI) isn't just another model type. It's an entirely new risk surface.

Large language models, image generators, and multimodal AI systems introduce failure modes traditional frameworks barely account for:

- Hallucinations: Confidently incorrect outputs that can mislead clinicians, underwriters, or operations teams.

- Intellectual Property Risk: Copyrighted material in the training data can leak into generated outputs.

- Misinformation at Scale: Incorrect medical advice, fabricated clinical notes, or biased financial recommendations.

- Explainability Black Holes: Outputs that are harder or impossible to fully trace back to deterministic features.

The clinical stakes are already evident: Emerging studies in 2025 found that over 90% of clinicians reported encountering hallucinated outputs from AI models in practice, and 84.7% believed these could negatively impact patient safety. (Source)

Mini-Case:

A leading healthcare provider partnered with Ideas2IT to develop a GenAI-powered document comprehension engine for clinical notes, discharge summaries, and diagnosis reports. By combining PHI redaction, LangChain query routing, FAISS vector storage, and the Vicuna-13b model, the solution cut information retrieval time for RCM teams by 50%, speeding up care delivery and reducing administrative burden.

Framework Adaptations for GenAI

To survive GenAI, AI frameworks must extend beyond traditional controls:

- Structured Prompt Management: Governance around what prompts are allowed, logged, and monitored.

- Human-in-the-Loop by Default: No unaudited GenAI outputs reaching end users, especially in critical systems.

- Post-Generation Validation Layers: Independent verification of generated content against source-of-truth databases.

- IP Protection Pipelines: Filters to detect, flag, and quarantine outputs that may violate copyright, data privacy, or compliance standards.

- Bias and Toxicity Stress Testing: Red-teaming GenAI outputs with adversarial prompts to uncover edge-case failures.
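The validation and human-in-the-loop controls above can be combined into a single release decision. The sketch below assumes that structured claims (for example, a medication and dose) have already been extracted from the generated text, which is a hard problem in its own right and out of scope here. The field names, statuses, and function names are illustrative assumptions.

```python
def find_discrepancies(generated_claims, source_record):
    """Compare claims extracted from generated text with the record of truth."""
    issues = []
    for field, value in generated_claims.items():
        if field not in source_record:
            issues.append(f"{field}: not found in source record")
        elif source_record[field] != value:
            issues.append(
                f"{field}: generated {value!r}, source has {source_record[field]!r}"
            )
    return issues


def release_decision(issues, reviewer_approved=False):
    """Decide what happens to a generated output.

    Any discrepancy blocks the output. Even a clean output waits for
    a human reviewer, so nothing reaches end users unaudited.
    """
    if issues:
        return "blocked"
    return "released" if reviewer_approved else "pending_review"


# Hypothetical source-of-truth record and claims pulled from a generated summary.
source = {"medication": "metformin", "dose_mg": 500, "allergy": "penicillin"}
claims = {"medication": "metformin", "dose_mg": 850}

issues = find_discrepancies(claims, source)
print(release_decision(issues), issues)
```

Defaulting `reviewer_approved` to `False` is the design choice that matters: the safe path is the one taken when nobody does anything.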

Without these extensions, GenAI will simply accelerate organizational risk, producing polished-looking outputs with hidden landmines inside.

For a practical strategy to deploy GenAI safely, explore our Generative AI strategy guide.

The promise of GenAI is massive.

But without fortified frameworks, the liabilities will be even bigger.

Bringing It All Together: What Winning Frameworks Do Differently

Across healthcare, BFSI, and manufacturing, the organizations that will lead in AI adoption aren't the ones with the most models. They're the ones with the most resilient frameworks.

Winning frameworks:

- Align AI relentlessly to high-value, operational outcomes.

- Engineer trust by governing data, models, and decisions from inception.

- Operationalize risk: quantify it, track it, adapt to it.

- Build regulatory auditability into the system's DNA.

- Extend seamlessly to manage GenAI-specific threats and opportunities.

- Treat cultural adaptation as a first-class engineering problem, not an afterthought.

AI will fail if organizations can't build structures to catch the 2% edge cases that destroy trust.

Frameworks are what transform AI from science experiments into competitive advantage.

The Ideas2IT Advantage: Building Frameworks That Endure

At Ideas2IT, we don’t just deliver AI models.

We build the frameworks that make AI sustainable even in the most complex, high-stakes environments like healthcare, BFSI, and manufacturing.

Our approach embeds:

- Strategic alignment: Every AI initiative mapped to mission-critical outcomes.

- Governance-by-design: From data integrity to model explainability to audit readiness.

- Operational resilience: Continuous monitoring, retraining protocols, and risk mitigation engineered into deployment pipelines.

- Generative AI extensions: Fortified controls for hallucination detection, IP risk management, and prompt governance.

- Cultural engineering: AI literacy, human-in-the-loop processes, and stakeholder trust programs from day one.

Whether you're scaling predictive AI, operationalizing GenAI, or navigating emerging AI regulations, Ideas2IT builds the adoption frameworks that turn ambition into resilient, real-world impact.

Conclusion:

If you can build an AI adoption framework that survives healthcare's scrutiny, it can survive anywhere.

Because if a framework can handle:

- Clinical drift,

- Regulatory volatility,

- Human life-or-death stakes,

- Societal-level fairness expectations,

then retail, logistics, banking, and manufacturing will be easier by comparison.

Healthcare is where AI frameworks go to be tested. The enterprises that treat it as a proving ground will emerge ready to scale AI sustainably across every domain they touch.

Build frameworks that work, and AI will endure.
