How to Build AI Data Infrastructure That Scales Effectively

TL;DR

According to a 2024 Gartner survey of over 1,200 data management leaders, 63% of organizations either do not have or are unsure if they have the proper data management practices for AI, putting their projects at serious risk.

As Roxane Edjlali, Senior Director Analyst, Gartner, warns:

“Organizations that fail to realize the vast differences between AI-ready data requirements and traditional data management will endanger the success of their AI efforts.”

This pressure is felt by CIOs, CEOs, and tech leaders alike. AI promises to transform business, but many organizations remain trapped trying to fit AI demands onto legacy data infrastructure built for traditional workloads, often with disappointing results.

The reality is clear: scaling AI on outdated data infrastructure is a recipe for stalled pilots, runaway costs, and missed opportunities. More than 90% of CIOs now say that managing cost is a major barrier to extracting value from AI, with predictions that cost estimates could be off by 500% to 1,000% if leaders don’t rethink their approach.

This blog outlines how organizations can build future-ready, AI data infrastructure to support real-time, reliable, and effective AI systems.

Why Legacy Data Infrastructure Falls Short for Scaling AI

Traditional data platforms were built primarily for static reporting and periodic analysis. AI applications, however, require capabilities that go far beyond these original designs. Here are the key reasons why legacy systems limit AI’s growth:

- Data Inconsistency and Schema Rigidity

Legacy systems often struggle with rigid schemas and inconsistent data formats, leading to data integration and quality challenges. A 2024 survey by Monte Carlo revealed that 68% of data teams lacked complete confidence in their data quality, highlighting the pervasive nature of this issue.

- Poor Data Validation

85% of AI projects fail due to poor or insufficient data, which includes delayed or outdated data that AI models depend on to make timely decisions. Legacy systems validate data in periodic batches, but AI requires continuous data quality monitoring. Without real-time validation, errors can slip through the cracks, affecting AI model performance.

- Lack of Data Lineage and Governance

Data governance ensures that AI models can be trusted and meet regulatory requirements. Legacy systems often lack comprehensive tracking of how data is processed and used, which can lead to compliance issues and hinder the effectiveness of AI systems. The shortage of data technology across organizations, cited by 43% in the CDO Insights survey, further complicates the ability to manage and govern data in a way that supports AI at scale.

To overcome these challenges, organizations must build an AI data infrastructure, a purpose-built system that handles high-volume, real-time data ingestion, ensures data quality, and supports complex transformations required by AI workloads. This infrastructure forms the backbone for reliable AI operations.

Next, let’s explore the core components essential for creating AI-optimized data infrastructure.

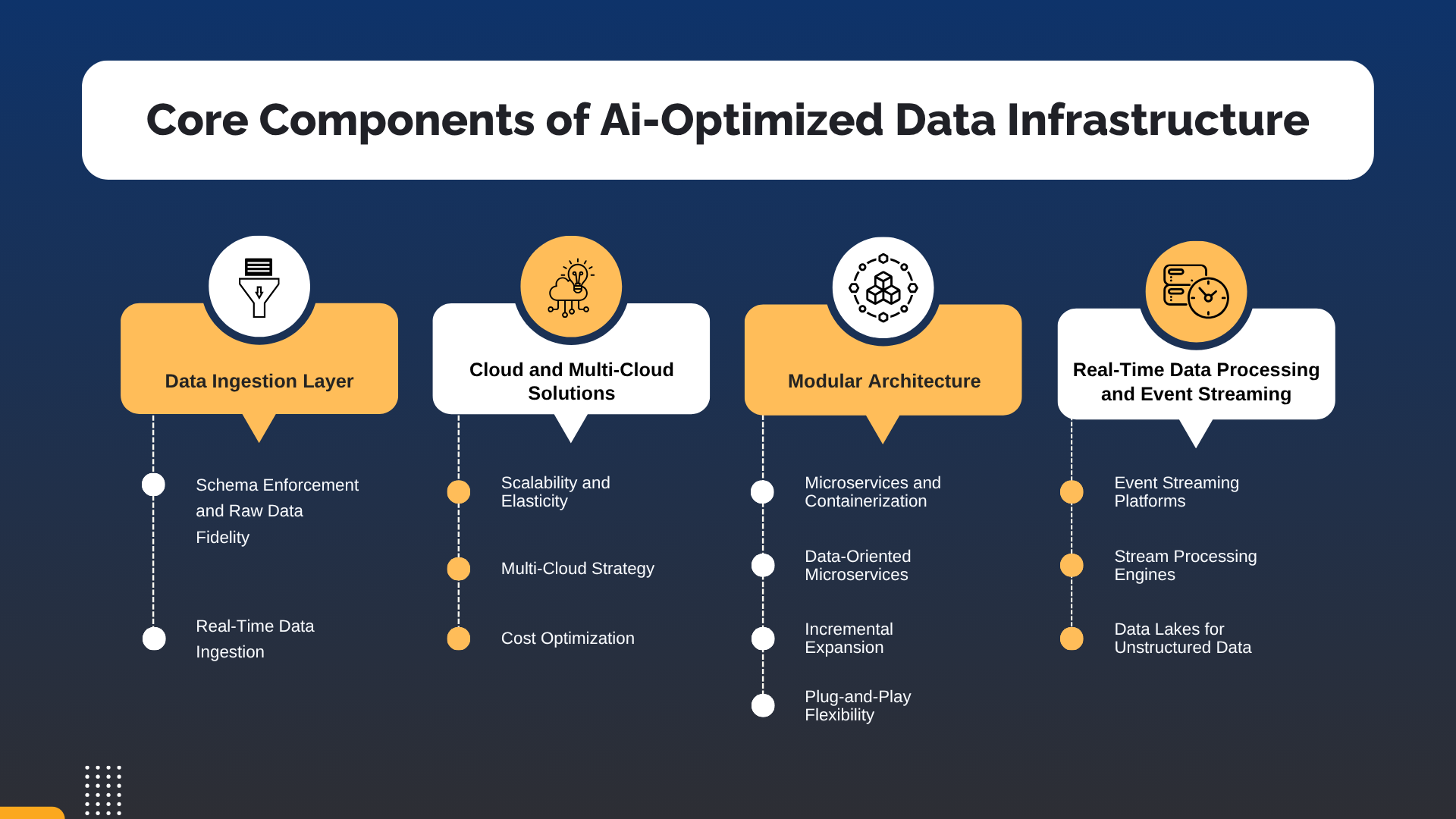

Core Components of AI-Optimized Data Infrastructure

The shift to AI-centric data infrastructure involves integrating advanced components that not only support data collection and processing but also ensure data quality, scalability, and real-time responsiveness. Here’s a breakdown of the essential components necessary for AI-optimized data infrastructure.

1. Data Ingestion Layer

For AI systems to function effectively, data ingestion must be structured to handle high-velocity data while maintaining data integrity.

- Schema Enforcement and Raw Data Fidelity

According to Gartner, 60% of AI projects will be abandoned by 2026 due to poor data quality, highlighting the need for strong data structuring early on. Enforcing data schemas during ingestion prevents structural inconsistencies that can compromise model training. Data validation tools such as Great Expectations and AWS Glue help ensure data is properly formatted at this stage, reducing errors downstream.

- Real-Time Data Ingestion

Traditional BI pipelines rely heavily on batch processing, but AI requires real-time data. Platforms like Apache Kafka, Amazon Kinesis, and Apache Flink facilitate rapid data streaming, reducing latency and enabling AI systems to respond to live data events effectively.
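To make schema enforcement at ingestion concrete, here is a minimal sketch in plain Python. It is not the Great Expectations or AWS Glue API; the `EVENT_SCHEMA` fields and the `validate_record` and `ingest` helpers are hypothetical names chosen for illustration. The idea is to check each record against a declared schema and route failures to a reject list with the raw record preserved.

```python
from datetime import datetime
from typing import Any

# Hypothetical schema for illustration: field name -> required Python type.
EVENT_SCHEMA: dict[str, type] = {
    "event_id": str,
    "user_id": int,
    "amount": float,
    "timestamp": str,  # must be a string that datetime.fromisoformat accepts
}


def validate_record(record: dict[str, Any], schema: dict[str, type] = EVENT_SCHEMA) -> list[str]:
    """Return a list of schema violations; an empty list means the record is valid."""
    errors: list[str] = []
    for name, expected in schema.items():
        if name not in record:
            errors.append(f"missing field: {name}")
            continue
        value = record[name]
        # bool is a subclass of int in Python, so reject it explicitly
        # (this sketch has no boolean fields).
        if isinstance(value, bool) or not isinstance(value, expected):
            errors.append(f"{name}: expected {expected.__name__}, got {type(value).__name__}")
    for name in sorted(set(record) - set(schema)):
        errors.append(f"unexpected field: {name}")
    timestamp = record.get("timestamp")
    if isinstance(timestamp, str):
        try:
            datetime.fromisoformat(timestamp)
        except ValueError:
            errors.append("timestamp: not a valid ISO 8601 value")
    return errors


def ingest(records):
    """Split records into accepted rows and rejected (record, errors) pairs."""
    accepted, rejected = [], []
    for record in records:
        errors = validate_record(record)
        if errors:
            rejected.append((record, errors))  # raw record kept untouched for replay
        else:
            accepted.append(record)
    return accepted, rejected
```

Keeping rejected records alongside their error messages, rather than dropping them, preserves raw data fidelity and lets teams replay them once the upstream issue is fixed.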

2. Cloud and Multi-Cloud Solutions

Scalability is fundamental to AI operations, and cloud infrastructure offers the elasticity to handle fluctuating data volumes while optimizing costs.

- Scalability and Elasticity

Worldwide end-user spending on public cloud services is projected to reach $723 billion in 2025, up from $595.7 billion in 2024. This highlights the growing reliance on cloud solutions to support AI’s expansion and flexible resource demands.

- Multi-Cloud Strategy

Distributing workloads across multiple cloud platforms mitigates the risk of vendor lock-in and enhances data resilience. For instance, a financial institution might use Azure for regulatory-compliant data storage and AWS for high-performance AI model training. This approach balances security with computational power, optimizing both cost and resource allocation.

- Cost Optimization

Cloud providers' pay-as-you-go pricing models minimize upfront costs and allow organizations to scale based on demand. This flexibility is critical for AI workloads, where data processing and storage needs fluctuate significantly.

3. Modular Architecture

Modular data architecture supports AI workloads by decoupling data services, enabling independent scaling and rapid integration of new technologies.

- Microservices and Containerization

Organizations can isolate services such as data ingestion, processing, and feature engineering by containerizing data components using tools like Docker and Kubernetes. This approach minimizes disruptions during updates and allows for targeted scaling.

- Data-Oriented Microservices

Organizations are increasingly adopting data-centric microservices, where each service is dedicated to a specific data function (e.g., streaming, validation, transformation).

- Incremental Expansion

Instead of building a comprehensive infrastructure upfront, starting with a Minimal Viable Infrastructure (MVI) allows organizations to introduce advanced features as data needs grow. For instance, a team might implement basic ETL processes initially, then add more advanced orchestration and transformation tools like Apache Airflow and dbt as data complexity increases.

- Plug-and-Play Flexibility

Modular systems facilitate easy integration of new AI tools and data sources, enabling organizations to rapidly adapt to emerging technologies or business requirements without costly overhauls.
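The plug-and-play idea can be sketched in a few lines of Python. This is an in-process analogy only: in a real deployment each stage would likely run as its own containerized service. The stage names (`drop_incomplete`, `add_value_squared`) and the `run_pipeline` helper are hypothetical.

```python
from typing import Callable, Iterable

Record = dict
# A stage is any callable that takes records and returns records.
Stage = Callable[[Iterable[Record]], Iterable[Record]]


def drop_incomplete(records: Iterable[Record]) -> Iterable[Record]:
    """Validation stage: keep only records that have a non-null 'value'."""
    return (r for r in records if r.get("value") is not None)


def add_value_squared(records: Iterable[Record]) -> Iterable[Record]:
    """Transformation stage: derive a simple feature from 'value'."""
    return ({**r, "value_squared": r["value"] ** 2} for r in records)


def run_pipeline(records: Iterable[Record], stages: list[Stage]) -> list[Record]:
    """Chain the stages in order; each stage only sees the previous stage's output."""
    for stage in stages:
        records = stage(records)
    return list(records)


raw = [{"value": 2}, {"value": None}, {}]
result = run_pipeline(raw, [drop_incomplete, add_value_squared])
```

Because every stage shares the same narrow interface, a new validation or transformation step can be added to the list, or an old one swapped out, without touching the others.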

4. Real-Time Data Processing and Event Streaming

The ability to process data in real time is critical to maintaining operational relevance for AI systems that rely on live data.

- Event Streaming Platforms

Implementing platforms such as Apache Kafka and Amazon Kinesis enables the ingestion and processing of streaming data, supporting applications like fraud detection and predictive maintenance.

- Stream Processing Engines

Tools like Apache Flink and Apache Storm allow for real-time analytics by processing data in motion, reducing latency and improving decision-making speed.

- Data Lakes for Unstructured Data

AI models often require unstructured data like text, images, and sensor readings. Data lakes, built using AWS S3, Azure Data Lake, or Hadoop, provide flexible storage for diverse data types, ensuring that AI models can access comprehensive datasets.
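To illustrate what a stream processing engine does with data in motion, here is a minimal tumbling-window count in plain Python. It is a sketch of the windowing concept, not the Flink or Storm API, and it assumes events arrive in timestamp order. Production engines use watermarks to handle late or out-of-order data. The function name and event shape are hypothetical.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def tumbling_window_counts(
    events: Iterable[tuple[int, str]], window_seconds: int
) -> Iterator[tuple[int, dict[str, int]]]:
    """Yield (window_start, counts_by_key) each time a window closes.

    Each event is a (timestamp_seconds, key) pair. Windows with no events
    are skipped rather than emitted as empty.
    """
    current_start = None
    counts: dict[str, int] = defaultdict(int)
    for ts, key in events:
        start = (ts // window_seconds) * window_seconds
        if current_start is not None and start != current_start:
            # Event time moved past the open window: emit it and start a new one.
            yield current_start, dict(counts)
            counts = defaultdict(int)
        current_start = start
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the final open window


events = [(0, "card_a"), (3, "card_a"), (9, "card_b"), (12, "card_a"), (25, "card_b")]
windows = list(tumbling_window_counts(events, window_seconds=10))
```

A fraud detection rule could then inspect each emitted window, for example flagging a card that appears more than a chosen number of times within ten seconds.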

With the right foundational components in place, the next step is integrating the specific technologies and tools to support AI’s operational needs. Here’s a closer look at how certain technologies fit into the AI-optimized data infrastructure.

Core Technologies and Tools for AI-Optimized Data Infrastructure

Organizations must integrate specific tools and technologies that address data complexity, support advanced analytics, and facilitate real-time processing to scale AI. The following components are essential for a strong AI-optimized data infrastructure.

1. Data Lakes vs. Data Warehouses: Hybrid Architectures

Traditional data warehouses are designed for structured data and predefined queries, while data lakes store unstructured and semi-structured data. As AI models increasingly rely on diverse data formats, combining both architectures in a hybrid lakehouse structure becomes critical.

To see how these architectures compare and complement each other, consider what each contributes to a hybrid design.

Why Hybrid?

- Scalability: Data lakes handle raw, unstructured data at lower storage costs, while warehouses optimize for structured data queries.

- Governance: Lakehouses integrate governance frameworks with metadata layers, ensuring data lineage and regulatory compliance.

- Performance: Unified data platforms reduce latency between AI training and analytics workflows, preventing data silos.

2. Feature Stores

Feature stores serve as centralized repositories for preprocessed data features, ensuring data consistency across training and inference. They optimize the AI pipeline by maintaining a single source of truth for data features.

Key Components:

- Offline Store: Stores historical feature data for model training and batch inference.

- Example: AWS SageMaker Feature Store integrates with S3 to handle large datasets for training ML models.

- Online Store: Provides low-latency access to feature vectors for real-time predictions.

- Example: In e-commerce, an online feature store can instantly retrieve customer profile features to enhance personalized recommendations.
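The offline and online split can be sketched with a toy in-memory class. This is a simplified illustration, not the SageMaker Feature Store API; `MiniFeatureStore` and its method names are hypothetical. The offline side keeps timestamped history for point-in-time training lookups, while the online side serves only the latest value.

```python
import bisect
from collections import defaultdict


class MiniFeatureStore:
    """Toy feature store: timestamped offline history plus a latest-value online view."""

    def __init__(self):
        # (entity_id, feature) -> parallel lists kept sorted by timestamp
        self._timestamps = defaultdict(list)
        self._values = defaultdict(list)
        # (entity_id, feature) -> most recent value, for low-latency reads
        self._online = {}

    def write(self, entity_id, feature, ts, value):
        key = (entity_id, feature)
        idx = bisect.bisect_right(self._timestamps[key], ts)
        self._timestamps[key].insert(idx, ts)
        self._values[key].insert(idx, value)
        self._online[key] = self._values[key][-1]

    def get_online(self, entity_id, feature):
        """Inference path: return the latest value, or None if never written."""
        return self._online.get((entity_id, feature))

    def get_as_of(self, entity_id, feature, ts):
        """Training path: latest value written at or before ts (point-in-time lookup)."""
        key = (entity_id, feature)
        idx = bisect.bisect_right(self._timestamps.get(key, []), ts)
        return self._values[key][idx - 1] if idx else None


store = MiniFeatureStore()
store.write("u1", "avg_order_value", ts=100, value=20.0)
store.write("u1", "avg_order_value", ts=200, value=35.0)
```

The point-in-time lookup matters because training on values that were not yet known at prediction time leaks future information into the model, which is one source of training and inference inconsistency.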

3. Automated Data Pipelines

Automation is critical for managing data flows across AI infrastructures. Automated pipelines reduce manual effort, optimize resource use, and prevent slowdowns.

Key Components:

- Event-Driven Architectures: Trigger real-time data processing based on specific events, such as transaction monitoring or fraud detection.

- Example: Apache Airflow enables dynamic scheduling of data pipelines, which helps optimize resource usage and prevent redundant data transfers.

- Cloud Integration: Automated pipelines connect seamlessly with cloud platforms like AWS, GCP, and Azure, facilitating hybrid and multi-cloud workflows.
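At the core of pipeline orchestration is running tasks in dependency order. The sketch below shows that idea with Python's standard-library `graphlib`. It is not Airflow's API, and the task names and the `run_dag` helper are hypothetical.

```python
from graphlib import TopologicalSorter


def run_dag(tasks, dependencies):
    """Run each task after all of its upstream tasks and return the execution order.

    tasks: task name -> zero-argument callable
    dependencies: task name -> set of upstream task names
    """
    order = []
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name]()
        order.append(name)
    return order


log = []
tasks = {
    "ingest": lambda: log.append("ingested raw data"),
    "clean": lambda: log.append("cleaned data"),
    "features": lambda: log.append("built features"),
    "train": lambda: log.append("trained model"),
}
dependencies = {
    "ingest": set(),
    "clean": {"ingest"},
    "features": {"clean"},
    "train": {"features"},
}
order = run_dag(tasks, dependencies)
```

A real orchestrator adds scheduling, retries, and parallel execution of independent branches on top of this ordering, and `TopologicalSorter` raises `CycleError` if the dependencies contain a loop.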

4. Data Quality Assurance

Implementing a strong data quality framework mitigates data inconsistencies, prevents model drift, and maintains compliance.

Core Components:

- Metadata Management: Tools like Apache Atlas track data lineage, allowing teams to trace data transformations and identify discrepancies that could impact model performance.

- Anomaly Detection: Continuous monitoring tools detect data drift in real-time, preventing outdated or corrupted data from influencing AI predictions.

- Schema Validation: Enforcing schema checks on data in the lake, either at write time or through schema-on-read when it is consumed, verifies data formats before they reach downstream pipeline stages, minimizing the risk of data mismatches.
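One common way to quantify data drift is the Population Stability Index (PSI), which compares how a feature is distributed in a reference sample versus a recent sample. The sketch below is a plain-Python illustration of that metric, not how any particular monitoring tool implements it. The bin count, the `eps` floor, and the 0.2 alert threshold (a widely used rule of thumb) are assumptions.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a reference sample and a recent sample of one numeric feature.

    Bins are equal-width over the reference range; values outside that range
    are clipped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = ((hi - lo) / bins) or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor at eps so empty bins do not produce log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = list(range(100))
shifted = [v + 50 for v in reference]

psi_same = population_stability_index(reference, reference)
psi_shifted = population_stability_index(reference, shifted)
drift_detected = psi_shifted > 0.2  # rule-of-thumb threshold, tune per feature
```

In practice a check like this would run on a schedule per feature, with the reference sample drawn from the training data.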

With the proper infrastructure and technologies in place, the next priority is ensuring AI pipelines are both effective and compliant. This requires strong data governance to uphold regulatory standards, maintain data integrity, and promote transparency throughout AI operations.

Data Governance and Compliance for AI Pipelines

Data governance and compliance are critical to ensuring data integrity, transparency, and ethical use in AI pipelines. A well-managed data pipeline mitigates the risks of breaches, bias, and regulatory violations that can undermine AI projects and damage organizational reputation.

Here’s how to implement effective data governance and maintain regulatory compliance across AI data pipelines.

1. AI Governance Frameworks

AI governance frameworks ensure that data handling aligns with both internal policies and external regulations, managing data from collection to model deployment. Gartner forecasts that by 2027, over 40% of AI-related data breaches will result from inadequate governance, emphasizing the need for strong frameworks to mitigate compliance and security risks.

Key Components of AI Governance Frameworks:

- Data Ownership: Define clear ownership of data assets, ensuring accountability and traceability across all datasets. Assign data stewards to manage specific data domains.

- Compliance Monitoring: Continuously monitor data access and usage to comply with regulations like GDPR and CCPA. Tools like Apache Ranger and AWS Lake Formation help enforce compliance and secure data access.

- Audit Trails and Lineage Tracking: Use data lineage tools to track how data flows across AI pipelines. This transparency is critical for regulatory compliance, particularly in high-scrutiny sectors like finance and healthcare.
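Lineage tracking comes down to recording, for every derived dataset, which inputs and which transformation produced it. Here is a minimal in-memory sketch of that idea. It does not represent the Apache Atlas or Apache Ranger APIs, and the `LineageGraph` class and dataset names are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timezone


class LineageGraph:
    """Records which datasets were derived from which, plus an append-only audit log."""

    def __init__(self):
        self._parents = defaultdict(set)  # dataset -> direct upstream datasets
        self.audit_log = []

    def record(self, output, inputs, transformation, actor):
        self._parents[output].update(inputs)
        self.audit_log.append({
            "output": output,
            "inputs": sorted(inputs),
            "transformation": transformation,
            "actor": actor,
            "recorded_at": datetime.now(timezone.utc).isoformat(),
        })

    def upstream(self, dataset):
        """Return every dataset that `dataset` depends on, directly or transitively."""
        seen, stack = set(), [dataset]
        while stack:
            node = stack.pop()
            for parent in self._parents.get(node, ()):
                if parent not in seen:
                    seen.add(parent)
                    stack.append(parent)
        return seen


graph = LineageGraph()
graph.record("clean_txns", ["raw_txns"], "deduplicate", actor="etl-service")
graph.record("features", ["clean_txns", "customers"], "join and aggregate", actor="feature-job")
```

With a structure like this, answering an auditor's question such as "which raw sources fed this model's features?" becomes a single `upstream` call.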

2. Transparency and Explainability

AI systems that operate as opaque "black boxes" pose significant risks, particularly in regulated sectors. Enhancing transparency and explainability is a regulatory requirement and a strategic imperative to build stakeholder trust and reduce adoption risks.

A McKinsey survey found that organizations identify explainability as a key risk when adopting generative AI, yet only 17% are actively working to address it. This highlights the growing need for organizations to prioritize model transparency.

Strategies for Implementing Explainability:

- Model Interpretability & Explainability Tools: Tools like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-Agnostic Explanations) help deconstruct AI model predictions, offering insights into which data features most influenced specific outcomes. This is crucial for compliance in sectors such as finance, where AI models are used for credit scoring or fraud detection.

- Data Provenance and Metadata Management: Maintain a clear record of data sources, transformations, and outputs with tools like Collibra and Alation, ensuring transparency and minimizing bias.

- Version Control: Use systems like Delta Lake to track data changes, ensuring consistency between training and production data.

- Compliance-Driven Reporting: Generate detailed audit reports that outline data usage, model outputs, and decision-making processes, demonstrating regulatory alignment.
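To show what a Shapley-based explanation actually computes, the sketch below calculates exact Shapley values for a tiny model by enumerating every feature subset. This is the underlying game-theoretic definition, not the SHAP library's API or its approximation algorithms, and it is only practical for a handful of features because the cost grows as 2^n. The toy credit model and its weights are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley attribution for one prediction.

    Features outside a coalition are replaced by their baseline value.
    """
    n = len(instance)

    def value(coalition):
        x = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(x)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley formula.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def toy_credit_model(x):
    """Hypothetical linear score: income, tenure, and missed payments."""
    return 2 * x[0] + 3 * x[1] - 1 * x[2] + 1


instance = [1, 2, 3]
baseline = [0, 0, 0]
phis = shapley_values(toy_credit_model, instance, baseline)
```

For a linear model each attribution equals the weight times the feature's distance from the baseline, and the attributions always sum to the difference between the prediction and the baseline prediction. That additivity is what makes them useful in audit reports.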

3. Continuous Monitoring

Continuous monitoring detects anomalies, data drift, and model degradation in real time, ensuring that issues are identified before they impact AI performance. Proactive monitoring helps maintain data quality and model reliability over time.

Key Monitoring Components:

- Data Quality Monitoring: Real-time validation tools help detect schema mismatches, missing data, and other inconsistencies that can affect AI model performance.

- Model Performance Tracking: Platforms like DataRobot or Arize AI track model accuracy, latency, and drift, ensuring AI systems perform as expected.

- Automated Alerts: Set up alerts to notify teams of data pipeline failures, performance issues, or feature drift, allowing quick remediation.
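An automated alert can be as simple as a rolling failure rate checked against a threshold. The sketch below illustrates that pattern in plain Python. The `RollingErrorRateAlert` class, window size, and threshold are hypothetical, and in production this logic would typically live in a monitoring platform's alert rules rather than in application code.

```python
from collections import deque


class RollingErrorRateAlert:
    """Fires when the failure rate over the last `window` observations exceeds `threshold`."""

    def __init__(self, window: int, threshold: float):
        self._outcomes = deque(maxlen=window)  # oldest outcomes drop off automatically
        self.threshold = threshold

    def observe(self, failed: bool) -> bool:
        """Record one pipeline run outcome and return True if the alert should fire."""
        self._outcomes.append(failed)
        if len(self._outcomes) < self._outcomes.maxlen:
            return False  # not enough history yet to judge
        return sum(self._outcomes) / len(self._outcomes) > self.threshold


alert = RollingErrorRateAlert(window=4, threshold=0.25)
run_failures = [False, False, False, True, True, False]
fired = [alert.observe(failed) for failed in run_failures]
```

Waiting for a full window before firing avoids noisy alerts from a single early failure. The trade-off is slower detection, so the window size should reflect how quickly remediation needs to start.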

4. Proactive Monitoring: Tools and Technologies

Maintaining data integrity in AI systems requires strong monitoring tools capable of managing complex data environments and AI workloads. The share of businesses abandoning their AI projects due to data quality issues, including undetected anomalies, has risen sharply, from 17% in 2024 to 42% in 2025, underscoring the importance of proactive monitoring.

Essential Monitoring Tools:

- Grafana and Prometheus: Track real-time data flow metrics, such as data ingestion rates, latency, and pipeline errors.

- AWS CloudWatch: Monitor cloud infrastructure performance, including storage utilization, data processing rates, and API response times.

- Monte Carlo and Bigeye: Implement anomaly detection systems that identify unexpected data patterns, preventing corrupted data from reaching AI models.

Even with the right strategy and tools, the path to AI readiness is fraught with potential pitfalls. Recognizing and addressing these challenges early on is crucial for avoiding delays, budget overruns, and performance slowdowns during your transition to an AI-driven infrastructure.

Common Pitfalls in Transitioning to AI-Ready Data Infrastructure

Transitioning to an AI-ready data infrastructure is a complex process that many organizations underestimate. Several recurring pitfalls can derail these efforts, leading to project delays, cost overruns, and underwhelming results. Below are the most common challenges.

1. Data Silos and Fragmentation

Legacy BI systems store data in separate systems, creating silos that prevent AI models from accessing complete and integrated datasets. This limits AI effectiveness and accuracy.

Solution:

- Centralize Data: To create a unified data repository, migrate to cloud-based storage solutions like AWS S3 or Google Cloud Storage.

- Data Integration: Implement advanced integration platforms to synchronize data across sources automatically.

2. Inconsistent and Poor-Quality Data

AI requires high-quality, validated data for practical model training. Poor data quality, including inconsistencies, outdated records, or missing values, is a significant hurdle.

Solution:

- Invest in Data Cleaning: Implement automated data cleansing so that only high-quality data enters the AI workflow, and adopt automated data preparation tools that integrate with data lakes.

- Implement Real-Time Validation: Validate records in-stream on platforms like Apache Kafka, so that only reliable data is passed on for AI processing.

3. Scalability Limitations

Legacy BI systems weren’t built for the scale and performance AI workloads demand. Traditional infrastructure struggles to support AI's massive data and computing requirements.

Solution:

- Cloud Migration: Migrate legacy systems to cloud-based platforms (AWS, Azure, GCP) that offer reliable infrastructure capable of handling large AI workloads. These platforms provide the necessary compute power and storage to support AI models without compromising performance.

- Utilize Cloud-Native AI Tools: Cloud-native solutions like Google BigQuery can handle the real-time processing of structured and unstructured data.

4. Data Timeliness and Real-Time Processing

Legacy BI systems rely on batch processing, leading to delays in data delivery, which is unsuitable for AI applications like fraud detection.

Solution:

- Adopt Real-Time Data Processing: Implement event-driven architectures with tools like Apache Kafka or Amazon Kinesis to process data in real time.

- Modernize Pipelines: Use orchestration tools like Apache Airflow to streamline data flow and ensure timely delivery to AI models.

5. Integration Complexity

Integrating AI tools with legacy BI infrastructure is rarely straightforward. Legacy systems may not support the necessary APIs or data models to connect with modern AI tools, leading to complex and costly integration efforts.

Solution:

- Modernize ETL/ELT Processes: Upgrade traditional pipelines to handle the dynamic nature of AI data using tools like Fivetran or Talend.

- API-First Approach: Adopt an API-first strategy to ensure smooth integration, enabling easy connections between legacy BI systems and new AI tools.

6. Data Governance and Compliance

AI systems process sensitive data, which increases the risk of compliance breaches. Legacy BI systems may not have the governance frameworks necessary to secure AI data.

Solution:

- Implement Governance Frameworks: Use tools like Apache Atlas or Collibra to track data lineage and ensure compliance with regulations like GDPR and CCPA.

- Security and Compliance by Design: Ensure that AI data pipelines are built with security and compliance in mind. Utilize cloud-native security tools to protect sensitive data and maintain compliance with regulations.

7. Cultural Resistance and Change Management

Teams accustomed to traditional BI workflows may resist the AI infrastructure transition, slowing adoption.

Solution:

- Implement Change Management: Develop a clear communication and training plan to guide employees through the transition, emphasizing the value of AI.

- Executive Buy-In: Ensure senior leadership is actively involved in driving the transition and aligning AI initiatives with business goals.

8. Talent Shortages and Skill Gaps

AI initiatives require specialized skills in data engineering, machine learning, and MLOps. Without the right talent, organizations struggle to build or maintain AI-ready infrastructures.

Solution:

- Upskill Internal Teams: Invest in training programs to develop in-house expertise in AI, data science, and cloud technologies.

- Targeted Recruitment: Focus on recruiting professionals with experience in AI infrastructure to build reliable, high-performance systems.

After understanding the potential challenges, let’s look at how to avoid them. A successful transition to AI requires a structured, strategic approach. Organizations can reduce risks and enhance their AI capabilities by following the key steps mentioned below.

Key Steps to Build AI-Ready Data Infrastructure

As organizations transition from traditional BI systems to AI-ready data infrastructures, it’s essential to follow a structured approach to ensure scalability, flexibility, and long-term success.

Below is a comprehensive checklist for transitioning to an AI-optimized data infrastructure, ensuring you avoid common pitfalls and effectively scale your AI initiatives.

- Have You Started with a Minimal Viable Infrastructure (MVI)?

- Identify immediate data storage, processing, and analytics needs.

- Opt for cloud storage (AWS S3, Google Cloud, Azure Blob) for scalability.

- Avoid complex infrastructure in the early stages for quick deployment.

- Is Your Infrastructure Modular for Scalability?

- Use microservices/serverless architecture for independent scaling.

- Design flexible data pipelines for easy AI tool integration.

- Target component-specific scaling to avoid system-wide reworks.

- Have You Automated Data Pipelines from the Start?

- Automate data ingestion, cleaning, and transformation for efficiency.

- Monitor resource usage with tools like Prometheus and Grafana.

- Implement cost control with real-time monitoring and alerts.

- Are You Implementing Advanced Data Engineering Practices?

- Ensure automated data quality checks and real-time validation.

- Use parallel processing frameworks (e.g., Apache Spark) for large datasets.

- Does Your Infrastructure Support Horizontal Scaling?

- Enable horizontal scaling by adding nodes/resources, not just larger machines.

- Use distributed systems for optimal performance as data grows.

- Are You Using Cloud-Native Platforms for Flexibility?

- Utilize cloud-native platforms for modular, cost-effective resources.

- Adopt pay-as-you-go models to accommodate evolving AI needs.

- Are You Using Feature Stores for Consistency Across Models?

- Centralize offline/online feature stores for consistent data.

- Enable feature reuse across teams to avoid redundant engineering.

- Integrate with data lakes/warehouses for seamless data flow.

- Have You Established a Strong Data Governance and Compliance Framework?

- Assign ownership for data quality, privacy, and security.

- Ensure compliance with regulations (GDPR, CCPA) using governance tools.

- Are You Addressing Talent and Skills Gaps?

- Upskill internal teams on AI infrastructure tools and technologies.

- Collaborate with external experts or consultants for knowledge support.

- Are You Implementing a Phased and Pilot-Driven Approach?

- Start with pilot projects to test the infrastructure and refine it.

- Iterate based on feedback for continuous improvement.

- Scale gradually, expanding infrastructure as needed.

By following this checklist, organizations can ensure a smooth transition to an AI-ready data infrastructure, optimizing for growth capacity and long-term success. However, implementing these practices requires expertise and experience. Partnering with a trusted AI consultant like Ideas2IT can help.

Build the Right Data Infrastructure for Scalable AI with Ideas2IT

At Ideas2IT, we specialize in Data Science Consulting & Advanced AI Services to build reliable, high-performance data infrastructures specifically designed for AI initiatives. With a focus on AI-powered engineering, we provide end-to-end solutions that ensure your AI systems are resilient, operational, and aligned with business goals.

- Comprehensive Data Engineering: We transform unstructured data into structured repositories, covering strategy, data ingestion, modeling, governance, and scalability to support AI.

- Technology Stack: Our stack includes Snowflake, Tableau, Python, Apache Hadoop, SQL, Dolly, ECS, AWS, LangChain, SBERT, and Vue, tailored to client needs.

- AI/ML Solutions: We provide AI/ML solutions that solve complex business challenges, optimizing models for measurable outcomes.

- Generative AI & LLM Solutions: We offer custom GenAI and LLM solutions designed for business-specific needs.

- Reporting & Dashboarding: Advanced reporting and visualization services deliver real-time, data-driven insights for better decision-making.

Partner with Ideas2IT to design and implement an AI data infrastructure that scales as your business grows. Contact us today to scale your AI initiatives.

Conclusion

To effectively scale AI, businesses must move beyond outdated BI pipelines that simply can't support the demands of modern AI workflows. Legacy systems fall short in areas like real-time data processing, data quality, and scalability, which are crucial for AI success.

Transitioning to AI-ready infrastructure focused on real-time ingestion, modular architecture, cloud flexibility, and strong data governance is key to future-proofing your AI initiatives. For organizations looking to move from pilot projects to large-scale AI deployments, this shift is essential for optimizing performance, minimizing risks, and achieving long-term success in a data-driven world.
