Deploying LLM Powered Applications with HuggingFace TGI

TL;DR

Now that LLM adoption in enterprises is maturing, effective MLOps for LLMs is the need of the hour. At Ideas2IT, we are constantly exploring platforms for deploying and scaling LLMs for our customers.

Recently, for one of our projects, we adopted TGI from HuggingFace with great success. This blog describes TGI and how we used it to deliver a complex LLM-based chatbot for our enterprise customers.

What is TGI?

With the recent release of Meta's Llama 2 and a variety of other open-source models, LLMOps is gaining increasing attention. These LLMs simplify the creation of internal chatbots, yet running them in a production environment remains intricate and demanding.

TGI is a promising platform for large-scale LLM deployments. Put simply, Text Generation Inference (TGI) is HuggingFace's toolkit for serving LLMs: it wraps a model in a server that applications can query over HTTP.

LLM powered chatbot with TGI - Architecture

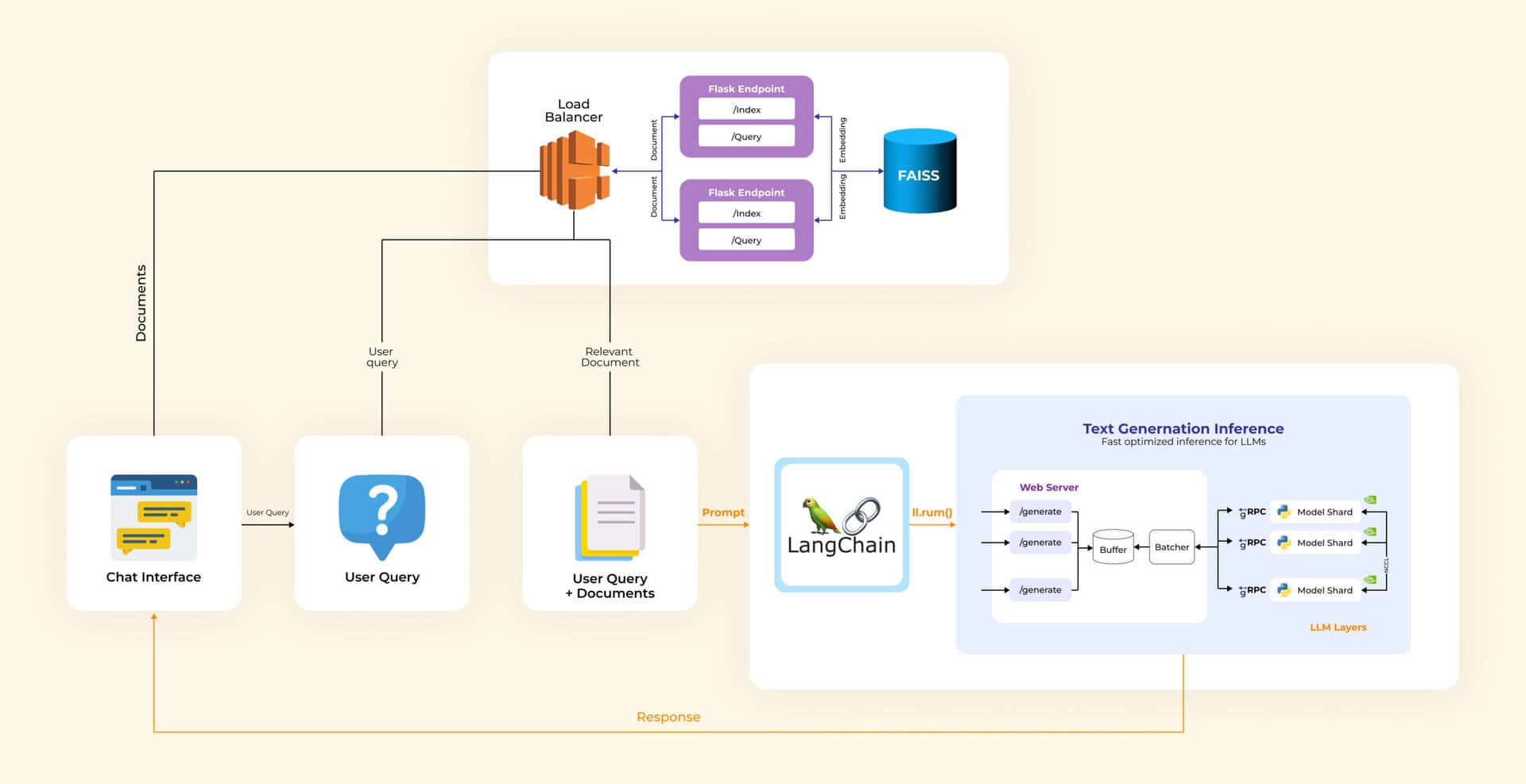

The architecture diagram shows a text-based chat scenario built on three tiers: the Front End, the Embedding Layer, and the LLM Layer.

The LLM layer is served by TGI, so it can be scaled to accommodate varying workloads.
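
As a rough illustration of how the three tiers might hand data to each other, the sketch below wires a front-end handler to an embedding step and an LLM call. The article does not describe the embedding layer's internals, so the word-count `embed` function, the in-memory `DOCUMENTS` list, and the stubbed `call_llm` are all hypothetical stand-ins. In a real deployment, `call_llm` would send the prompt to the TGI endpoint.

```python
import math
from collections import Counter

# Hypothetical knowledge base; a real system would use a vector store.
DOCUMENTS = [
    "ICD10 codes classify diseases and health conditions.",
    "TGI serves large language models over a REST endpoint.",
]

def embed(text):
    """Toy embedding: lowercase word counts (stand-in for a real embedding model)."""
    return Counter(text.lower().replace(".", " ").replace("?", " ").split())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question):
    """Embedding layer: return the stored document most similar to the question."""
    q = embed(question)
    return max(DOCUMENTS, key=lambda doc: cosine(q, embed(doc)))

def build_prompt(question, context):
    return f"Context: {context}\nQuestion: {question}\nAnswer:"

def call_llm(prompt):
    """LLM layer stub; a real implementation would call the TGI endpoint here."""
    return f"[model output for a {len(prompt)}-character prompt]"

def handle_chat(question, llm=call_llm):
    """Front-end entry point: retrieve context, build the prompt, query the LLM."""
    context = retrieve(question)
    return llm(build_prompt(question, context))
```

Passing the LLM call in as a parameter keeps the front end independent of how the model is hosted, which is the property that lets the LLM tier be scaled separately.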

Why TGI?

The Rationale Behind Choosing TGI

Several factors come to the forefront when deploying an LLM in production:

- Scalability: TGI is compatible with a Kubernetes-based architecture. Because it can be deployed in Kubernetes pods, scaling up or down in response to changing demand is straightforward.

- Batching: TGI supports batching, which is essential for processing multiple requests at the same time. The TGI router handles the batching and queuing of incoming requests.

- Queuing: TGI manages incoming requests in a queue, which keeps processing structured and orderly and makes the deployment more robust.

- Streaming: TGI's streaming interface sends LLM-generated tokens to the user interface in real time, so users see results as they are produced.

- LLM Support: TGI supports a diverse range of LLMs, including notable models like Llama 2 and Falcon, which makes it suitable for many different use cases.

Taken together, TGI's compatibility with Kubernetes, its support for batching and queuing, its real-time streaming, and its broad model support make it a strong choice for text generation inference.
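
To make the batching and queuing points concrete, here is a deliberately simplified sketch of a router that queues requests and groups them into batches under a token budget. It is not TGI's actual scheduling algorithm, which is more sophisticated; the `Request` class, the `Router` class, and the 1024-token budget are assumptions chosen for illustration.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class Request:
    prompt: str
    input_tokens: int     # tokens in the prompt
    max_new_tokens: int   # tokens the caller allows the model to generate

    @property
    def total_tokens(self):
        return self.input_tokens + self.max_new_tokens

class Router:
    """Toy FIFO router: queue requests, then drain them in token-bounded batches."""

    def __init__(self, max_batch_total_tokens=1024):
        self.max_batch_total_tokens = max_batch_total_tokens
        self.queue = deque()

    def submit(self, request):
        # Reject requests that could never fit in a batch.
        if request.total_tokens > self.max_batch_total_tokens:
            raise ValueError("request exceeds the batch token budget")
        self.queue.append(request)

    def next_batch(self):
        """Pop requests in arrival order until the next one would exceed the budget."""
        batch, used = [], 0
        while self.queue and used + self.queue[0].total_tokens <= self.max_batch_total_tokens:
            request = self.queue.popleft()
            batch.append(request)
            used += request.total_tokens
        return batch
```

With three queued requests of 400 tokens each and a 1024-token budget, the first call to `next_batch()` returns two requests and the third waits in the queue for the next batch.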

How to set up TGI?

Setting up TGI is a straightforward process. If your machine has CUDA 11.8 or later available, you can start TGI with a single Docker command. Here's how:

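    # Your Hugging Face access token (Llama 2 is a gated model)
    TOKEN=
    MODEL=meta-llama/Llama-2-7b-chat-hf
    # Assumed host directory for caching downloaded model weights
    volume=$PWD/data

    # --num-shard 4 splits the model across 4 GPUs; adjust to your hardware
    docker run --gpus all \
      --shm-size 1g \
      -p 8080:80 \
      -e HUGGING_FACE_HUB_TOKEN=$TOKEN \
      -v $volume:/data \
      ghcr.io/huggingface/text-generation-inference:0.9.3 \
      --model-id $MODEL \
      --sharded true \
      --num-shard 4 \
      --max-batch-prefill-tokens 512 \
      --max-batch-total-tokens 1024 \
      --max-input-length 512 \
      --max-total-tokens 1024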

That's it! With these steps, your model is up and running and served through a REST endpoint on port 8080, which makes it available for text generation from any application that can send HTTP requests.
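
For reference, a request to that endpoint could look like the sketch below, which uses only the Python standard library. It assumes the container from the command above is reachable at `http://127.0.0.1:8080` (the host port mapped by `-p 8080:80`) and uses TGI's `/generate` route. The helper names `build_generate_request` and `generate` are illustrative, not part of TGI.

```python
import json
import urllib.request

TGI_URL = "http://127.0.0.1:8080"  # assumption: host port mapped by `-p 8080:80`

def build_generate_request(prompt, max_new_tokens=20, base_url=TGI_URL):
    """Build a POST request for TGI's /generate route."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        f"{base_url}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def generate(prompt, max_new_tokens=20, base_url=TGI_URL, timeout=60):
    """Send the prompt to a running TGI server and return the generated text."""
    request = build_generate_request(prompt, max_new_tokens, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["generated_text"]
```

With the server running, `generate("What are the ICD10 codes?")` returns the model's completion as a string. Keep in mind that the prompt length plus `max_new_tokens` has to stay within the `--max-total-tokens` value passed at startup.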

Leveraging TGI for seamless interaction

To simplify things further, TGI offers a Python client, text_generation, that supports streaming.

With just a few lines of code, you can run LLM inference with streaming:

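    from text_generation import Client

    # Assumes the TGI container started earlier is listening on local port 8080
    client = Client("http://127.0.0.1:8080")

    text = ""
    for response in client.generate_stream("What are the ICD10 codes?", max_new_tokens=20):
        # Skip special tokens such as end-of-sequence markers
        if not response.token.special:
            text += response.token.text
    print(text)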

The generated tokens are streamed back as they are produced. This example collects them into a string and prints the result once generation finishes.
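
If the tokens need to reach a UI rather than the console, the loop can be wrapped in a generator that yields each text fragment as it arrives. This is a sketch under assumptions: `stream_text` is a hypothetical helper, and `client` is any object exposing `generate_stream` the way the `text_generation` client does. The `StubClient` class exists only so the helper can be exercised without a live server.

```python
from types import SimpleNamespace

def stream_text(client, prompt, max_new_tokens=20):
    """Yield generated text fragments one token at a time, skipping special tokens."""
    for response in client.generate_stream(prompt, max_new_tokens=max_new_tokens):
        if not response.token.special:
            yield response.token.text

class StubClient:
    """Stand-in for a real client, used only to demonstrate the helper offline."""

    def __init__(self, tokens):
        self.tokens = tokens  # list of (text, special) pairs

    def generate_stream(self, prompt, max_new_tokens=20):
        for text, special in self.tokens[:max_new_tokens]:
            yield SimpleNamespace(token=SimpleNamespace(text=text, special=special))

# Offline demonstration with made-up tokens (not real model output).
stub = StubClient([("ICD", False), ("-10", False), ("</s>", True)])
answer = "".join(stream_text(stub, "What are the ICD10 codes?"))
```

A web handler could forward each yielded fragment to the browser as soon as it is available instead of joining them at the end.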

License

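At the time of writing, TGI is released under the Hugging Face Optimized Inference License (HFOIL). Licensing terms have differed between TGI releases, so please refer to the license agreement shipped with the version you deploy for comprehensive details.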
