Everything You Should Know About Apache Kafka

TL;DR

Discover everything you need to know about Apache Kafka, the popular distributed streaming platform. From its architecture to use cases, this comprehensive guide covers all the essential aspects of Kafka for beginners and experts alike.

What Is Apache Kafka?

Apache Kafka is a distributed data streaming platform that can publish, subscribe to, store, and process streams of records in real time. It is designed to handle data streams from multiple sources and deliver them to multiple consumers. Kafka is open-source software written in Java and Scala. It started as an internal system at LinkedIn and is now developed under the Apache Software Foundation.

Understanding Event Data Streams

To understand events better, consider a train running from Chennai to Kanyakumari. Suppose we log the position of the train every 20 seconds. This gives us a stream of the train's locations, which could be used for the following purposes.

- Notify passengers in a timely manner (Notification Service)

- Calculate travel time trends (Analytics Service)

- Alert passengers waiting at upcoming stations (Alerts Service)

- Manage train traffic effectively (Traffic Management Service)

Every 20 seconds, the location data is sent to the data streaming platform, where many services can use it for different purposes. This sequence of location data is what we call a Data Stream.
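As a rough illustration, the train example can be modeled in plain Python as a sequence of location events that several services read independently. The names `LocationEvent` and `location_stream`, the train ID, and the coordinates are all hypothetical, and nothing here talks to Kafka itself.

```python
from dataclasses import dataclass
from typing import Iterator, List


@dataclass(frozen=True)
class LocationEvent:
    """One position reading; the field names are assumptions for this sketch."""
    train_id: str
    seconds_since_departure: int
    latitude: float
    longitude: float


def location_stream(train_id: str, readings: int, interval_s: int = 20) -> Iterator[LocationEvent]:
    """Yield one made-up position per interval (coordinates are illustrative only)."""
    for i in range(readings):
        yield LocationEvent(train_id, i * interval_s, 13.08 - 0.01 * i, 80.27 - 0.005 * i)


events: List[LocationEvent] = list(location_stream("TRAIN-1", readings=4))

# Different services can read the same sequence for their own purposes.
notification_view = [e.seconds_since_departure for e in events]
analytics_view = events[-1].seconds_since_departure - events[0].seconds_since_departure
```

Here `notification_view` holds the timestamps a notification service might act on, while `analytics_view` is the elapsed time an analytics service might track.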

Structure of Apache Kafka

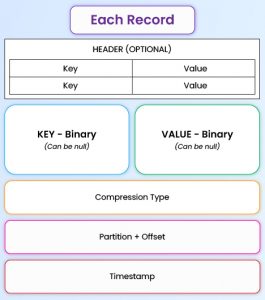

Record Structure

Topics and Partitions

A Topic is a stream of data representing a particular process (for example, logs, orders, or locations).

- We can consider a Topic as a table without constraints or relations.

- We can store any kind of data in a Topic (in any case, the data is converted to bytes before it is persisted).

- A Kafka cluster can hold many topics, and each topic is identified by a name that is unique within the cluster.

- Data in a Topic is immutable: once a record is written to a partition, it cannot be changed.

- By default, data is retained in a topic for one week, and this retention period can be changed in the configuration.

A Topic is split into Partitions, and the data within each partition is ordered. Each message within a partition is assigned an incremental ID called the Offset.
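The relationship between a partition and its offsets can be sketched as a toy append-only log. This is a simplified in-memory model, not Kafka's storage format; the `Partition` class and its methods are hypothetical names chosen for the example.

```python
from typing import List


class Partition:
    """A toy append-only log; the list index stands in for the offset."""

    def __init__(self) -> None:
        self._records: List[bytes] = []

    def append(self, value: bytes) -> int:
        """Store the record at the end of the log and return its offset."""
        self._records.append(value)
        return len(self._records) - 1

    def read(self, offset: int) -> bytes:
        """Return the record stored at the given offset."""
        return self._records[offset]


partition = Partition()
first = partition.append(b"order-created")   # first record in an empty partition
second = partition.append(b"order-paid")     # next record gets the next offset
```

There is deliberately no update or delete method: records are only ever appended, which mirrors the immutability described in the list above.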

Cluster

A Cluster is a collection of Kafka Brokers, together with the Topics and Partitions they host. The Brokers manage the persistence and replication of data, so if one Broker goes down, another Broker can take over and deliver the same service with minimal interruption. The purpose is to distribute workloads across replicas and partitions.

Brokers

A Kafka server is known as a Broker, and it oversees the message storage of the Topics it hosts. Brokers are responsible for managing the streams of messages and maintaining the state of each partition in the cluster.

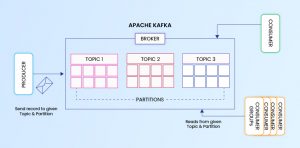

Producer – Consumer

A Producer acts as a data source for the data stream. It writes, optimizes, and publishes messages to one or more topics. It also performs operations such as serializing, compressing, and load balancing data among brokers through partitioning.

A Consumer reads the data stream from one or more topics and processes messages as they come in. Consumers can start reading from the latest messages or from any offset they choose.
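The partitioning step a producer performs can be sketched as hashing the record key and taking the result modulo the number of partitions. The function name `choose_partition` is hypothetical, and `zlib.crc32` is used here only as a stand-in hash for illustration; it is not the hash that Kafka's own clients use.

```python
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a record key to a partition index (stand-in hash, not Kafka's own)."""
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    return zlib.crc32(key) % num_partitions


# The same key always lands on the same partition, which keeps
# all records for that key in one ordered log.
p1 = choose_partition(b"user-42", 3)
p2 = choose_partition(b"user-42", 3)
```

Because the mapping depends on the partition count, changing the number of partitions would change where existing keys land in a scheme like this.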

Topic Replication

To ensure high availability of data, Kafka replicates it according to the Kafka Replication Factor, which is the number of copies of the data stored across separate Kafka brokers. Setting the Replication Factor allows Kafka to keep data available and prevent data loss if a broker goes down or cannot handle a request.

For example, a Topic with a Replication Factor of 2 will have one additional copy on a separate Broker.

Note: The replication factor cannot exceed the number of Brokers.
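The replication rule above can be sketched as a function that places each partition's copies on distinct brokers and rejects a replication factor larger than the broker count. The round-robin placement, the function name `assign_replicas`, and the broker IDs are assumptions for illustration; this is not Kafka's actual assignment algorithm.

```python
from typing import Dict, List


def assign_replicas(brokers: List[int], num_partitions: int, replication_factor: int) -> Dict[int, List[int]]:
    """Return partition -> list of broker IDs holding a copy (simple round-robin)."""
    if replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    if replication_factor > len(brokers):
        # Each copy must live on a different broker, so this cannot be satisfied.
        raise ValueError("replication factor cannot exceed the number of brokers")
    assignment: Dict[int, List[int]] = {}
    for partition in range(num_partitions):
        assignment[partition] = [
            brokers[(partition + i) % len(brokers)] for i in range(replication_factor)
        ]
    return assignment


layout = assign_replicas(brokers=[101, 102, 103], num_partitions=3, replication_factor=2)
```

With a replication factor of 2, every partition in `layout` lists two different brokers, so losing any single broker still leaves one copy of each partition.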

ZooKeeper

ZooKeeper is a centralized service for maintaining configuration information, naming, and Kafka cluster details. It provides coordination for the cluster of servers running Apache Kafka. It keeps track of the Brokers in the Kafka cluster and, because it maintains the cluster's state, it can determine which Brokers have crashed and which have just been added. Newer Kafka releases can instead run in KRaft mode, which replaces ZooKeeper with a built-in consensus layer.

Data Flow in Apache Kafka

Let us now look, at a high level, at how a record is handled by a Broker (Kafka server). In the example below, we have a broker with three topics, and each topic has 8 partitions.

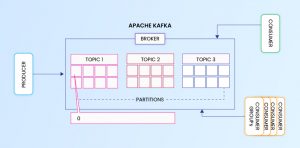

Now the producer sends a record to Partition 1 in Topic 1. Since the partition is empty, the record is stored at offset 0.

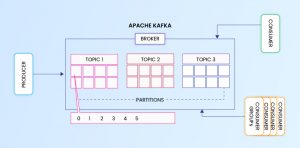

The next record added to Partition 1 will be stored at offset 1, the one after that at offset 2, and so on. This is the same offset that a consumer uses to specify where to start reading records.

Here's a Real-time Example

To explain the usage of Apache Kafka, consider an example where our goal is to track users' clicks on a website. When an event happens on the website (e.g., someone signs in using Google, logs out, presses a button, or comments on a post), a tracking event and information about the event are placed into a record. The record is stored in a specified Kafka topic.

For this example, we name the topic "USER_CLICK" and partition it by user_id. For example, a user with ID 1 is mapped to Partition 0, a user with ID 2 to Partition 1, a user with ID 3 to Partition 2, and so on. These 3 partitions are spread across 2 different machines.

Suppose the user with ID 1 clicks a button on the website. The web application publishes a record with the tracking event and its information to Partition 0 of the topic "USER_CLICK". The record is appended to the partition's commit log, and the message offset is incremented.

Now a consumer can pull messages from the "USER_CLICK" topic and show usage monitoring in real time. Alternatively, it can replay previously consumed messages by setting the offset to an earlier one.
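The click-tracking flow can be sketched end to end with an in-memory model of the "USER_CLICK" topic. The mapping of user 1 to partition 0 follows the example; the function names, the record fields, and the use of plain lists in place of a real broker are assumptions made for illustration.

```python
from typing import Dict, List

NUM_PARTITIONS = 3

# In-memory stand-in for the "USER_CLICK" topic: partition number -> ordered records.
user_click: Dict[int, List[dict]] = {p: [] for p in range(NUM_PARTITIONS)}


def partition_for(user_id: int) -> int:
    """Mapping from the example: user 1 -> partition 0, user 2 -> partition 1, and so on."""
    return (user_id - 1) % NUM_PARTITIONS


def publish(user_id: int, action: str) -> int:
    """Append a click record to the user's partition and return its offset."""
    log = user_click[partition_for(user_id)]
    log.append({"user_id": user_id, "action": action})
    return len(log) - 1


def consume(partition: int, start_offset: int) -> List[dict]:
    """Read every record in a partition from start_offset onward."""
    return user_click[partition][start_offset:]


publish(1, "sign_in")
publish(2, "comment")
last_offset = publish(1, "log_out")

latest = consume(partition=0, start_offset=last_offset)  # only the newest record
replayed = consume(partition=0, start_offset=0)          # replay from the beginning
```

Reading from `last_offset` returns only the most recent click, while reading from offset 0 replays everything that user's partition has received.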

Conclusion

In this blog, you learned the basics of Apache Kafka and its core architecture, including Topics, Brokers, and Replication. Understanding these basics should help you go deeper into solving big data problems and handling streams of data in a variety of use cases.