Running LLMs on CPU: A Comprehensive Guide

TL;DR

Imagine being able to run a chatbot directly on your CPU. Not every chatbot needs real-time inference on expensive H100 GPUs: with the right choice of model and infrastructure, LLM use cases such as chatbots can run on a CPU.

The key decision is which large language model (LLM) to use, because the model's parameter count largely determines inference cost. Not every task needs real-time responses, either: correcting inaccuracies in patient data, converting formats, or extracting key terms from discharge summaries can all be done offline, without expensive hardware such as GPUs.

Let's illustrate this with an example: extracting keywords from a discharge summary.

Sample Discharge Summary

The patient discharge summary used in this example contains 3,020 characters, or approximately 727 tokens. In this post, we compare Llama-2 running on a GPU with quantized Llama-2 models running on a CPU through llama.cpp.
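One way to check a token count like this is to run the text through the model's own tokenizer. The minimal sketch below assumes a `llama_cpp.Llama` object from llama-cpp-python, whose `tokenize` method takes UTF-8 bytes; the helper names are introduced here for illustration. It also checks that the prompt plus the requested answer length fit into Llama-2's 4,096-token context window.

```python
def count_tokens(llm, text: str) -> int:
    """Count tokens with the model's own tokenizer (assumed: a llama_cpp.Llama instance)."""
    # Llama.tokenize expects UTF-8 bytes and, by default, prepends the BOS token.
    return len(llm.tokenize(text.encode("utf-8")))


def fits_in_context(prompt_tokens: int, max_new_tokens: int, n_ctx: int = 4096) -> bool:
    """Prompt and generated tokens share one context window (4,096 tokens for Llama-2)."""
    return prompt_tokens + max_new_tokens <= n_ctx
```

The exact count depends on which tokenizer is used, so a figure computed this way may differ slightly from the 727 tokens quoted above.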

Prompt

We use the following simple prompt to have the LLMs extract answers:

```text
You are a helpful and polite assistant. From the given context, extract the answers based on the user question. If the answer is not present in the context, just say "Information not available"

Context:
{context}

Question:
{question}

Answer:
```
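Because `{context}` and `{question}` are ordinary Python format fields, the template can be filled with the built-in `str.format`. A minimal sketch; `PROMPT_TEMPLATE` and `build_prompt` are names introduced here for illustration.

```python
PROMPT_TEMPLATE = (
    "You are a helpful and polite assistant. From the given context, extract the answers "
    "based on the user question. If the answer is not present in the context, just say "
    '"Information not available"\n\n'
    "Context:\n{context}\n\n"
    "Question:\n{question}\n\n"
    "Answer:"
)


def build_prompt(context: str, question: str) -> str:
    """Fill the extraction prompt with a document and a question."""
    # Only the template is parsed for {fields}; braces inside the values are inserted verbatim.
    return PROMPT_TEMPLATE.format(context=context.strip(), question=question.strip())
```

The same function can feed both the GPU and the CPU setups, so the two models see identical prompts.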

Information extraction using Llama-2

Setup

Llama-2, developed by Meta, is an open-source large language model (LLM) that is licensed for commercial use and is claimed to outperform GPT-3.5 on specific tasks. Before starting, make sure the torch and transformers packages are installed.

Model Download

For this test, we use the chat version of the Llama-2 7B model, available on Hugging Face at https://huggingface.co/meta-llama/Llama-2-7b-chat-hf.

Before running the test, note that you must first request access to the model through Meta's form: https://ai.meta.com/resources/models-and-libraries/llama-downloads/

Infrastructure

The Llama-2 7B model can be loaded on a single GPU, such as an NVIDIA T4 or A10.
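A rough way to see why a 7B model fits on one of these cards is to estimate the memory taken by the weights alone: parameters × bits per parameter ÷ 8. This sketch deliberately ignores activations, the KV cache, and framework overhead, so real usage is higher. For reference, the T4 has 16 GB of memory and the A10 has 24 GB.

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Approximate memory for the model weights only, in decimal GB (1e9 bytes).

    Excludes activations, KV cache and CUDA/framework overhead.
    """
    return n_params * bits_per_param / 8 / 1e9
```

For example, `weight_memory_gb(7e9, 16)` gives 14.0 GB for float16 weights, while `weight_memory_gb(7e9, 4)` gives 3.5 GB for 4-bit weights. Llama-2 7B actually has about 6.74 billion parameters, roughly 13.5 GB in float16, which leaves little headroom on a 16 GB T4.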

Inference Script

The inference script loads the Llama-2 7B model on a single NVIDIA T4 GPU and runs inference. It can be accessed from here.
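The linked script is not reproduced here. As a rough sketch of what such a script might look like, the code below uses the Hugging Face transformers API to load the model in float16 (the T4 does not natively support bfloat16) and generate an answer greedily. It assumes torch and transformers are installed, a CUDA GPU is available, and your Hugging Face account has access to the gated model. The `to_llama2_chat` wrapper follows Meta's `[INST]`/`<<SYS>>` chat convention; whether the original script wraps the prompt this way is an assumption.

```python
def to_llama2_chat(system: str, user: str) -> str:
    """Wrap a system message and a user turn in the Llama-2 chat format (assumed usage).

    The tokenizer adds the <s> BOS token itself, so it is not included here.
    """
    return f"[INST] <<SYS>>\n{system}\n<</SYS>>\n\n{user} [/INST]"


def load_llama2(model_id: str = "meta-llama/Llama-2-7b-chat-hf"):
    # Heavy imports live here so the helper above is usable without torch installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # float16 halves memory versus float32, so the ~13.5 GB of weights fit on a 16 GB T4.
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.float16)
    return tokenizer, model.to("cuda").eval()


def generate_answer(tokenizer, model, prompt: str, max_new_tokens: int = 256) -> str:
    import torch

    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    with torch.no_grad():
        # do_sample=False gives deterministic greedy decoding for extraction tasks.
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)
    # generate() returns prompt + continuation; decode only the newly generated tokens.
    new_tokens = output_ids[0, inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True).strip()
```

Typical usage would be `tokenizer, model = load_llama2()` followed by `generate_answer(tokenizer, model, to_llama2_chat(instructions, user_turn))`.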

Results

Information extraction using llama.cpp

The primary goal of llama.cpp is to run large language models with 4-bit integer quantization on a MacBook or a standard Windows machine. llama.cpp uses its own model file format, GGML (since superseded by GGUF), which supports widely used open models such as LLaMA, Alpaca, Vicuna, and Falcon, all of which can run effectively on CPU-only machines.
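To make "4-bit integer quantization" concrete, here is a simplified, pure-Python illustration of block-wise quantization: the weights are split into blocks of 32, each block stores one floating-point scale, and every weight is rounded to a signed 4-bit integer in [-8, 7]. It is in the spirit of ggml's Q4_0 format but is not its exact algorithm or on-disk layout.

```python
def quantize_block(values: list[float]) -> tuple[float, list[int]]:
    """Quantize one block to signed 4-bit integers in [-8, 7] plus one scale."""
    amax = max(abs(v) for v in values)
    # Map the largest magnitude to 7; an all-zero block gets a dummy scale of 1.0.
    scale = amax / 7 if amax > 0 else 1.0
    q = [max(-8, min(7, round(v / scale))) for v in values]
    return scale, q


def quantize_q4(weights: list[float], block_size: int = 32) -> list[tuple[float, list[int]]]:
    """Split the weights into fixed-size blocks, each with its own scale."""
    return [quantize_block(weights[i:i + block_size])
            for i in range(0, len(weights), block_size)]


def dequantize_q4(blocks: list[tuple[float, list[int]]]) -> list[float]:
    """Reconstruct approximate weights as scale * q."""
    out: list[float] = []
    for scale, q in blocks:
        out.extend(scale * x for x in q)
    return out
```

Each reconstructed weight is off by at most half its block's scale, which is where the accuracy loss comes from. With one 16-bit scale per 32 weights, a format like this costs 4 + 16/32 = 4.5 bits per weight, versus 16 bits for float16.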

See the llama.cpp GitHub repository for more details: https://github.com/ggerganov/llama.cpp

Setup

Setting up llama.cpp is straightforward: clone the repository from the terminal and build it with make.

```bash
git clone https://github.com/ggerganov/llama.cpp.git
cd llama.cpp
make -j
```

This produces an executable named "main" in the repository directory, which is used to run inference. If you are not familiar with the C++ tooling and its configuration, Python bindings for llama.cpp are available as an alternative; see the abetlen/llama-cpp-python repository: https://github.com/abetlen/llama-cpp-python
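If you use the compiled binary, it can be driven from Python with the standard library's subprocess module. The sketch below uses only the `-m` (model path), `-p` (prompt), `-n` (number of tokens to predict) and `--temp` options of `main`; the model path in any call is yours to supply. Recent llama.cpp releases have renamed this binary (for example to `llama-cli`), so the `binary` default is an assumption to adjust for your build.

```python
import subprocess


def build_main_command(model_path: str, prompt: str, n_predict: int = 256,
                       binary: str = "./main", temperature: float = 0.0) -> list[str]:
    """Build the argument list for llama.cpp's command-line program (binary name assumed)."""
    return [
        binary,
        "-m", model_path,             # quantized model file
        "-p", prompt,                 # prompt text
        "-n", str(n_predict),         # maximum number of tokens to generate
        "--temp", str(temperature),   # temperature 0 makes decoding greedy
    ]


def run_main(cmd: list[str]) -> str:
    # Passing a list (no shell=True) means the prompt needs no shell quoting.
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout
```

By default the program echoes the prompt before the generated text, so the returned stdout contains both.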

The Python package can be installed with pip:

```bash
pip install llama-cpp-python
```

Model Download

As mentioned previously, llama.cpp uses its own model file format. The original GGML format has since been replaced by GGUF, which is the format provided in the repository referenced below. We download quantized versions of both the Llama-2 7B and 13B models from Hugging Face.

Several quantization variants are available for download. For our purposes, we use a Q4 (4-bit) quantized version.
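One way to fetch a single quantized file is `hf_hub_download` from the huggingface_hub package. TheBloke's GGUF repositories name files as `<model>.<quantization>.gguf`; the exact filename used here (`llama-2-7b-chat.Q4_0.gguf`) is assumed from that convention, so check the repository's file list before running.

```python
def gguf_filename(model: str, quant: str) -> str:
    """Filename following the '<model>.<quant>.gguf' naming in TheBloke's repos (assumed)."""
    return f"{model}.{quant}.gguf"


def download_gguf(repo_id: str = "TheBloke/Llama-2-7B-Chat-GGUF",
                  model: str = "llama-2-7b-chat", quant: str = "Q4_0",
                  local_dir: str = "models") -> str:
    # Imported lazily so gguf_filename works without huggingface_hub installed.
    from huggingface_hub import hf_hub_download

    # Downloads one file (not the whole repository) and returns its local path.
    return hf_hub_download(repo_id=repo_id, filename=gguf_filename(model, quant),
                           local_dir=local_dir)
```

Downloading a single file matters here because these repositories hold many quantization variants of the same model.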

Reference - https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGUF

Inference Script

Next, we write a simple, reusable script named "inference.py" that uses llama-cpp-python to run our test questions. The inference script can be accessed from here.
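A minimal sketch of such a script is shown below; it is not the linked script. `Llama(model_path=..., n_ctx=..., n_threads=...)` loads the quantized file, and calling the object returns an OpenAI-style completion dict whose `usage` field reports token counts. The model path, thread count, and generation settings are assumptions. The `timed_completion` helper also records wall-clock latency and tokens per second, which is useful for reproducing a latency comparison like the one that follows.

```python
import time


def load_model(model_path: str = "models/llama-2-7b-chat.Q4_0.gguf", n_threads: int = 4):
    # Hypothetical model path; the import is lazy so timed_completion works without llama_cpp.
    from llama_cpp import Llama

    # n_ctx=4096 matches Llama-2's context window; n_threads is an assumed tuning value.
    return Llama(model_path=model_path, n_ctx=4096, n_threads=n_threads, verbose=False)


def timed_completion(llm, prompt: str, max_tokens: int = 256) -> dict:
    """Run one completion and return the text, latency and generation throughput."""
    start = time.perf_counter()
    out = llm(prompt, max_tokens=max_tokens, temperature=0.0)  # temperature 0: greedy
    elapsed = time.perf_counter() - start
    n_generated = out["usage"]["completion_tokens"]
    return {
        "text": out["choices"][0]["text"].strip(),
        "seconds": elapsed,
        "completion_tokens": n_generated,
        # Includes prompt processing time, so this understates pure decoding speed.
        "tokens_per_second": n_generated / elapsed if elapsed > 0 else float("inf"),
    }
```

Keeping the model loading separate from the timed call ensures that the one-off load time is not counted as inference latency.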

Results

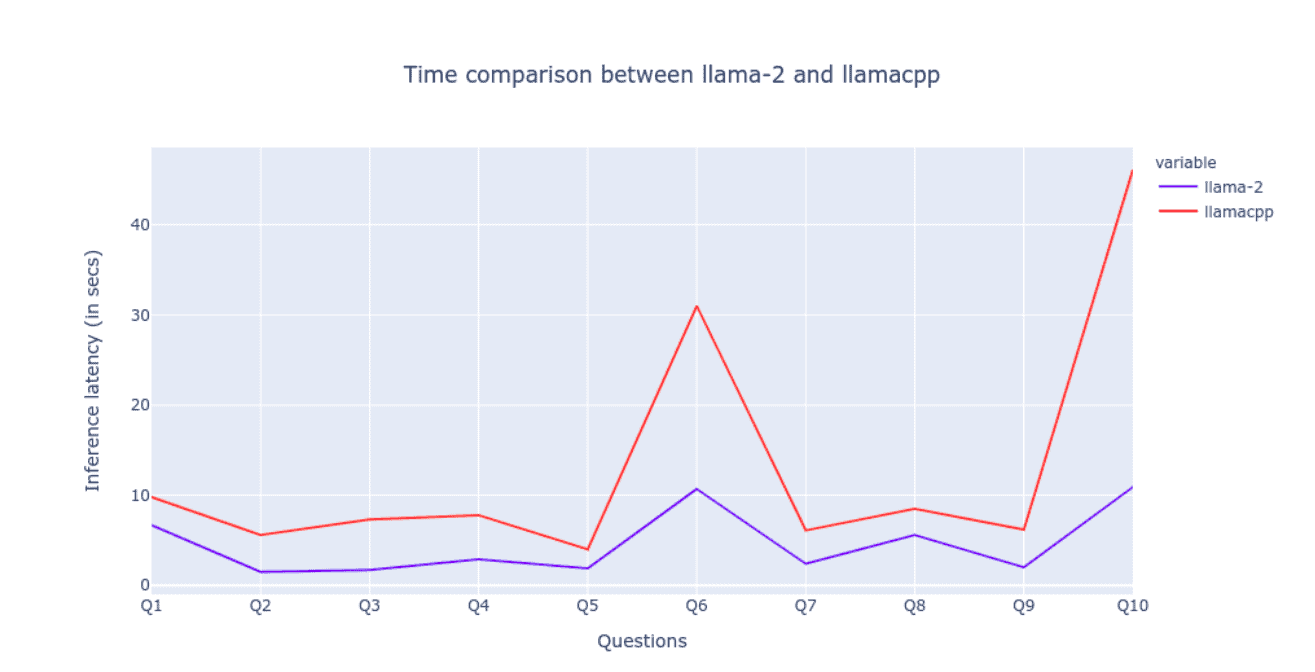

Inference Latency Comparison

The chart shows that llama.cpp takes noticeably longer when generating longer answers. For the remaining questions, however, its latency is much closer to that of Llama-2 on the GPU.
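A simple back-of-the-envelope model helps explain this pattern (an assumption for reasoning, not a measurement from this test): the latency of one answer is roughly the time to process the $N_{\text{in}}$ prompt tokens plus a per-token cost for each of the $N_{\text{out}}$ generated tokens.

$$
T_{\text{total}} \approx T_{\text{prefill}}(N_{\text{in}}) + N_{\text{out}} \, t_{\text{token}}
$$

Tokens are generated one at a time, and each step reads the model weights from memory, so $t_{\text{token}}$ is typically much larger on a CPU than on a GPU. The gap between the two setups therefore grows with the answer length $N_{\text{out}}$.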

Cost Analysis

Running Llama-2 on a GPU instance (AWS g4dn.xlarge) costs $0.526 per hour, or about $4,607 per year if it runs continuously.

Hosting llama.cpp on a CPU instance (AWS t3.xlarge) costs $0.1664 per hour, or about $1,457 per year.

The CPU instance therefore costs less than one-third as much as the GPU instance, a reduction of roughly 68%. Note that this is an approximate estimate based on AWS hourly rates.
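The annual figures assume each instance runs around the clock, that is, 24 × 365 = 8,760 hours per year. The arithmetic, using the hourly rates quoted above:

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, assuming continuous operation


def annual_cost(hourly_rate: float) -> float:
    """Yearly cost of an instance that never stops."""
    return hourly_rate * HOURS_PER_YEAR


def savings_fraction(baseline_hourly: float, alternative_hourly: float) -> float:
    """Fraction of the baseline cost saved by switching to the alternative."""
    return 1 - alternative_hourly / baseline_hourly


gpu = annual_cost(0.526)    # g4dn.xlarge: about $4,607.76 per year
cpu = annual_cost(0.1664)   # t3.xlarge: about $1,457.66 per year
saved = savings_fraction(0.526, 0.1664)  # about 0.68, i.e. a ~68% reduction
```

The ratio `cpu / gpu` is about 0.32, which is why the CPU instance comes in just under one-third of the GPU cost.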

Conclusion

In conclusion, this exploration of large language models (LLMs) highlights several practical considerations. The llama.cpp project can be used to build capable applications, such as FreedomGPT, that run effectively on a CPU. While llama.cpp significantly reduces infrastructure costs, it comes with trade-offs: 4-bit quantization shrinks the model, but running the quantized model on a CPU means longer inference latency for long outputs, and quantization reduces accuracy. These trade-offs call for a well-informed decision before adopting llama.cpp.

References:

- Llama 2 - https://ai.meta.com/llama/

- llama.cpp - https://github.com/ggerganov/llama.cpp

- llama-cpp-python - https://github.com/abetlen/llama-cpp-python

- FreedomGPT - https://github.com/ohmplatform/FreedomGPT
