Building a Business Case for AI/ML: 5 Key Principles

TL;DR

Most management teams recognize that investment in innovation is needed for long-term success. Artificial intelligence and machine learning (AI/ML) projects are seen as essential to that innovation.

The Current State of AI and ML Business Cases

First, let us look at the state of business cases for AI and machine learning today, starting with Gartner:

"Business cases for AI projects are complex to develop as the costs and benefits are harder to predict than for most other IT projects,” explains Moutusi Sau, principal research analyst at Gartner. "Challenges particular to AI projects include additional layers of complexity, opaqueness and unpredictability that just aren’t found in other standard technology.”

Forbes Insights also identified that the urgency for AI projects was greater amongst IT stakeholders and less so amongst C-Suite and even less among the board of directors.

"While 45% say IT stakeholders express “extreme urgency” that AI be applied more widely within their firms, only 29% see that same sense of urgency among their C-suite (the percentage is even lower among boards of directors—10%).”

On the other hand, technology companies like Apple, Google, Amazon, and Netflix are pouring billions into AI projects. But the outcomes of many of these mega projects are likely to be judged over a long-term horizon of ten to fifteen years.

The recommendation systems on Netflix, Amazon or Apple News powered by machine learning have been outstanding successes. But in other areas, the jury is out. Apple’s autonomous car effort, which was called the mother of all AI projects, has been scaled down. There have been resets on when driverless cars will become available. Plans for large scale AI projects such as the launch of autonomous cars are even further delayed, and the business case may be weak.

With autonomous vehicles, “you may find yourself in a company that requires billions of dollars of capital,” with no clear timeline for building a large business or seeing a return on the investment, said Aaron Jacobson, a partner at NEA.

It is also harder to apply past templates to easily create business cases for AI and machine learning projects. For example, a business case for mobile projects was easy. You looked at the functionality that was there on the web and simply ported it to mobile.

Front-end projects were easy to justify as well. They would give a better user experience, and that mattered a lot to business sponsors. Projects to meet a regulatory need have the easiest business case. Reporting projects and data warehouses made their business case long ago, but they do not truly qualify as AI.

Based on all this, here are some preliminary conjectures:

- Simpler AI use cases, like recommendation systems, seem to have a solid business case. But adoption has still been limited to a small percentage of companies.

- Complex AI projects, such as autonomous cars, are more difficult than even the best engineers imagine them to be.

- Technology companies are pouring billions of dollars into AI projects because they have the luxury to do so. Their businesses generate tremendous free cash flow, so they are able to invest in technologies such as the artificial intelligence used in driverless cars.

- It appears that there are few AI or machine learning projects in IT that have a solid business case and that could be done with little risk.

5 Key Principles To Build a Business Case For AI/ML

So how do we make a business case for AI or machine learning in IT? Is this a lost cause with a huge career risk? Read on to explore some powerful techniques for making a business case for AI and machine learning.

First Principle

Let us first understand what applying first principles means. Elon Musk explains first principles as follows:

“It’s most important to reason from first principles rather than by analogy. The normal way we conduct our lives is we reason by analogy. We’re doing this because it’s like something else which was done or it’s like other people are doing. It’s mentally easy to reason by analogy rather than by first principles. First principles is the Physics-way of looking at the world. What that really means is that you boil things down to the most fundamental truths and then reason up from there. That takes a lot more mental energy.”

So if we reason by analogy, we are likely to fail in building a business case for AI. The approach to building a business case for mobile enablement or handling regulatory requirements will not work.

First principles can also be understood as deconstruction followed by reconstruction: you break something down into smaller building blocks and then construct something new from those building blocks.

Step 1: Deconstructing AI

Applying that to Artificial Intelligence, you could ask: what is Artificial Intelligence? Unfortunately, even some of the founders of AI acknowledge that no one has clearly defined what falls under Artificial Intelligence. For the moment, let us consider the following categories:

- Machine learning and Neural Networks

- Natural Language Processing

- Robotics

- Fuzzy logic

Machine Learning and Neural Networks

Machine learning can be described as a system of prediction based on previously available information and discovered patterns. The prediction can be of two types:

- Prediction or probability of an event happening. Will an event happen or will it not happen? The technical name of a predictive model of this type is a classification model.

- Prediction of a value. The technical name for a predictive model of this type is a regression model.

Once we have deconstructed machine learning to a system of prediction, it becomes a lot simpler. There are various types of machine learning models. The most popular ones are Scorecards (linear models), Decision Trees (Classification And Regression Trees or CART) and Artificial Neural Networks (ANNs). All of them either predict the probability of an event happening or predict a value.
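To make the two prediction types concrete, here is a minimal sketch in plain Python. The scorecard weights, feature names, and data points are invented for illustration; a real model would be fitted to historical data with a machine learning library.

```python
import math

# Hypothetical scorecard weights; in practice these are learned from data.
INTERCEPT = -4.0
WEIGHTS = {"age": 0.05, "bmi": 0.08}


def predict_probability(features):
    """Classification: probability (0..1) that the event happens."""
    score = INTERCEPT + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-score))  # logistic function


def fit_line(xs, ys):
    """Regression: fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


# Classification model: will the event happen?
probability = predict_probability({"age": 60, "bmi": 30})

# Regression model: what value do we expect?
slope, intercept = fit_line([1, 2, 3, 4], [12.0, 14.0, 16.0, 18.0])
predicted_value = slope * 5 + intercept

print(f"probability of event: {probability:.2f}")
print(f"predicted value: {predicted_value:.1f}")
```

The first function returns a probability and the second returns a number. That is the whole difference between a classification model and a regression model from a business case point of view.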

Natural Language Processing

Natural Language Processing (NLP) is a field of Artificial Intelligence that gives machines the ability to read, understand and derive meaning from human languages. Major applications of NLP include AI chatbots, grammar checking, and voice bots that respond to human commands.

Within IT departments, the role of Artificial Intelligence in 2024 has largely been in machine learning and natural language processing.

Robotics

Robotics involves the design, construction, operation, and use of robots to perform tasks in various environments. Unlike traditional automation, robotics enables machines to interact with the physical world autonomously or semi-autonomously. Applications range from manufacturing and logistics to healthcare and space exploration. Robotics integrates principles from mechanical engineering, electrical engineering, and computer science to create intelligent systems capable of executing complex tasks with precision.

Fuzzy Logic

Fuzzy logic is a form of multi-valued logic that deals with reasoning that is approximate rather than fixed and exact. It is particularly useful in systems where inputs may not have clear-cut boundaries or are inherently uncertain. Fuzzy logic allows for degrees of truth, which enables more nuanced decision-making compared to traditional Boolean logic. Applications of fuzzy logic include control systems, where precise control is challenging due to imprecise or ambiguous data, as well as in decision support systems and artificial intelligence.
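As a small illustration of degrees of truth, the sketch below uses triangular membership functions together with the common min/max operators for fuzzy AND and OR. The temperature ranges are made-up values chosen only for the example.

```python
def triangular(x, left, peak, right):
    """Degree of membership (0..1) of x in a triangular fuzzy set."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def fuzzy_and(a, b):
    """Fuzzy AND as the minimum of two degrees of truth."""
    return min(a, b)


def fuzzy_or(a, b):
    """Fuzzy OR as the maximum of two degrees of truth."""
    return max(a, b)


temperature = 27.0
warm = triangular(temperature, 15.0, 25.0, 35.0)  # hypothetical "warm" range
hot = triangular(temperature, 25.0, 35.0, 45.0)   # hypothetical "hot" range

print(f"warm: {warm:.1f}, hot: {hot:.1f}")
print(f"warm AND hot: {fuzzy_and(warm, hot):.1f}")
print(f"warm OR hot: {fuzzy_or(warm, hot):.1f}")
```

In Boolean logic 27 degrees would be either warm or not warm. Here it is mostly warm and slightly hot at the same time, which is what allows a controller to respond gradually.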

Step 2: Reconstructing AI with business problems

Now that we have deconstructed AI to a degree, how do we use that to create a business case? We do that by reconstructing it with known business problems. For example, if you run a hospital you would have the health information of your patients.

You could create a machine learning model and predict which of your patients could be at risk of heart disease or diabetes based on their health information, demographics etc.

If you are a retailer and you are sending a discount coupon to your loyal customers, you could create a machine learning model that uses their past purchase data to predict which of your customers is likely to redeem your coupon and also estimate their purchase value.

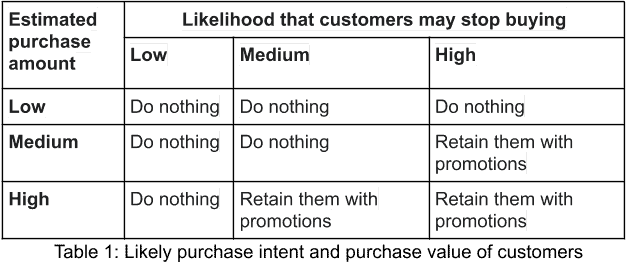

Most retailers segment their customers so that they can target each segment differently and increase sales. We can apply machine learning to customer purchase data to see what promotions the retailer can offer to increase sales. The retailer could look at the past purchase data of customers and apply first principles to develop two machine learning models:

- A classification model that will predict those customers who are likely to stop buying. This is known commonly as an attrition model.

- A regression model that would predict the purchase value of customers. This is commonly known as a revenue model.

These two models can be combined into a table that gives each customer’s purchase intent and purchase value. Combining them yields nine sub-segments overall, to which we can even apply automated decision rules, such as retaining customers by offering them special promotions.
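One way to picture the nine sub-segments is to bucket each model's output into three levels and cross them. The sketch below assumes that 3 x 3 layout; the cut-offs, customer scores, and decision rules are all hypothetical.

```python
def bucket(value, low_cut, high_cut):
    """Map a number to 'low', 'medium' or 'high' using two cut-offs."""
    if value < low_cut:
        return "low"
    if value < high_cut:
        return "medium"
    return "high"


def sub_segment(attrition_probability, predicted_purchase_value):
    """Return (attrition risk, purchase value) labels: one of 3 x 3 = 9 cells."""
    # Cut-offs are hypothetical; a retailer would choose its own.
    risk = bucket(attrition_probability, 0.33, 0.66)
    value = bucket(predicted_purchase_value, 50.0, 200.0)
    return risk, value


def decision_rule(risk, value):
    """Example automated rule: a hypothetical retention policy."""
    if risk == "high" and value == "high":
        return "send special promotion"
    if risk == "high":
        return "send standard reminder"
    return "no action"


# Made-up model outputs per customer: (attrition probability, purchase value).
scores = {"c1": (0.80, 350.0), "c2": (0.10, 20.0), "c3": (0.70, 30.0)}
actions = {cid: decision_rule(*sub_segment(p, v)) for cid, (p, v) in scores.items()}

for customer_id, action in actions.items():
    print(customer_id, sub_segment(*scores[customer_id]), action)
```

The classification model supplies the first axis and the regression model supplies the second. The decision rules are where the business case connects to a concrete action.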

The above example showed how first principles can be used to create a business case for machine learning. Now we will explore techniques of how we can make an even more compelling business case for machine learning.

Pareto Principle - 80/20

Vilfredo Pareto, an Italian economist, noticed that approximately 80% of Italy's land was owned by 20% of the population. He then carried out surveys on a variety of other countries and found to his surprise that a similar distribution applied.

The management consultant Joseph Juran came across the work of Vilfredo Pareto and began to apply the Pareto principle to quality issues (for example, 80% of a problem is caused by 20% of the causes).

So focusing on 20% of the causes could produce dramatic results. His techniques were adopted by Japanese automobile manufacturers and leaders from all over the world. Management consultant, author, and investor, Richard Koch noted the even broader applicability of the 80-20 principle.

Thus Richard Koch notes that: "In business, many studies have shown that the most popular 20% of products account for approximately 80 percent of the sales; and that 20 percent of the largest customers also account for about 80 percent of sales; and that roughly 20 percent of sales account for 80 percent of the profits”.

He gives more examples:

"80 percent of crime will be accounted for by only 20 percent of criminals, that 80 percent of accidents will be due to 20 percent of drivers, that 80 percent of wear and tear on your carpets will occur in only 20 percent of their area, and that 20 percent of your clothes get worn 80 percent of the time”

He adds a caveat that ‘80/20’ is not a magic formula. The actual pattern is very unlikely to be precisely 80/20. Sometimes it is 70/30 and in extreme cases such as the internet due to network effects, it could even be 95/5.

Thus, based on this principle, one can find the ‘relevant few’ who need the most attention. Let us take an example to see how the 80/20 principle can be applied. Say a specialty retailer takes the 80/20 principle as a starting point and hypothesizes that 20% of its customers lead to 80% of its sales. The remaining 80% of the customers then contribute only 20% of the sales.

Since retail is a very competitive market where the cost of customer acquisition is very high, the retailer would not want to lose any of those high-value customers. The retailer could run a report on its sales and look for the percentage of customers that accounts for 80% of the retailer’s sales.
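Such a report can be sketched in a few lines: sort customers by sales, accumulate from the top, and stop once 80% of total sales is reached. The sales figures below are invented for the example.

```python
def customer_share_for_sales_share(sales_by_customer, target_share=0.80):
    """Fraction of customers (largest first) needed to reach target_share of sales."""
    ordered = sorted(sales_by_customer, reverse=True)
    target = target_share * sum(ordered)
    running_total = 0.0
    for count, sales in enumerate(ordered, start=1):
        running_total += sales
        if running_total >= target:
            return count / len(ordered)
    return 1.0


# Hypothetical annual sales per customer.
sales = [500, 310, 50, 40, 30, 25, 15, 10, 10, 10]
share = customer_share_for_sales_share(sales)

print(f"{share:.0%} of customers account for 80% of sales")
```

With real data the answer will rarely be exactly 20%, which is why the hypothesis is worth checking before building anything on top of it.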

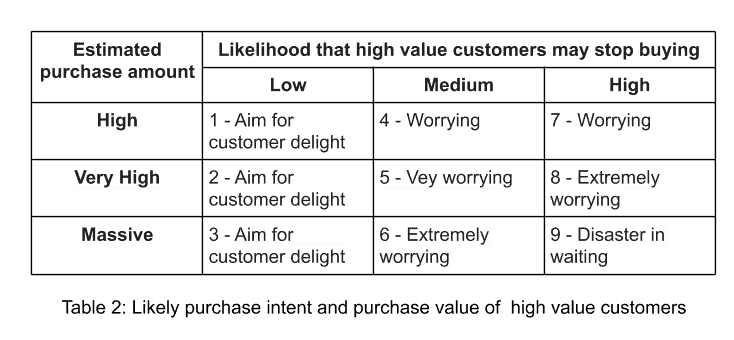

The retailer may find that it is 20%, or it could even be 30%; as noted earlier, the 80-20 rule is not exact. These are the retailer's high-value customers, who need to be given special treatment. Now the retailer could look at the past purchase data of these high-value customers and apply first principles to develop two machine learning models, just as we did earlier.

Note that now the model is restricted to the high-value customers. So the retailer is now likely to get a two-dimensional matrix.

So combining the 80-20 principle with first principles and machine learning gives the retailer very powerful insights. The retailer can now employ highly personalized strategies in each of these 9 different sub-segments. Keep in mind that applying the 80-20 principle multiplied the power of machine learning, perhaps by an order of magnitude.

Had the 80-20 principle not been applied, and had the machine learning model been targeted at the whole customer base rather than the top 20% of high-value customers, the decision matrix would very likely have looked like Table 1, which we covered earlier. The 80-20 principle is also a power law.

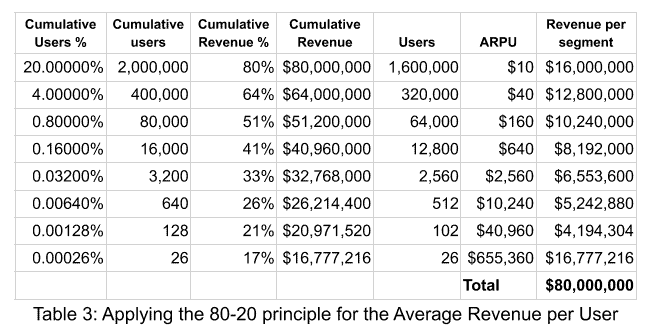

Thus 20% of the customers give 80% of the business. It follows that 20% of the 20% that is 4% of the customers would give 64% of the business. Expanding that further, 0.8% of the customers would give 51% of the business.

Thus 0.16% of the customers would give 41% of the business. And this goes on. Now it would be logical to expect that the behavior and purchase data of the 0.16% of customers who give 41% of the business would be very different from 80% of the customers who give 20% of the business. Thus it is likely that the machine learning models that we created would be more accurate when they are applied to smaller slices of the population.

The applicability of power laws is a little hard to grasp. To make it easier to understand, let us use the same example. Assume that the retailer has 10 million customers and total annual revenues of $100 million, so the average revenue per user (ARPU) over a year is $10. All this looks very reasonable, and when we apply the 80-20 principle, the data comes to life.

Now it tells us something very interesting. At one end of the top 20% of users, 1.6 million users spend $10 on average. At the other extreme, 26 users spend $655,360 on average. In the real world the 80-20 split might not apply exactly; it may be 70-30 or 60-40. But applying the 80-20 principle illuminates the data in an entirely new way.
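The figures above can be reproduced by applying the 80-20 split repeatedly: at each step the top 20% of the current group is assumed to carry 80% of that group's revenue. The sketch below is a minimal version of that arithmetic; the function name and row layout are illustrative choices.

```python
def pareto_bands(customers, revenue, levels, top_share=0.20, revenue_share=0.80):
    """Repeatedly split off the top 20% of customers, who carry 80% of revenue.

    Returns (users, revenue, arpu) rows: one row for the remainder left
    behind at each split, followed by a final row for the last top group.
    """
    rows = []
    for _ in range(levels):
        top_customers = customers * top_share
        top_revenue = revenue * revenue_share
        rest_customers = customers - top_customers
        rest_revenue = revenue - top_revenue
        rows.append((rest_customers, rest_revenue, rest_revenue / rest_customers))
        customers, revenue = top_customers, top_revenue
    rows.append((customers, revenue, revenue / customers))
    return rows


# 10 million customers, $100 million annual revenue, eight successive splits.
rows = pareto_bands(10_000_000, 100_000_000, levels=8)

for users, band_revenue, arpu in rows:
    print(f"{users:>12,.0f} users  ${band_revenue:>14,.0f}  ARPU ${arpu:>10,.2f}")
```

The first row is the bottom 80% of customers, the second row is the 1.6 million users with an ARPU of $10, and the last row is the group of about 26 users at the far end of the curve.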

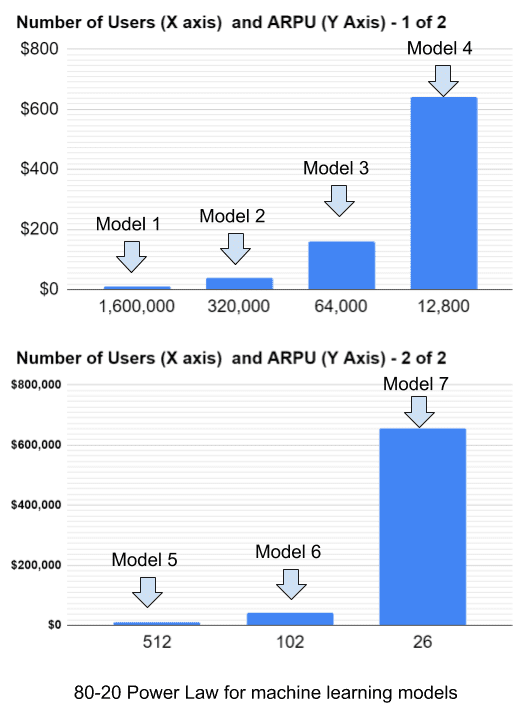

Now when we chart the ARPU against each of the user segments we clearly see the power law at work. Please note that the same chart is split into two due to the exponential nature of power laws.

Now you could ask: this looks interesting, but what is the relevance to machine learning? Indeed, there is a powerful relationship between them. One can build custom machine learning models on each of the sub-segments of customers: one for the 1.6 million customers whose ARPU is $10, another for the 320,000 users whose ARPU is $40, another for the 64,000 users whose ARPU is $160, and so on.

Each of these models is likely to be more accurate than a single model built for all high-value customers. And since each of these sub-segments has different characteristics and a different propensity to buy, different types of models can be built to achieve different goals. Because these customers are the vital few, the range of possibilities is wide.

So we reach some very powerful conclusions:

- Combining the 80-20 principle with machine learning and first principles is very powerful and can help build a solid business case for a machine learning project.

- Applying machine learning to smaller slices of the data, the vital few that matter may lead to more accurate machine learning models. They will amplify the power of machine learning and make the business case even stronger.

- The 80-20 principle is a power law that can be applied almost infinitely. This implies that we may be able to create custom machine learning models on smaller and smaller slices of data on the vital few. The accuracy of the models would keep increasing up to the point that the dataset becomes too small. At that point, the accuracy may start dropping.

One related point: as we apply machine learning models further down the 80-20 power curve, we need a systematic way of managing the growing number of models, and MLOps becomes increasingly important.

Five Whys Technique

Five whys (or 5 whys) is an iterative interrogative technique used to explore the cause-and-effect relationships underlying a particular problem. The primary goal of the technique is to determine the root cause of a defect or problem by repeating the question "Why?".

Each answer forms the basis of the next question. The "five" in the name derives from an anecdotal observation on the number of iterations needed to resolve the problem.

In some cases, the solution to the root cause of a problem could be through AI or machine learning. Let us for a moment assume a hypothetical scenario that the retailer rolled out a machine learning solution to a segment of customers in Table 2.

To increase sales for customers in Box 4 the retailer sent a personalized email with a personalized coupon to customers. Since the retailer knew the likely purchase amount, the retailer decided to give a coupon that was a 20% discount on the likely purchase amount rounded off to the nearest $5.

The customer simply had to make a purchase to redeem the coupon, which came in values such as $5, $10, $15, and so on. The coupon was sent through a highly personalized email to the customer. The coupon was also shown when the customer visited the retailer’s website.

However, after the promotion ended, it was found that the coupon redemption rate was no greater than in the past. The five whys technique can be used to identify potential causes, starting from the problem statement: personalization of coupons did not lead to increased redemption.
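The coupon rule described here is simple arithmetic. A minimal sketch follows; the minimum coupon of one $5 step is an added assumption so that small predicted purchases still receive a coupon.

```python
import math


def coupon_value(likely_purchase_amount, discount_rate=0.20, step=5):
    """20% of the predicted purchase amount, rounded to the nearest $5."""
    raw_discount = likely_purchase_amount * discount_rate
    # Round half up explicitly; Python's round() rounds halves to even.
    rounded = math.floor(raw_discount / step + 0.5) * step
    # Assumption: never issue a coupon smaller than one step ($5).
    return max(step, rounded)


for amount in (10, 25, 60, 70, 100):
    print(f"likely purchase ${amount}: coupon ${coupon_value(amount)}")
```

The only model input is the predicted purchase amount from the regression model, which matters for the root-cause analysis that follows.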

- Why #1 - The personalization was only limited to name and the likely purchase amount.

- Why #2 - The machine learning model only predicted the purchase amount and likelihood of purchase.

- Why #3 - The scope of the machine learning project was limited to only segmenting customers by their likelihood of purchase and the likely purchase amount. Retaining customers by making personalized offers based on past purchase data was out of scope for the machine learning project.

- Why #4 - Only aggregate purchase amounts by year were available in the data platform. The exact purchase history of items bought by the customer was not available in the data platform.

- Why #5 - The data platform was built on a tight budget. Personalization using prior purchase history was considered as a capability that would be added in the future and hence kept out of the scope of the data platform.

Thus, if the data platform had the purchase history, then knowing the likely budget of the customer, a machine learning algorithm could look at the purchase history of similar customers, assign probabilities of purchase to the most popular items the customer has not bought yet, and recommend the few items that the customer is most likely to buy. The coupon could then have gone to the customer alongside a recommendation of the products the customer is most likely to buy.
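A very simple version of this idea can be sketched without any machine learning library. It treats any customer who shares at least one purchased item as "similar" and uses the share of similar customers who bought an item as its purchase probability. Both choices are simplifying assumptions, the data is invented, and filtering by the customer's likely budget is left out.

```python
from collections import Counter


def recommend(customer, purchases, top_n=2):
    """Recommend items the customer has not bought, ranked by estimated probability.

    purchases maps a customer id to the set of items that customer bought.
    """
    own_items = purchases[customer]
    # Assumption: "similar" means sharing at least one purchased item.
    similar = [
        items
        for other, items in purchases.items()
        if other != customer and own_items & items
    ]
    if not similar:
        return []
    counts = Counter(item for items in similar for item in items - own_items)
    scored = [(count / len(similar), item) for item, count in counts.items()]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # highest probability first
    return [(item, probability) for probability, item in scored[:top_n]]


# Hypothetical purchase histories.
purchases = {
    "ann": {"tent", "stove"},
    "bob": {"tent", "stove", "lantern"},
    "cat": {"tent", "lantern", "boots"},
    "dan": {"stove", "lantern"},
    "eve": {"yoga mat"},
}
recommendations = recommend("ann", purchases)
print(recommendations)
```

Note that the function needs item-level purchase history. With only aggregate purchase amounts per year, as in the scenario above, it has nothing to work with.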

This would have increased the probability of the customer redeeming the coupon and being retained as a customer. It follows that the five whys technique could be used to make a business case for enhancing the data platform and using a machine-learning algorithm to make more personalized offers.

The five whys technique can be used by itself or in conjunction with the Fishbone diagram or Ishikawa diagram to find even more potential root-causes of problems. And we will cover that next.

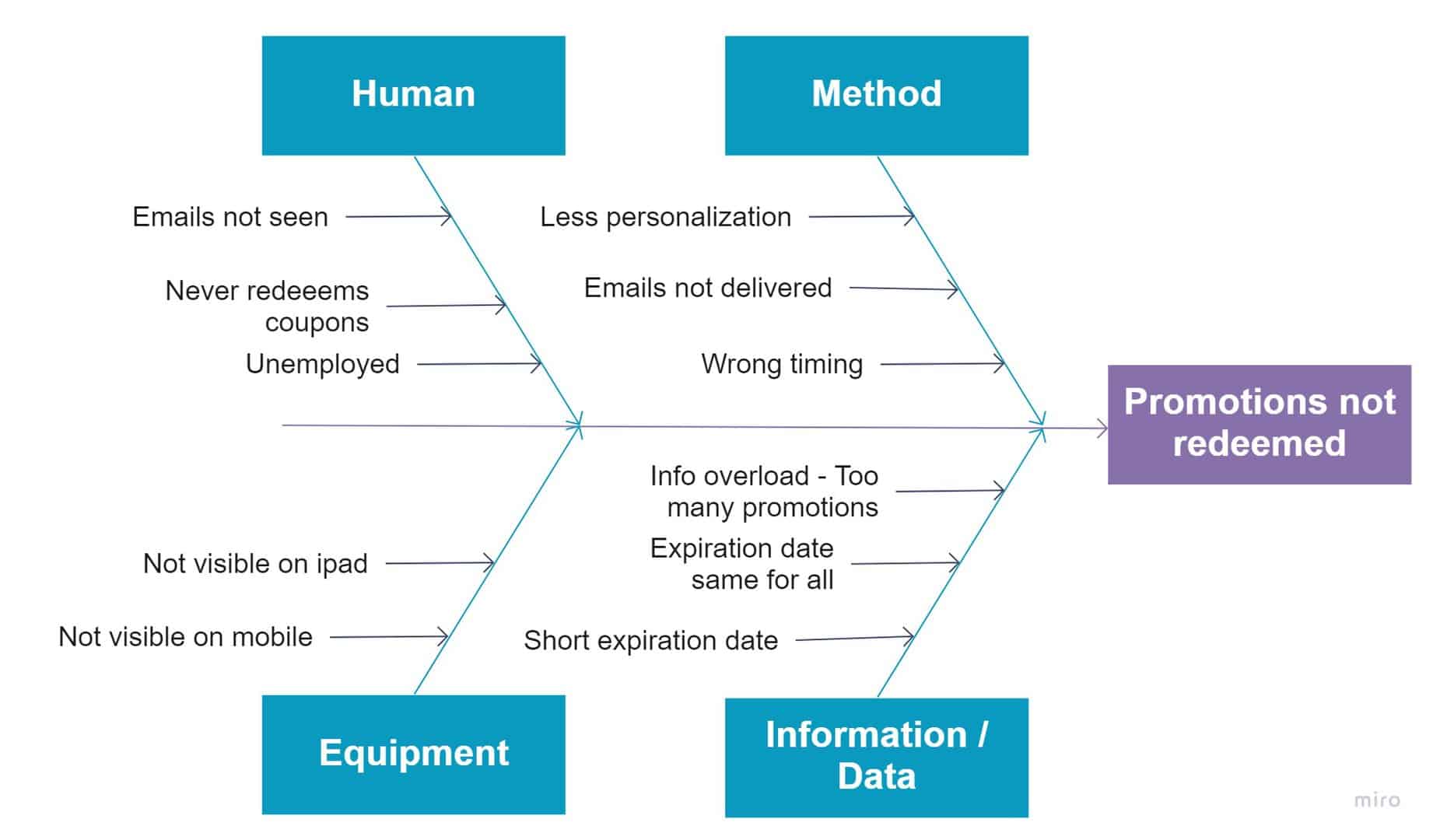

Fishbone Diagrams

Fishbone diagrams or Ishikawa diagrams or cause-and-effect diagrams are causal diagrams created by Kaoru Ishikawa that show the potential causes of a specific event. The defect is shown as the fish's head, facing to the right, with the causes extending to the left as fishbones; the ribs branch off the backbone for major causes, with sub-branches for root-causes, to as many levels as required.

There are different ways of identifying the main causes extending to the left. In manufacturing, the following are used:

- Man/mind power (physical or knowledge work, includes: kaizens, suggestions)

- Machine (equipment, technology)

- Material (includes raw material, consumables, and information)

- Method (process)

- Measurement / medium (inspection, environment)

In Product Marketing, the following are used:

- Product (or service)

- Price

- Place

- Promotion

- People (personnel)

- Process

- Physical evidence

- Performance

Taking the earlier example of the retail promotions not getting redeemed, one can draw a fishbone diagram of the probable causes why the coupons did not get redeemed.

Once the main causes are identified, each of them can be analyzed further with the five whys technique to find the root cause. For example, the issue of the coupon expiration date being the same for all customers could lead to a machine learning project. The problem statement is: the coupon expiration date being the same for all customers led to some customers not redeeming the coupon.

- Why #1 - Some customers redeem the coupon within a few days of receiving the coupon while others shop only during a long weekend and as a result, they do not redeem the coupon.

- Why #2 - If this was known, why was the coupon sent with a fixed expiration date?

- Why #3 - A fixed expiration date coupon was sent because the information on which customers specifically shop mostly during the long weekend was not available.

- Why #4 - Why was the information on the dates when a customer is most likely to buy not available?

- Why #5 - There were no machine learning models available that predicted the days on which the customer is most likely to shop.

We can build a business case for customized coupon expiration dates for customers.

Customers who wait for long weekend shopping seasons to make a purchase are given a coupon that expires a few days after a long weekend shopping season. Customers who don’t wait for long weekend shopping seasons can be given a coupon that expires independently of any long weekend.
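Once a model flags which customers wait for long weekends, the expiry rule itself is straightforward. In the sketch below, the 14-day default validity, the 3-day grace period, and the dates are hypothetical values chosen for illustration.

```python
from datetime import date, timedelta


def coupon_expiry(sent_on, waits_for_long_weekend, next_long_weekend_end,
                  default_days=14, grace_days=3):
    """Pick a coupon expiry date based on the customer's predicted shopping habit."""
    if waits_for_long_weekend:
        # Expire a few days after the long weekend ends.
        return next_long_weekend_end + timedelta(days=grace_days)
    # Otherwise use a fixed validity period from the send date.
    return sent_on + timedelta(days=default_days)


sent_on = date(2024, 8, 20)
long_weekend_end = date(2024, 9, 2)

print(coupon_expiry(sent_on, True, long_weekend_end))
print(coupon_expiry(sent_on, False, long_weekend_end))
```

The `waits_for_long_weekend` flag is the output of the classification model that the fifth why identified as missing.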

Margin of Safety and Outsourcing

Once a problem for AI or machine learning has been identified, the next step is to calculate the Return on Investment or the Return on Capital Employed. Another technique is to calculate the Net Present Value of the project.

If the Net Present Value is positive, then one can proceed with the project. So to estimate the Return on Investment, one needs to estimate the future financial benefits from the project, the future running cost, and the upfront investment needed. Another aspect that needs to be looked into is the overall project risk.
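The calculation can be sketched as follows. The cash flows and the 10% discount rate are hypothetical, and ROI is taken here as the annual net benefit divided by the upfront investment, which is one of several definitions in use.

```python
def net_present_value(upfront_investment, annual_net_benefits, discount_rate):
    """NPV: discounted future net benefits minus the upfront investment.

    annual_net_benefits holds, for each year, the financial benefit minus
    the running cost for that year.
    """
    discounted = sum(
        benefit / (1 + discount_rate) ** year
        for year, benefit in enumerate(annual_net_benefits, start=1)
    )
    return discounted - upfront_investment


def simple_roi(upfront_investment, annual_net_benefit):
    """Annual net benefit as a fraction of the upfront investment (assumed definition)."""
    return annual_net_benefit / upfront_investment


# Hypothetical project: $1,000,000 upfront, $450,000 net benefit per year for 3 years.
npv = net_present_value(1_000_000, [450_000, 450_000, 450_000], discount_rate=0.10)
roi = simple_roi(1_000_000, 450_000)

print(f"NPV: ${npv:,.0f}")
print(f"ROI: {roi:.0%}")
print("proceed" if npv > 0 else "do not proceed")
```

Because the benefits are estimates, it is worth rerunning the calculation with pessimistic inputs to see how quickly the NPV turns negative.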

AI and machine learning projects have two additional kinds of risk above and beyond the risk of execution that is always there in IT projects.

The first risk is that the hypothesis behind the model may not turn out to be true. The second risk is that, even after gathering the training data, we don’t know how well an algorithm will work in practice (the true "risk"), because we don't know the true distribution of the data that the algorithm will work on.

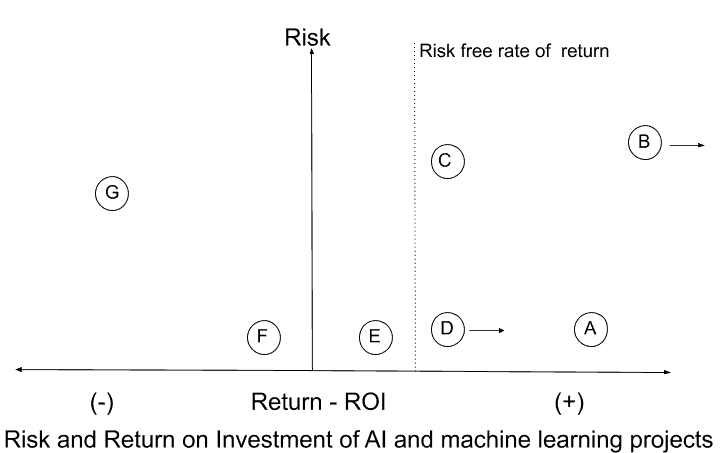

To understand and mitigate these risks let us look at a sample of AI and machine learning projects that we plot against the Risk and the Return on Investment. Keep in mind that the risk and return on investment cannot be precisely estimated.

We also have the risk-free return, the rate of return we would get by investing our capital in risk-free instruments such as a treasury bond. At a minimum, the ROI should exceed the risk-free rate of return. Organizations may have their own hurdle rate for internal projects, and that might be higher than the risk-free rate of return.

When we overlay the projects against Risk and ROI, we have some interesting observations.

Let us assume that the risk-free rate is the hurdle rate for the organization so that our analysis is simpler. It's obvious that projects G, F, and E are not worthy of investment as their returns are less than the risk-free rate of return and they carry additional risk.

That leaves projects A, B, C and D for consideration. Project A has a reasonable return and low risk, so one can proceed with it. Projects C and D have returns higher than the risk-free return rate. However, project C seems too risky and can be dropped.

And what do we do about projects B and D? One approach is to reduce the risk of both projects and see if that helps us make a choice. But, as we saw earlier, reducing the risk for an AI or machine learning project could be a lot harder than reducing the risk of a traditional IT project. For a machine learning project to succeed, all three events must work in our favor:

- IT project execution, such as data engineering (known risk)

- The machine learning model should be similar to the original business case hypothesis (unknown risk)

- The algorithm should work well in practice (unknown risk)

The IT project execution risk is known but the other two risks are unknown. And unfortunately, as humans, we are subject to a cognitive bias known as the Conjunctive Event bias.

We overestimate the probability of conjunctive events, that is, of several things all going right together. With this insight, the travails of the autonomous car projects that we covered earlier are more easily understood. While the IT project risk can be mitigated, it is a lot harder to mitigate the other two risks.
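A quick calculation shows why the bias matters. If the three events are assumed to be independent, the chance that all of them succeed is the product of their individual chances. The probabilities below are hypothetical.

```python
import math


def joint_success_probability(probabilities):
    """Probability that all events succeed, assuming they are independent."""
    return math.prod(probabilities)


# Hypothetical estimates: execution, model matches hypothesis, works in practice.
estimates = [0.9, 0.7, 0.7]
overall = joint_success_probability(estimates)

print(f"individual estimates: {estimates}")
print(f"chance that all three succeed: {overall:.1%}")
```

Each step looks likely on its own, yet the project as a whole succeeds less than half the time. Intuition tends to anchor on the individual numbers rather than on the product.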

So how do we make a business case for a project where two risks are unknown and cannot be fully mitigated? One approach is to take the Margin of Safety approach. This would mean that the ROI should be higher. How can the ROI be increased?

Let us say that the estimated ROI of a project is 12%. If the ROI becomes 24%, the project becomes more compelling. There are two ways to make the ROI higher. One is to enhance the capabilities of the project, but that comes with added risk and higher investment. The other is to simply halve the investment in the project by, say, outsourcing a part of it.

This helps in two ways. First, it reduces the investment by half, so even if the project fails, the loss is only half. Second, if the project succeeds, the ROI is now double. So outsourcing on AI and machine learning projects is very powerful. It is a no-brainer, provided it does not increase execution risk. It provides a margin of safety, reduces the investment needed, and could almost double the ROI when the project succeeds.

Thus outsourcing could push projects B and D to the right where there is a higher Margin of Safety and ROI. Our experience with AI projects is that 50-70% of the upfront investment on AI projects goes into Data Engineering. Model building, business analysis, storage costs, CPU/GPU cost, software licenses, and so on make up between 30% and 50% of the costs. This is again consistent with the 80-20 principle.
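The effect of halving the investment can be checked with a few lines of arithmetic. The sketch assumes ROI is measured as annual net benefit divided by the upfront investment, and that outsourcing changes neither the benefit nor the execution risk. The dollar amounts are hypothetical.

```python
def roi(annual_net_benefit, investment):
    """Annual net benefit as a fraction of the investment (assumed definition)."""
    return annual_net_benefit / investment


annual_net_benefit = 120_000     # hypothetical
in_house_investment = 1_000_000  # hypothetical
outsourced_investment = in_house_investment / 2

in_house_roi = roi(annual_net_benefit, in_house_investment)
outsourced_roi = roi(annual_net_benefit, outsourced_investment)

print(f"in-house ROI: {in_house_roi:.0%}, loss if project fails: ${in_house_investment:,.0f}")
print(f"outsourced ROI: {outsourced_roi:.0%}, loss if project fails: ${outsourced_investment:,.0f}")
```

Under these assumptions the upside doubles while the downside halves, which is the margin of safety in numerical form.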

Data Engineering is an activity that is an easy choice for outsourcing and can be outsourced to increase the Margin of Safety and the Return on Investment.

How not to build a case for AI and machine learning projects

Now that we have looked at some ways to build a business case for AI and machine learning projects, we will look at some common and obvious pitfalls to avoid.

- Choosing very complex projects: Starting with a complex AI and machine learning project is a sure way to build a weak business case. Thus it is best to find simpler use cases upfront with proven AI and machine learning technologies.

- Not involving all stakeholders: Not involving all stakeholders is a sure way to make a flawed business case. End-users have to see value in the use case. IT should be fully involved so that the data engineering, infrastructure, and model building effort and cost can be estimated accurately. Finance should understand the risks and the ROI.

- Ignoring success stories and failures: Success stories and failures give us a clue on why some things work and some don’t. Ignoring them is a sure way to put up a weak case.

- Simply copying an IT Project business case template: Copying an earlier IT project business case template is another sure way to make an incomplete business case for an AI or machine learning project. AI and machine learning projects have a lot more nuances and risks over and above traditional IT projects and using an old template will lead to an incomplete analysis.

- Glossing over the risks and complexity: AI and machine learning projects have added layers of complexity and risk over and above IT projects. Glossing over them is a sure way to ensure that senior management who evaluate the business case do not appreciate the business value, the complexity and the risks.

Checklist for building a business case for an AI or Machine learning project


So we covered a lot of ground on building a business case for an AI or Machine learning project. Here is a simple checklist for building your business case:

- Apply First principles. Deconstruct your business problem and also the branch of AI you plan to use. Reconstruct them together to build a business case.

- Make the business case compelling by applying the 80/20 principle, five whys or the Fishbone diagram.

- Make the business case fail-safe by applying a Margin of Safety. Outsource if necessary to increase the Margin of Safety.

- Avoid common pitfalls by reviewing ‘How not to build a case for AI and machine learning projects’.

- Make a compelling presentation or put together a compelling business case to your management. Remember to include some success stories from your industry or elsewhere.

So good luck in building a business case for your AI or machine learning project.
