How to Do Clinical Document Comprehension Using Spark NLP: A Step-by-Step Guide

TL;DR

In this article, we walk through end-to-end Clinical Document Comprehension using Spark NLP for Healthcare. Healthcare systems are becoming increasingly complex. Most of them are moving toward data-driven decision making, and data analytics plays a pivotal role in this shift. However, data in healthcare systems is often unstructured, and a large part of it comes in the form of clinical documents such as plan of care documents, discharge summaries, release notes, and doctors' prescriptions. Vital information is often missed because these documents are not well understood. Clinical Document Comprehension is a multi-step workflow, and the rest of this article walks through such a workflow for extracting vital information from clinical documents.

Step 1: Spark OCR to Extract Text

Clinical documents come in many forms, such as scanned images, PDFs, and Word documents. The first step in clinical document comprehension is to extract all of the text from such documents; this is where the annotation process begins. Spark OCR has several features for extracting text from PDFs, images, and other document types, and it reports state-of-the-art confidence levels for the extracted text. The two most important parts of this step are:

- Noise Reduction: Most clinical images or PDFs are noisy. This step cleans the document and makes it ready for extraction.

- Skew Correction: Scanned images are often skewed (slightly rotated). It is advisable to apply skew correction to all documents.
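Spark OCR performs both operations for you, and its API is not shown in this article. To make the two ideas concrete, here is a library-independent sketch in plain Python: a 3x3 median filter, a classic way to remove salt-and-pepper noise, and a skew estimate obtained by fitting a straight line through points sampled along one text baseline. The function names and the assumption that baseline points are already available are illustrative; this is not how Spark OCR is implemented.

```python
import math

# Illustrative sketch only: not Spark OCR's implementation or API.

def median_filter_3x3(img):
    """Denoise a grayscale image (list of equal-length rows of ints).

    Each output pixel is the median of its 3x3 neighbourhood; at the borders
    only the neighbours that exist are used (upper median for even counts).
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = sorted(
                img[j][i]
                for j in range(max(0, y - 1), min(h, y + 2))
                for i in range(max(0, x - 1), min(w, x + 2))
            )
            out[y][x] = window[len(window) // 2]
    return out


def estimate_skew_degrees(baseline_points):
    """Least-squares slope of (x, y) points on one text baseline, as degrees.

    Assumes the points were already detected and do not all share the same x.
    """
    n = len(baseline_points)
    mean_x = sum(x for x, _ in baseline_points) / n
    mean_y = sum(y for _, y in baseline_points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in baseline_points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in baseline_points)
    return math.degrees(math.atan(sxy / sxx))


# A white 5x5 page with one black "speck" in the middle.
page = [[255] * 5 for _ in range(5)]
page[2][2] = 0
clean = median_filter_3x3(page)
print(clean[2][2])  # 255: the isolated speck is removed

# Baseline drifting 5 px down for every 100 px across the page.
angle = estimate_skew_degrees([(0, 0), (100, 5), (200, 10), (300, 15)])
print(round(angle, 2))  # 2.86; rotating the page by -angle would deskew it
```

In a real pipeline the baseline points would come from a detection step (for example projection profiles or a Hough transform), and the page would then be rotated by the negative of the estimated angle.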

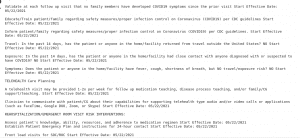

Below is a sample of text extracted from the PDF of a plan of care document.

We can also look at the confidence score for each page of the document to see how well the OCR performs. For the same document, the scores after noise removal and skew correction are given below.
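The article does not say how Spark OCR computes its per-page scores. A common approach, assumed here purely for illustration, is to average the word-level confidences that the OCR engine reports for each page. The sketch below does that and compares the scores before and after preprocessing; the input format, function names, and numbers are all made up.

```python
from statistics import mean

# Illustrative sketch: assumes a per-page score is the mean of word-level
# OCR confidences (0-100). Spark OCR's own scoring may differ.

def page_confidences(words):
    """words: iterable of (page_number, confidence) pairs -> {page: mean confidence}."""
    by_page = {}
    for page, conf in words:
        by_page.setdefault(page, []).append(conf)
    return {page: mean(confs) for page, confs in sorted(by_page.items())}


def improvement(before, after):
    """Per-page change in mean confidence after noise removal and skew correction."""
    return {page: after[page] - before[page] for page in before if page in after}


# Made-up word confidences for a two-page scan, before and after preprocessing.
raw = [(1, 62.0), (1, 70.0), (2, 55.0), (2, 61.0)]
cleaned = [(1, 90.0), (1, 94.0), (2, 88.0), (2, 92.0)]

before = page_confidences(raw)     # {1: 66.0, 2: 58.0}
after = page_confidences(cleaned)  # {1: 92.0, 2: 90.0}
print(improvement(before, after))  # {1: 26.0, 2: 32.0}
```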

Step 2: De-Identification of PHI

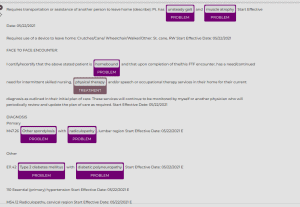

This is an optional step that may or may not be required, depending on the study one is doing. It masks all Protected Health Information (PHI) present in the text. End-to-end masking of PHI is available at a granular level and can identify and de-identify as many as 23 entity types. Spark NLP also provides features to re-identify the masked entities.
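Spark NLP for Healthcare finds PHI with its own NER models; the sketch below leaves detection out and assumes the PHI spans are already known. It illustrates the two ideas in the paragraph above: masking each entity with a label-based placeholder, and keeping a mapping so the masked entities can be re-identified later. The function names, labels, and the `<LABEL_n>` placeholder format are hypothetical, not Spark NLP's de-identification API.

```python
# Illustrative sketch of span-based PHI masking with reversible placeholders.
# Not Spark NLP's de-identification API; labels and placeholder format are hypothetical.

def mask_phi(text, entities):
    """Replace each (start, end, label) span with a placeholder such as <NAME_1>.

    Spans must not overlap. Returns the masked text and a placeholder -> original
    mapping, which should be stored securely if re-identification is needed.
    """
    pieces, mapping, counts, cursor = [], {}, {}, 0
    for start, end, label in sorted(entities):
        counts[label] = counts.get(label, 0) + 1
        placeholder = f"<{label}_{counts[label]}>"
        mapping[placeholder] = text[start:end]
        pieces.append(text[cursor:start])
        pieces.append(placeholder)
        cursor = end
    pieces.append(text[cursor:])
    return "".join(pieces), mapping


def reidentify(masked_text, mapping):
    """Restore the original values from the placeholder mapping."""
    for placeholder, original in mapping.items():
        masked_text = masked_text.replace(placeholder, original)
    return masked_text


note = "John Smith was seen on 2021-03-04 at Boston General."
found = [("John Smith", "NAME"), ("2021-03-04", "DATE"), ("Boston General", "HOSPITAL")]
spans = [(note.index(s), note.index(s) + len(s), label) for s, label in found]

masked, mapping = mask_phi(note, spans)
print(masked)                       # <NAME_1> was seen on <DATE_1> at <HOSPITAL_1>.
print(reidentify(masked, mapping))  # John Smith was seen on 2021-03-04 at Boston General.
```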

Step 3: Clinical Entity Extraction

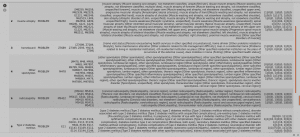

After the text has been extracted and cleaned in the previous steps, the next step is to find all the named entities in the text. These entities include names of diseases, treatments, medicines, and dosages, to name a few. Spark NLP models are trained on large corpora of clinical documents, and dedicated medication models are trained to extract posology-related clinical entities.

Entity recognition is one of the most important steps in any text annotation process. The extracted entities can later be used to build features for modelling and/or information querying. For example, posology entities tell us which medications a patient is given, the dosage of each medicine, the form in which it is taken, and so on. Several models were applied to the clinical text extracted earlier. Below is a snippet of output from the English clinical entity recognition model.
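NER models in Spark NLP tag tokens in IOB format, and pipelines usually add a converter stage that merges those tags into entity chunks. The plain-Python function below is a sketch of that merging idea (not the library's code), followed by a simple per-label count of the kind that could feed a downstream model. The tokens, tags, and PROBLEM/TREATMENT labels are made-up examples.

```python
from collections import Counter

# Sketch of merging token-level IOB tags into entity chunks; not Spark NLP's code.

def bio_to_chunks(tokens, tags):
    """Merge IOB tags (B-X, I-X, O) into (chunk_text, label) pairs."""
    chunks, current, label = [], [], None
    for token, tag in zip(tokens, tags):
        if tag.startswith("I-") and tag[2:] == label:
            current.append(token)  # continue the open chunk
            continue
        if current:  # any other tag closes the open chunk
            chunks.append((" ".join(current), label))
        if tag == "O":
            current, label = [], None
        else:  # B-X, or an I-X that starts a new chunk
            current, label = [token], tag[2:]
    if current:
        chunks.append((" ".join(current), label))
    return chunks


tokens = "Patient has type 2 diabetes treated with metformin .".split()
tags = ["O", "O", "B-PROBLEM", "I-PROBLEM", "I-PROBLEM", "O", "O", "B-TREATMENT", "O"]

chunks = bio_to_chunks(tokens, tags)
print(chunks)  # [('type 2 diabetes', 'PROBLEM'), ('metformin', 'TREATMENT')]

# A simple per-document feature: how many entities of each type were found.
features = Counter(label for _, label in chunks)
print(dict(features))  # {'PROBLEM': 1, 'TREATMENT': 1}
```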

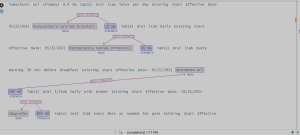

The model identifies problems and their related treatments in the text. Another interesting result, on medications, is shown below: the model not only identifies the names of the medicines but also goes a level deeper and extracts each medicine's route, form, strength, dose, and frequency.
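One simple way to turn posology entities into a per-medication record is to attach each attribute (strength, form, route, dose, frequency) to the most recent drug mention. The sketch below assumes the entity chunks arrive in document order with labels such as DRUG, STRENGTH, FORM, ROUTE, DOSAGE, and FREQUENCY; both the labels and the heuristic are assumptions for illustration, not how Spark NLP links these entities.

```python
# Illustrative heuristic: attach each posology attribute to the nearest preceding drug.
# Labels are assumed for this example; relation extraction (Step 5) is a more
# reliable way to link attributes to drugs.

def group_posology(chunks):
    """chunks: (text, label) pairs in document order -> list of per-drug dicts."""
    records = []
    for text, label in chunks:
        if label == "DRUG":
            records.append({"DRUG": text})
        elif records:
            # Keep the first value seen for each attribute of the current drug.
            records[-1].setdefault(label, text)
        # Attributes that appear before any drug mention are ignored.
    return records


chunks = [
    ("metformin", "DRUG"), ("500 mg", "STRENGTH"), ("tablet", "FORM"),
    ("by mouth", "ROUTE"), ("twice daily", "FREQUENCY"),
    ("lisinopril", "DRUG"), ("10 mg", "STRENGTH"), ("once daily", "FREQUENCY"),
]
for record in group_posology(chunks):
    print(record)
# {'DRUG': 'metformin', 'STRENGTH': '500 mg', 'FORM': 'tablet', 'ROUTE': 'by mouth', 'FREQUENCY': 'twice daily'}
# {'DRUG': 'lisinopril', 'STRENGTH': '10 mg', 'FREQUENCY': 'once daily'}
```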

Step 4: Standardizing Disease Codes and Medication Codes

Disease names are standardized across healthcare systems as ICD codes, which are used internationally. For any healthcare document, it is very important to first identify the diseases mentioned and then find their associated ICD codes. Spark NLP supports ICD-10 codes, which are widely accepted for coding specific diseases. In this step, the whole document is processed to find the diseases and their codes. In recent releases, Spark NLP also provides a feature that returns a Hierarchical Condition Category (HCC) score. HCC is a risk-adjustment scheme that groups diagnoses into categories used to estimate a patient's expected future healthcare costs, such as the cost of insurance claims. Along with the resolved ICD code for a disease, the model also returns other probable codes (alternative resolutions) for the same disease.
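To illustrate what resolving a disease mention to an ICD-10 code, with alternative candidates, looks like, the sketch below ranks a handful of ICD-10-CM codes by string similarity using Python's standard `difflib`. This is a deliberately simple stand-in: the five-code dictionary is a tiny illustrative subset, the function name is hypothetical, and string similarity is not the technique Spark NLP uses (as far as we understand, its resolvers compare embeddings learned from clinical text).

```python
from difflib import SequenceMatcher

# Tiny illustrative subset of ICD-10-CM; a real resolver searches the full code set.
ICD10_SUBSET = {
    "E11.9": "type 2 diabetes mellitus without complications",
    "E11.65": "type 2 diabetes mellitus with hyperglycemia",
    "E10.9": "type 1 diabetes mellitus without complications",
    "I10": "essential (primary) hypertension",
    "J45.909": "unspecified asthma, uncomplicated",
}


def resolve_icd10(mention, k=3):
    """Return the top-k (code, description, score) candidates for a disease mention.

    The first candidate plays the role of the resolved code; the rest are the
    alternative resolutions. Scores are difflib similarity ratios in [0, 1].
    """
    mention = mention.lower()
    scored = [
        (SequenceMatcher(None, mention, desc).ratio(), code, desc)
        for code, desc in ICD10_SUBSET.items()
    ]
    scored.sort(reverse=True)
    return [(code, desc, round(score, 3)) for score, code, desc in scored[:k]]


print(resolve_icd10("hypertension")[0])
# ('I10', 'essential (primary) hypertension', 0.545)
print([code for code, _, _ in resolve_icd10("type 2 diabetes mellitus")])
# ['E11.65', 'E11.9', 'E10.9']
```

Note that plain string similarity ranks E11.65 (with hyperglycemia) above E11.9 for an unqualified mention, simply because its description is shorter. This is one reason to return several candidate codes rather than a single answer.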

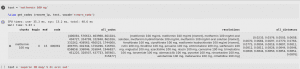

Similarly, medication names are mapped to standardized RxNorm codes. The snippet below shows the RxNorm mapping.

The model returns one primary RxNorm code for each medicine, along with the RxNorm codes of related medicines in the same family.
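The article does not say how the related family codes are chosen. As a hedged illustration, the sketch below picks a primary concept by word overlap with the mention and treats every other concept that shares the same active ingredient as its family. The catalog, its codes, and the function name are invented placeholders, not real RxNorm identifiers (RXCUIs) or a Spark NLP API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DrugConcept:
    code: str        # hypothetical placeholder, NOT a real RxNorm RXCUI
    name: str
    ingredient: str


# Invented mini-catalog for illustration only.
CATALOG = [
    DrugConcept("RX-A1", "metformin 500 mg oral tablet", "metformin"),
    DrugConcept("RX-A2", "metformin 850 mg oral tablet", "metformin"),
    DrugConcept("RX-A3", "metformin 500 mg extended release oral tablet", "metformin"),
    DrugConcept("RX-B1", "lisinopril 10 mg oral tablet", "lisinopril"),
]


def map_to_rxnorm(mention):
    """Return (primary_code, family_codes) for a medication mention."""
    tokens = set(mention.lower().split())

    def overlap(concept):
        return len(tokens & set(concept.name.split()))

    primary = max(CATALOG, key=overlap)
    if overlap(primary) == 0:
        return None, []  # nothing in the catalog resembles the mention
    family = [c.code for c in CATALOG
              if c.ingredient == primary.ingredient and c != primary]
    return primary.code, family


print(map_to_rxnorm("metformin 850 mg tablet"))  # ('RX-A2', ['RX-A1', 'RX-A3'])
print(map_to_rxnorm("lisinopril 10 mg"))         # ('RX-B1', [])
```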

Step 5: Resolving Entity Relationships

The final step in the workflow is to identify the relationships between the extracted entities. Once all the previous steps are complete, this step finds the clinical relationships between entities, which yields the most comprehensive annotation. An entity can be related to other entities anywhere in the text; examples of such relationships are DRUG-STRENGTH and DISEASE-TREATMENT. The relation model can also find temporal relationships between entities, such as whether a clinical event occurred “before” or “after” a disease was diagnosed, or whether some other condition was present or absent during treatment. Below, we use the same text to identify relationships; a short snippet of the extracted relationships is shown for illustration.
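Relation extraction is commonly framed as classifying candidate pairs of entities. The sketch below shows only the candidate-generation part, under two assumed filters: a whitelist of entity-type pairs (using the DRUG-STRENGTH and DISEASE-TREATMENT examples from the paragraph above) and a maximum token distance between the two entities. The classifier that would then label each pair is out of scope, and the function and parameter names are hypothetical, not Spark NLP's relation extraction API.

```python
from itertools import combinations

# Hypothetical configuration: which (head, tail) entity types may be related.
ALLOWED_PAIRS = {("DRUG", "STRENGTH"), ("DISEASE", "TREATMENT")}


def candidate_relations(entities, max_distance=5):
    """entities: (text, label, token_position) triples.

    Returns (relation_type, head_text, tail_text) candidates that a relation
    classifier would then score. Pairs are oriented to match ALLOWED_PAIRS.
    """
    candidates = []
    for a, b in combinations(entities, 2):
        if (a[1], b[1]) not in ALLOWED_PAIRS:
            if (b[1], a[1]) in ALLOWED_PAIRS:
                a, b = b, a  # flip so the head type comes first
            else:
                continue  # this type pair is never related
        if abs(a[2] - b[2]) <= max_distance:
            candidates.append((f"{a[1]}-{b[1]}", a[0], b[0]))
    return candidates


entities = [
    ("diabetes", "DISEASE", 3), ("insulin", "TREATMENT", 5),
    ("metformin", "DRUG", 9), ("500 mg", "STRENGTH", 10),
    ("lisinopril", "DRUG", 20),
]
for candidate in candidate_relations(entities):
    print(candidate)
# ('DISEASE-TREATMENT', 'diabetes', 'insulin')
# ('DRUG-STRENGTH', 'metformin', '500 mg')
```

The distance cutoff is what keeps "500 mg" from being paired with the later, unrelated "lisinopril" mention; a smaller cutoff trades recall for fewer candidates to classify.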

Adverse drug events (ADEs) and side effects are shown below.

The output above shows the ADE relations between the medicines and their side effects.

Please bear in mind that the steps above are only a general guideline for clinical document comprehension. Additional stages can be added to the pipeline for use-case-specific purposes.

Spark NLP for Healthcare is one of the flagship libraries from John Snow Labs and has pushed healthcare analytics and clinical document comprehension to a new level. It makes the process easy and complete, and it is truly a state-of-the-art tool.
