Patient Appointment No-Shows Prediction

TL;DR

Although the documented rates of missed appointments vary between countries, appointment-breaking behavior is a widespread, global issue. Studies show that no-show rates range from 15% to 30% in general medicine clinics and urban community centers, whereas in primary care they can be as high as 50%. Unused appointment slots reduce the quality of patient care, access to services and provider productivity, while increasing losses due to follow-up costs. According to Medbridge Transport, a company that provides transportation services to ambulatory surgery centers (ASCs) in Houston, patients who don't keep their appointments cost the healthcare industry roughly $150 billion annually! Here are five statistics from a Medbridge Transport white paper:

- About 3.6 million U.S. patients miss their appointments due to transportation issues

- Patients point to transportation as the reason for missing an appointment 67 percent of the time

- On average, no-shows cost a single-physician medical practice $150,000 annually

- Medical practices actively working to minimize no-shows can reduce no-shows by up to 70 percent

- A practice with multiple physicians could have 14,000 timeslots go unfilled each year

Machine learning techniques can be used to predict the probability of no-shows in advance and help reduce them, saving time, hospital and clinic resources, and money.

The Objective of the Study

- Predict the probability of No-Shows from the patient’s appointment information

- Identify the chief reason for No-Shows amongst patients in the given dataset

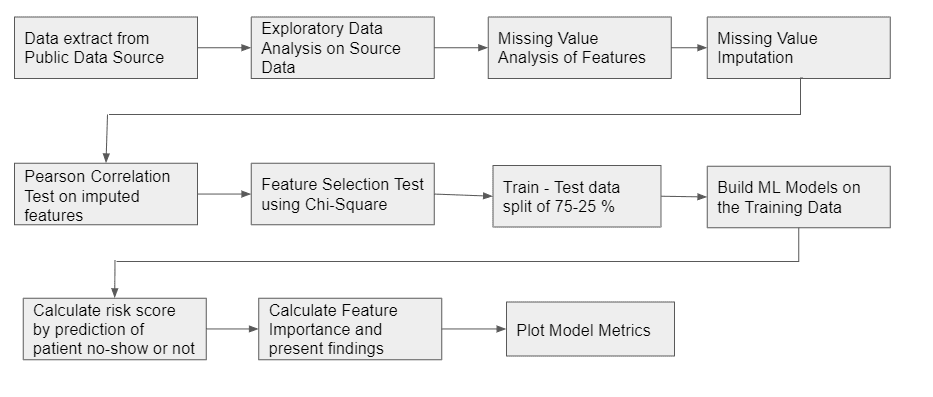

Approach

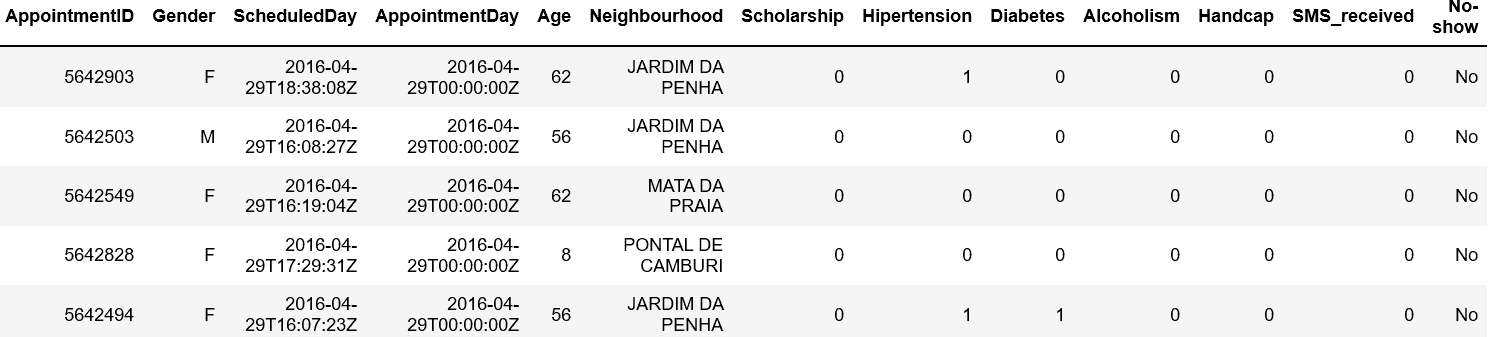

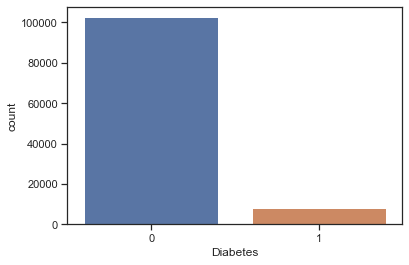

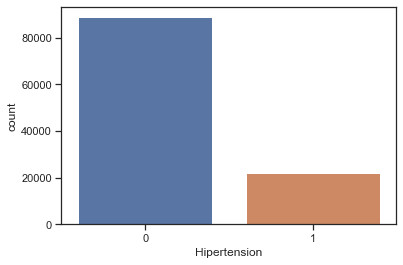

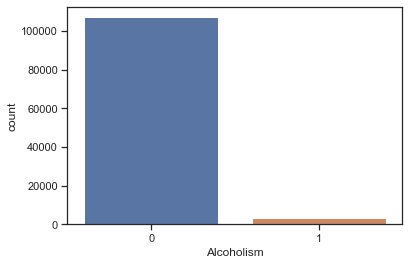

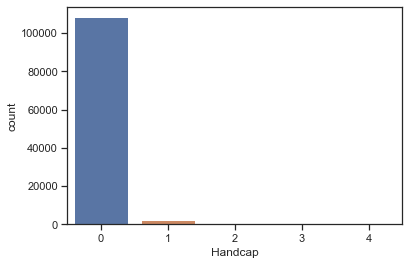

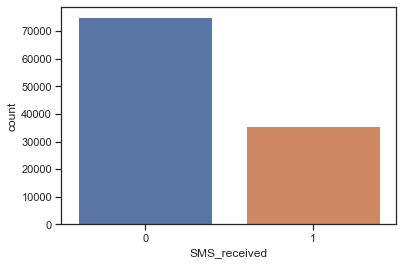

Data Description

The data used for the case study was extracted from a public dataset hosted on Kaggle. The dataset is labeled and records whether each patient showed up for the appointment. The mean age of patients in the dataset was 37.08 ± 23.11 years, and the gender ratio (male:female) was 34:66. Apart from age and gender, the dataset contains Hypertension, Diabetes, Handicap, Scholarship, SMS_Received, Neighbourhood, Appointment day, Schedule Day, AppointmentID, PatientID and Alcoholism, along with the No-Show label. While most of the variables were coded, the No-Show label was in the form Yes/No. There were 110,527 medical appointments from a total of 62,299 patients. A sample snapshot of the raw dataset is shown in Figure 1.

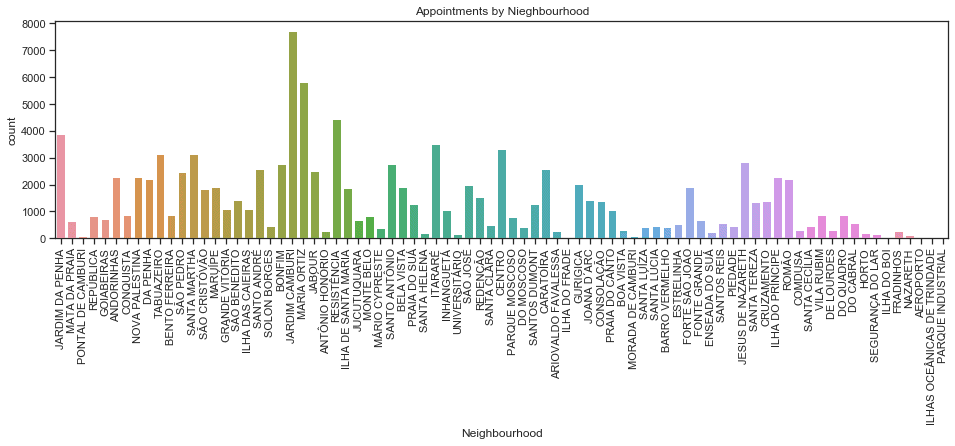

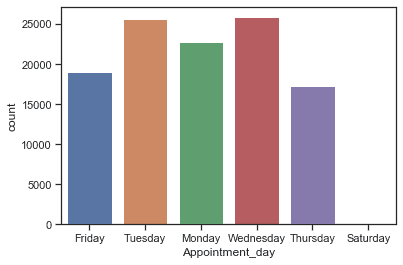

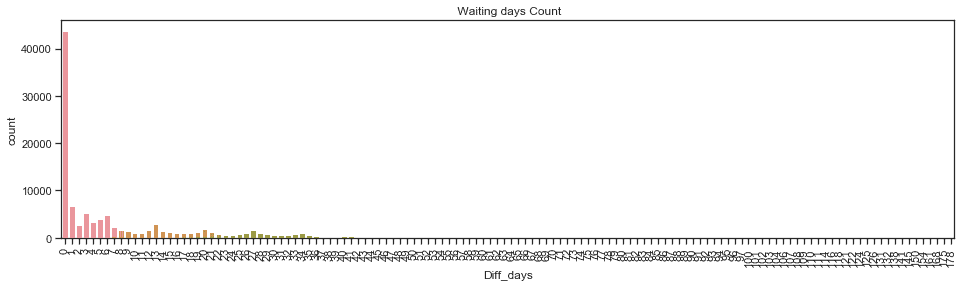

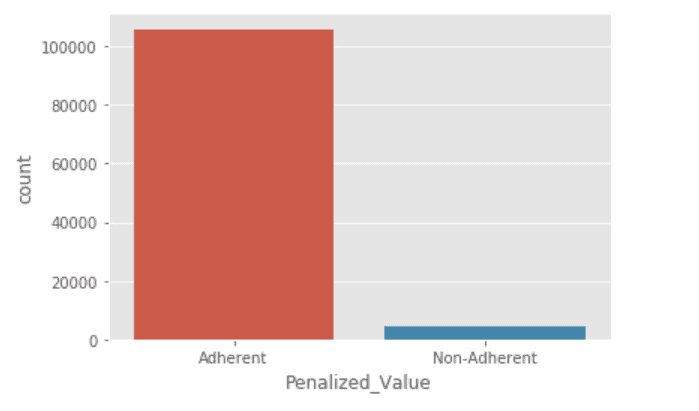

Apart from the variables present in the dataset, three further variables were computed: day difference, appointment day of the week, and a penalized value. Day difference is the waiting time before the patient visits the health center, computed as the difference between the appointment day and the scheduled day. The appointment day of the week is the day name of the appointment day. The penalized value was computed as a function of the patient's adherence to appointments. Each patient has a value in the range 0-17. A value of 0 is the baseline, assigned whether or not the patient adhered to the appointment; each increment above 0 counts one further non-adherence from that point on. Note that there were no missing values in the dataset.

Univariate Data Analysis
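As a starting point for looking at variables one at a time, the three derived variables described above can be computed along the following lines. This is a minimal sketch on made-up rows, not the study's code. The field names (`patient_id`, `scheduled`, `appointment`, `no_show`) are hypothetical, and the penalized value is read here as "number of earlier no-shows by the same patient", which is one possible interpretation of the description.

```python
from collections import defaultdict
from datetime import date

DAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday",
             "Friday", "Saturday", "Sunday"]

# Made-up rows standing in for the real data; field names are illustrative.
appointments = [
    {"patient_id": 1, "scheduled": date(2016, 4, 29), "appointment": date(2016, 5, 2), "no_show": False},
    {"patient_id": 1, "scheduled": date(2016, 5, 3), "appointment": date(2016, 5, 10), "no_show": True},
    {"patient_id": 1, "scheduled": date(2016, 5, 11), "appointment": date(2016, 5, 11), "no_show": True},
    {"patient_id": 1, "scheduled": date(2016, 5, 12), "appointment": date(2016, 5, 20), "no_show": False},
    {"patient_id": 2, "scheduled": date(2016, 5, 4), "appointment": date(2016, 5, 6), "no_show": True},
]


def add_derived_features(rows):
    """Return copies of the rows with the three derived variables added."""
    prior_misses = defaultdict(int)  # patient_id -> no-shows seen so far
    enriched = []
    # Process each patient's appointments in chronological order.
    for row in sorted(rows, key=lambda r: (r["patient_id"], r["appointment"])):
        new_row = dict(row)
        # Waiting time in days between booking and the appointment itself.
        new_row["day_difference"] = (row["appointment"] - row["scheduled"]).days
        # Day name of the appointment day (date.weekday(): Monday == 0).
        new_row["weekday"] = DAY_NAMES[row["appointment"].weekday()]
        # Assumed reading of the penalized value: misses before this visit.
        new_row["penalized_value"] = prior_misses[row["patient_id"]]
        if row["no_show"]:
            prior_misses[row["patient_id"]] += 1
        enriched.append(new_row)
    return enriched


features = add_derived_features(appointments)
for f in features:
    print(f["patient_id"], f["day_difference"], f["weekday"], f["penalized_value"])
```

Under this reading a patient's first appointment always gets a penalized value of 0, whatever its outcome, and the value only grows after a missed appointment.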

Correlation Plot

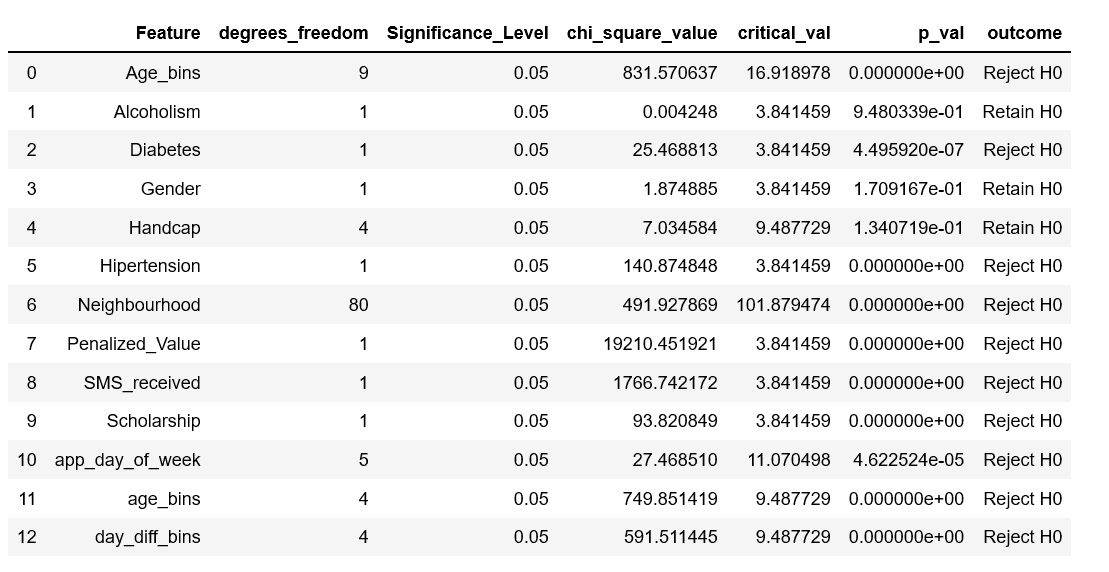

Hypothesis Testing: AppointmentID, Appointment day and Scheduled day were either running numbers or raw dates, which would not have any impact on the study, so we excluded them from modeling. PatientID was used later only to map the model's No-Show predictions back to patients. A chi-square test was performed on the rest of the variables at a 95% confidence level (a significance level of 0.05) to determine whether they could be used as predictor variables.

Null Hypothesis: The variables in each predictor variable/response variable pair are independent of each other.

The results of the hypothesis test are shown in the table below.

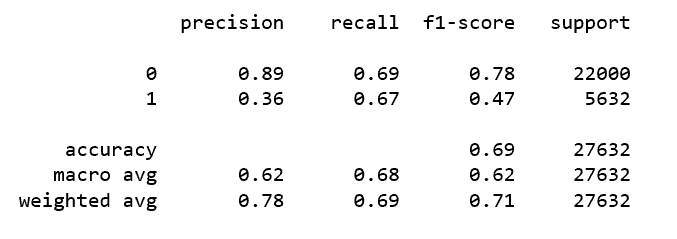

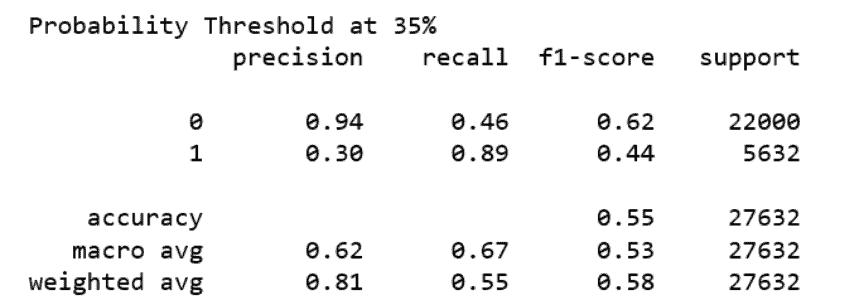
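The chi-square test of independence used above can be sketched in a few lines for the simplest case of a binary predictor against the binary No-Show label. The data below is made up for illustration and says nothing about the real dataset. The function name `chi_square_2x2` is hypothetical, and no continuity correction is applied. In practice one would more likely call `scipy.stats.chi2_contingency`, which handles larger tables and applies Yates' correction to 2x2 tables by default.

```python
import math
from collections import Counter


def chi_square_2x2(feature, label):
    """Pearson chi-square test of independence for two binary variables.

    Returns (statistic, p_value). A 2x2 table has 1 degree of freedom,
    for which the p-value equals erfc(sqrt(statistic / 2)).
    """
    feature_totals = Counter(feature)
    label_totals = Counter(label)
    if len(feature_totals) != 2 or len(label_totals) != 2:
        raise ValueError("this sketch only handles two binary variables")

    n = len(feature)
    observed = Counter(zip(feature, label))
    statistic = 0.0
    for f_value, f_count in feature_totals.items():
        for l_value, l_count in label_totals.items():
            # Expected cell count if the two variables were independent.
            expected = f_count * l_count / n
            statistic += (observed[(f_value, l_value)] - expected) ** 2 / expected
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Made-up example: 20 rows with the flag set, 20 without.
flag = [1] * 20 + [0] * 20
no_show = [1] * 12 + [0] * 8 + [1] * 4 + [0] * 16

statistic, p_value = chi_square_2x2(flag, no_show)
alpha = 0.05  # significance level matching a 95% confidence level
print(f"chi2={statistic:.3f}, p={p_value:.4f}, reject H0: {p_value < alpha}")
```

A p-value below 0.05 rejects the null hypothesis of independence, which is the criterion for keeping a variable as a predictor.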

From the results, Alcoholism, Handicap and Gender showed no significant association with the response variable and were therefore not included as model inputs. The remaining variables were used as inputs to the model.

Training and Testing Data

The Yes:No ratio of 20:80 in No-Show indicates that the dataset is imbalanced. Best practice is to either undersample the majority class or use a more powerful algorithm (an ensemble technique) to address the imbalance. The current study split the dataset into training and testing sets in a 75:25 ratio. Random Forest, Logistic Regression, Naive Bayes and Support Vector Machine classifiers, along with a Voting Classifier built on them, were used to model the input data. These models were subsequently validated on the test set. The best model was the Voting Classifier, the results of which are provided below.

Voting Classifier Results

Imbalance Correction: The individual models produced results on the lower side, so a voting classifier was used. It combines the predictions of all the models and outputs the class that receives the majority of votes. With imbalanced data, precision and recall for the positive class can be too low. Changing the probability threshold of the model is one way to improve them, so we varied the probability threshold of the voting classifier. The results for different probability thresholds of the voting classifier are shown below.

Model Result
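The voting mechanism described above can be illustrated with a short sketch. The per-model outputs below are invented and the function names are hypothetical. The article does not say how the ensemble's probability was obtained, so averaging the per-model probabilities (often called soft voting) is an assumption here. With scikit-learn, the corresponding tool would be `VotingClassifier` with `voting="hard"` or `voting="soft"`.

```python
from collections import Counter


def majority_vote(predictions_per_model):
    """Combine per-model class predictions by majority vote.

    predictions_per_model: list of equal-length lists, one per model.
    Ties go to the tied class that appears first in model order.
    """
    combined = []
    for votes in zip(*predictions_per_model):
        counts = Counter(votes)
        top = max(counts.values())
        # First vote, in model order, among the classes with the most votes.
        combined.append(next(v for v in votes if counts[v] == top))
    return combined


def mean_probability(probabilities_per_model):
    """Average per-model no-show probabilities (assumed soft voting)."""
    n_models = len(probabilities_per_model)
    return [sum(ps) / n_models for ps in zip(*probabilities_per_model)]


# Invented predictions for four samples from four hypothetical models.
random_forest = [1, 0, 0, 1]
logistic_reg = [1, 0, 1, 0]
naive_bayes = [0, 0, 1, 1]
svm = [1, 1, 0, 0]
hard = majority_vote([random_forest, logistic_reg, naive_bayes, svm])
print(hard)

# Invented no-show probabilities for two samples from the same four models.
soft = mean_probability([[0.9, 0.2], [0.7, 0.4], [0.6, 0.1], [0.8, 0.3]])
print(soft)
```

Hard voting yields only a class label, so threshold tuning needs a probability-like score such as the averaged probability above.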

ROC Curve
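For readers unfamiliar with how a ROC curve is built, the sketch below computes its points and the area under it from a list of labels and scores. The labels and scores are made up and are not the study's results. The helper names `roc_points` and `auc` are hypothetical.

```python
def roc_points(y_true, scores):
    """Return (false positive rate, true positive rate) pairs.

    The threshold is swept from the highest score downwards. Samples that
    share a score are added together before a point is emitted.
    """
    positives = sum(y_true)
    negatives = len(y_true) - positives
    ranked = sorted(zip(scores, y_true), reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    for i, (score, label) in enumerate(ranked):
        if label == 1:
            tp += 1
        else:
            fp += 1
        is_last_with_score = i + 1 == len(ranked) or ranked[i + 1][0] != score
        if is_last_with_score:
            points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Made-up labels (1 = no-show) and model scores.
y_true = [1, 0, 1, 0, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.35, 0.3, 0.2, 0.1]

curve = roc_points(y_true, scores)
area = auc(curve)
print(curve)
print(round(area, 4))
```

Each point on the curve corresponds to one probability threshold, which is why the ROC curve and the threshold results are usually read together.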

Probability Threshold Results for Voting Classifier
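A threshold sweep of this kind can be sketched as follows. The labels and probabilities are made up, so the printed numbers are not the study's results. The function name `precision_recall_at` and the list of thresholds are assumptions for illustration.

```python
def precision_recall_at(y_true, probabilities, threshold):
    """Precision and recall of the positive (no-show) class at a threshold."""
    predicted = [1 if p >= threshold else 0 for p in probabilities]
    tp = sum(1 for t, p in zip(y_true, predicted) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, predicted) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, predicted) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Made-up labels (1 = no-show) and ensemble probabilities.
y_true = [1, 0, 1, 0, 0, 1, 0, 0, 1, 0]
probabilities = [0.9, 0.6, 0.55, 0.45, 0.4, 0.38, 0.3, 0.2, 0.15, 0.1]

results = {}
for threshold in (0.5, 0.35, 0.25):
    results[threshold] = precision_recall_at(y_true, probabilities, threshold)
    precision, recall = results[threshold]
    print(f"threshold={threshold:.2f} precision={precision:.2f} recall={recall:.2f}")
```

Lowering the threshold flags more appointments as likely no-shows, so recall can only stay the same or rise, usually at the cost of precision. This is the trade-off the threshold table summarises.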

Conclusion

While the individual models produced lower results, these are in line with other reported studies. The No-Show data was imbalanced, and we adjusted the probability threshold to counter the resulting low recall for the positive class. The study implemented Random Forest, Logistic Regression, Naive Bayes and Support Vector Machine models, along with a Voting Classifier based on them. With a probability threshold of 0.35, the voting classifier achieved a recall of 89%. Neighbourhood, the penalized value for non-adherence and the appointment day of the week had a greater impact on appointment no-shows than the other features.
