What is Spring Cloud Stream?

Spring Cloud Stream is a framework that decouples producer and consumer implementations from the underlying messaging platform. It also lets us switch to a different message broker by simply swapping the binder.

You can find the list of available binders here

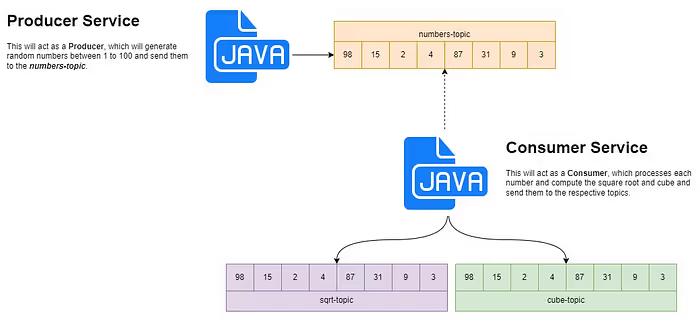

What are we gonna do?

We are going to create a simple stream processing application that will generate random numbers every second and put them into a Kafka topic called numbers-topic. And we will listen to this numbers-topic and do the below operations:

- Compute the square root of each number and put it into the sqrt-topic

- Compute the cube of each number and put it into the cube-topic

Pre-requisites:

- Basics of Java 8 function-based programming

- Spring

- Basics of Kafka

Setting up the environment

Install Kafka:

Please refer to the official documentation to set up Kafka using Docker. Alternatively, you can use the docker-compose file from this demo project to get Kafka up and running locally.

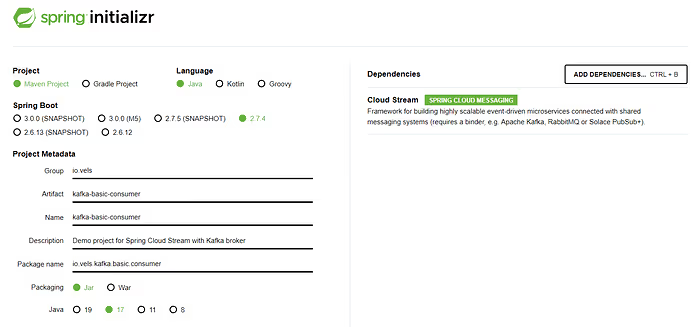

Generating Project:

I used https://start.spring.io to generate the structure of this project.

Once you generate the project, add the binder dependency below to the pom.xml. We will be using the Kafka binder in this example:

<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-stream-binder-kafka</artifactId>
</dependency>

You can find the complete code in the Git repositories below:

Producer service from here

Consumer service from here

Producer Service

To create a producer, we only need to declare a bean of type Supplier.
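A minimal sketch of such a supplier follows, written against the JDK alone so that it compiles on its own. In the actual service the method would be a @Bean inside a Spring @Configuration class. The class name ProducerSketch and the upper bound of 1000 are assumptions for illustration and are not taken from the demo project.

```java
import java.util.Random;
import java.util.function.Supplier;

// In the real service this would be a Spring @Configuration class and
// numberProducer() would carry @Bean; the annotations are omitted here
// so the sketch has no dependencies beyond the JDK.
class ProducerSketch {

    private static final Random RANDOM = new Random();

    // Exclusive upper bound for generated numbers (an assumption for illustration).
    static final int BOUND = 1000;

    // The method name is the function name that later appears in the
    // binding name numberProducer-out-0.
    static Supplier<Integer> numberProducer() {
        return () -> RANDOM.nextInt(BOUND);
    }
}
```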

Yes, we have created a Producer to send random numbers using the Java functional programming style.

Configuring Producer service

Let's jump to the configuration part, where we will see how to configure the Kafka topic name for the producer.

Since we are using Kafka, the destination should be the Kafka topic name, in this case numbers-topic. Because we defined the producer in the functional style, the binding name must follow a particular format:

<functionName> + -out- + <index>

Here, out refers to the producer (output) side. The index is the position of the output binding and is 0 for a function with a single output. You can read more about the binding naming convention here

Following this convention, the binding name for our supplier function numberProducer is:

numberProducer-out-0
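To make the convention concrete, the sketch below assembles the binding name and the matching destination property in plain Java. The class ProducerBindingSketch and its helper methods are hypothetical; only the property key format spring.cloud.stream.bindings.<bindingName>.destination and the topic name come from Spring Cloud Stream and this article.

```java
import java.util.Properties;

class ProducerBindingSketch {

    // Builds "<functionName>-<in|out>-<index>", the functional binding name.
    static String bindingName(String functionName, String direction, int index) {
        return functionName + "-" + direction + "-" + index;
    }

    // Maps the producer's output binding to the Kafka topic numbers-topic.
    static Properties producerProperties() {
        Properties props = new Properties();
        String binding = bindingName("numberProducer", "out", 0);
        props.setProperty(
                "spring.cloud.stream.bindings." + binding + ".destination",
                "numbers-topic");
        return props;
    }
}
```

In a real project the same key and value would normally live in application.properties or application.yml rather than being built in code.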

In this case, the supplier function is invoked by a default polling mechanism provided by the framework, which calls the supplier every second. We can use StreamBridge to send messages on demand, which I will cover in an upcoming blog.
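The polling behaviour can be pictured with a plain-Java analogy. This is not how the framework implements its poller. PollingSketch and its parameters are hypothetical, and the sketch only shows a supplier being invoked at a fixed interval, with each value handed to a sink that stands in for the output binding.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;
import java.util.function.Supplier;

class PollingSketch {

    // Calls the supplier `count` times, once per period, and passes each
    // value to `sink`. Blocks until all calls have completed.
    static <T> void poll(Supplier<T> supplier, Consumer<T> sink, int count, long periodMillis) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        CountDownLatch remaining = new CountDownLatch(count);
        scheduler.scheduleAtFixedRate(() -> {
            // Guard against an extra run between the last count-down and shutdown.
            if (remaining.getCount() > 0) {
                sink.accept(supplier.get());
                remaining.countDown();
            }
        }, 0, periodMillis, TimeUnit.MILLISECONDS);
        try {
            remaining.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            scheduler.shutdownNow();
        }
    }
}
```

For example, PollingSketch.poll(() -> 42, System.out::println, 3, 1000) would hand three values to the sink, roughly one second apart.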

Consumer Service

To create a consumer, we can either use Consumer or Function based on our needs. Let's implement both models in this project.
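The sketch below shows one way the three functions might look, again using only the JDK. In the actual service each method would be a @Bean in a @Configuration class. The class name ConsumerSketch, the payload types, and the log message are assumptions for illustration; the function names are the ones used in this article.

```java
import java.util.function.Consumer;
import java.util.function.Function;

class ConsumerSketch {

    // Listener only: logs each number read from numbers-topic.
    static Consumer<Integer> numberConsumer() {
        return number -> System.out.println("Received: " + number);
    }

    // Listener and producer: the returned value is what gets published
    // to the function's output binding.
    static Function<Integer, Double> consumeAndProcessSqrt() {
        return number -> Math.sqrt(number);
    }

    static Function<Integer, Long> consumeAndProcessCube() {
        return number -> {
            long n = number; // widen to long so larger inputs do not overflow int
            return n * n * n;
        };
    }
}
```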

We have one Consumer and two Functions. The Consumer acts purely as an event listener. Each Function acts as both a listener and a producer: for every message from numbers-topic, it consumes the message and publishes its result to the same or a different topic, depending on the binding configuration. In this project, each Function writes to its own topic:

- numberConsumer: listens to numbers-topic and simply logs the incoming message.

- consumeAndProcessSqrt: listens to numbers-topic, computes the square root, and returns the result.

- consumeAndProcessCube: listens to numbers-topic, computes the cube, and returns the result.

Configuring Consumer service

As discussed in the Producer section, the destination of each consumer binding should also be a Kafka topic name. Here, all three functions read from numbers-topic, while sqrt-topic and cube-topic are the output destinations of the two processors. Like the producer, the consumer binding name follows a particular format:

<functionName> + -in- + <index>

Here, in refers to the consumer (input) side. The index is the position of the input binding and is 0 for a function with a single input.

Following this convention, the binding name for our Consumer function numberConsumer is numberConsumer-in-0. Each Function needs both an in and an out binding, since it acts as both consumer and producer:

consumeAndProcessSqrt-in-0

consumeAndProcessSqrt-out-0

consumeAndProcessCube-in-0

consumeAndProcessCube-out-0

You can see that consumeAndProcessSqrt-out-0 and consumeAndProcessCube-out-0 have different destinations, sqrt-topic and cube-topic, respectively, which are based on our project needs.
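The full set of consumer-side settings can be sketched as key/value pairs in plain Java. ConsumerBindingSketch is a hypothetical helper. The spring.cloud.function.definition entry is an addition not shown in this article: it is the property Spring Cloud Stream uses to select which functional beans to bind when an application declares more than one, with names separated by semicolons.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class ConsumerBindingSketch {

    private static final String PREFIX = "spring.cloud.stream.bindings.";

    static Map<String, String> consumerProperties() {
        Map<String, String> props = new LinkedHashMap<>();

        // With several functional beans, list the ones to bind explicitly.
        props.put("spring.cloud.function.definition",
                "numberConsumer;consumeAndProcessSqrt;consumeAndProcessCube");

        // All three functions read from the same input topic.
        props.put(PREFIX + "numberConsumer-in-0.destination", "numbers-topic");
        props.put(PREFIX + "consumeAndProcessSqrt-in-0.destination", "numbers-topic");
        props.put(PREFIX + "consumeAndProcessCube-in-0.destination", "numbers-topic");

        // Each processor publishes its result to its own output topic.
        props.put(PREFIX + "consumeAndProcessSqrt-out-0.destination", "sqrt-topic");
        props.put(PREFIX + "consumeAndProcessCube-out-0.destination", "cube-topic");
        return props;
    }
}
```

As with the producer, these entries would normally be written in application.properties or application.yml of the consumer service.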

Running the Project

Once the Kafka setup is completed, make sure you are able to access the control center at http://localhost:9021.

Now, run the Producer-service and Consumer-service applications with the help of your favorite IDE. Once the applications have started successfully, you should be able to see the stream of incoming data in the control center for our topics.

Conclusion

In this blog, we saw how to use Spring Cloud Stream to send and receive messages from a Kafka topic. We discussed how to define the bindings and then used the Kafka binder dependency to send messages to the Kafka broker.

Happy Learning!

